The Top 7 Skills Needed to Become an AI Agent Architect

What you need to learn for a job in the Agent Economy of the future

How a Friday Night Bottle of Malbec is Changing What I Write About

Last Friday I spent the evening with my best friend, Gavin. We met at work 23 years ago, on a team building a retail intelligence system for a high street bank here in the UK.

Since then, Gav’s gone on to do an MBA and a PhD. He’s pretty much the smartest person I’ve ever met. Today he’s a senior systems architect at a major insurance company where he oversees all of the company’s IT delivery and has recently been given a small team via an innovation budget to start looking into “this AI Agent stuff”.

When he asked to drop by with a bottle of my favorite red in exchange for a couple of hours “talking shop”, how could I refuse?

What followed surprised me.

Gav wanted to know what kind of skills his little squad of innovation techies would need to progress some of the ideas they had been tasked to look into. After discussing the use cases, most of which involved document and image processing of one sort or another, we began talking about solution design principles and more specifically, design patterns.

It was at this point the discussion got very interesting.

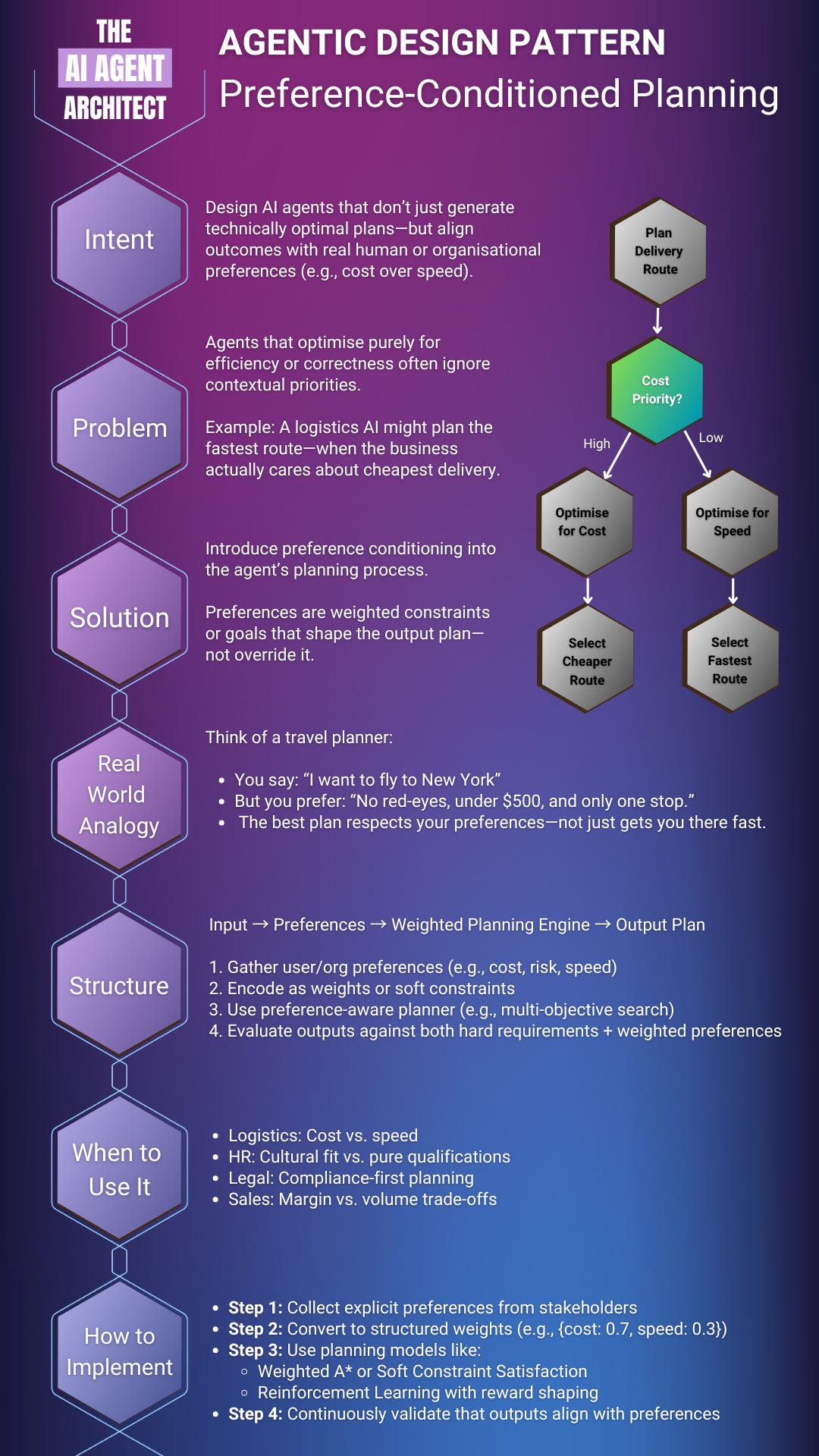

We started talking about these cute little diagrams plastered all over the likes of LinkedIn showing agentic “patterns”.

When Gav gave me his view on what the ReAct pattern was doing in the image above, he had a look on his face that suggested I’d just agree with what he said.

When I didn’t validate his understanding, he looked horrified.

I explained to him that the ReAct pattern was not a system, it was a reasoning style. The framing of the diagram was completely wrong.

At this point, I had a bit of a revelation. Cute animated pictures designed to get “likes” on social media can have a negative effect on people who want to understand this new technology and whose awareness is still at an early stage. We both agreed that this was a very serious problem, especially from Gav’s perspective, given the objective he’d been set of up-skilling his team to tackle novel work in a new space.

These 7 skills are what I told Gav’s team to focus on. And they’re what I’d tell you to focus on too — if you want to build agents that survive outside the lab.

Let’s get into it.

Why the Cute Patterns on Social Media are Wrong

Before we get to the 7 skills I think you need to work in the field of Agentics, we need to start with patterns. Not to dunk on the diagrams, but to appreciate how important patterns are.

I myself have a library of 37 patterns that I’ve developed and use when building Agent prototypes for my customers. I’ll show you the most useful one I’ve built at the end — and why I keep coming back to it.

Why do I have 37 and not 5?

Because my patterns are goal focused and not system focused.

Let me explain.

The diagrams floating around LinkedIn are popular for a reason: they look smart. They're clean, they have arrows, and they often name things like “ReAct Pattern” or “Toolformer Agent” — so you feel like you’re absorbing knowledge just by glancing at them.

But here’s the problem:

They’re not patterns. They’re oversimplified call sequences.

Take the “ReAct” example — the one my friend Gav brought up.

The diagram showed a box labelled “ReAct Agent” as if it were an entire system.

But it’s not. ReAct is a reasoning-style prompt pattern, not a complete architecture.

It’s a way of structuring LLM output so it interleaves thought and action:

Thought: I need more info.

Action: search[‘customer contract’]

Observation: ...

Thought: Now I understand.

That’s it. It’s not memory. It’s not planning. It’s not tool orchestration. It’s a style of reasoning trace.
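To show how little ReAct actually covers, here’s a minimal Python sketch that does nothing more than split a trace like the one above into labelled steps. The `Step` type and `parse_trace` function are hypothetical names for illustration, not part of any framework. Everything else an agent needs (memory, planning, tool calls) would have to live outside this.

```python
import re
from typing import NamedTuple


class Step(NamedTuple):
    kind: str      # "Thought", "Action" or "Observation"
    content: str   # the text after the label


# Matches lines such as "Action: search['customer contract']"
_STEP_RE = re.compile(r"^(Thought|Action|Observation):\s*(.*)$")


def parse_trace(text: str) -> list[Step]:
    """Split raw model output into labelled steps, skipping unlabelled lines."""
    steps = []
    for line in text.splitlines():
        match = _STEP_RE.match(line.strip())
        if match:
            steps.append(Step(match.group(1), match.group(2)))
    return steps


trace = """Thought: I need more info.
Action: search['customer contract']
Observation: ...
Thought: Now I understand."""

steps = parse_trace(trace)
for step in steps:
    print(f"{step.kind:<12}| {step.content}")
```

The parser gives you a structured trace and nothing else. Deciding what to do with an `Action`, or where an `Observation` comes from, is the job of the surrounding system.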

In my own agents, ReAct is one part of the loop, embedded inside a larger system that does things like:

Store and retrieve memory (so the agent can learn)

Make decisions about which tools to use and why

Reflect on whether the last action brought it closer to the goal

Decide if any follow up tasks are needed

Regenerate the plan if the current one isn’t working

Log output in a human-readable way for audit or follow-up

None of that is captured in the social media diagrams. They’re clean because they skip all the hard parts. Like error handling. Cost-awareness. Recovery. Fall-throughs. Context gating. Confidence scoring. The list goes on.

Real agent design requires thinking about state, intent, ambiguity, timing, trust, and long-term goals. That’s architecture. Not animation.

Those things don’t fit into four arrows and five boxes. But they are the things you’ll be asked about in a design review, a client workshop, or an incident report.

So, back to the question — why do I have 37 patterns, not 5?

Because the agents I build don’t live on whiteboards.

Now that you have the right framing, we can get on to the core skills that will get you that role working on agentic projects.

The 7 Skills You Need as an AI Agent Architect

1. Grounding Your Design Thinking in Goals, Not Tasks

This one shift changed how I approached everything when I first started.

Most developers design agents by asking: "What step comes first?"

That’s task thinking. And it works for automation.

But agents aren’t automations. They’re goal-seeking systems.

Real agent architects think in outcomes, not instructions. They ask: "What does the user want to achieve?" and then design systems that can figure out the steps themselves.

This means:

Accepting that the agent might plan differently than you would.

Designing the agent to break down ambiguous goals on its own.

Giving the agent autonomy to re-sequence tasks based on results.

In practice, this means writing prompts like: "You are a strategic analyst. Your goal is to deliver a summary report the CEO can understand without slides."

Not: "Summarise this in 3 bullets."

That shift opens the door to real autonomy.
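One way to keep yourself honest about goal framing is to make the goal a first-class object and generate the prompt from it. The sketch below is an assumption about how that could look. The `Goal` class, its fields, and the closing instruction line are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    role: str                                   # who the agent is acting as
    outcome: str                                # what "done" looks like for the user
    constraints: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render an outcome-focused prompt rather than a list of steps."""
        lines = [f"You are {self.role}.", f"Your goal is to {self.outcome}."]
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {c}" for c in self.constraints)
        lines.append("Decide the steps yourself and explain your plan before acting.")
        return "\n".join(lines)


goal = Goal(
    role="a strategic analyst",
    outcome="deliver a summary report the CEO can understand without slides",
)
prompt = goal.to_prompt()
print(prompt)
```

Notice there is no field for "steps". That omission is the point: the design forces you to describe the outcome and leave the sequencing to the agent.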

2. Treating Memory Architecture as a Priority

The vast majority of people who are new to AI and agents don’t know that LLMs are stateless. Without a memory architecture built around it, ChatGPT wouldn’t “remember” anything from one request to the next. LLMs are goldfish, and if you want to dive deeper into this challenge, here’s the material to explain it.

The ability to remember, reflect, and improve over time is what makes agents feel intelligent. This can only be achieved by designing and implementing the right memory solution to support the agent.

Here’s how I break it down:

Core Memory (Short-Term)

This is the immediate working context. The current goal, the last few steps, the conversation. It lives in the LLM prompt window and expires quickly.

Recall Memory (Medium-Term)

This is searchable. Past events, decisions, user preferences, cached outputs. Stored in a vector DB or structured lookup. Retrieved semantically, not just by keyword.

Archival Memory (Long-Term)

This is for traceability and growth. Stored outside the runtime loop. Logs, reports, reflections. Critical for learning and audit.

Designing this well means deciding:

What gets stored (not everything should).

When and how to retrieve it.

How to compress or summarise memory to avoid overload.
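The three tiers and the storage decisions above can be sketched in a few lines of Python. This is a toy under stated assumptions: the `AgentMemory` class is hypothetical, the archive is treated as an append-only log, and a plain substring match stands in for the semantic search a vector DB would provide.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentMemory:
    # Core: small working context; the oldest items fall off automatically
    core: deque = field(default_factory=lambda: deque(maxlen=5))
    # Recall: selectively stored, searchable items (stand-in for a vector DB)
    recall: dict[str, str] = field(default_factory=dict)
    # Archival: append-only log kept outside the runtime loop, for audit
    archive: list[str] = field(default_factory=list)

    def remember(self, event: str, key: Optional[str] = None) -> None:
        self.archive.append(event)
        self.core.append(event)
        if key is not None:          # only keyed events are worth recalling later
            self.recall[key] = event

    def lookup(self, query: str) -> list[str]:
        """Naive substring match on keys; a real system would search semantically."""
        q = query.lower()
        return [value for key, value in self.recall.items() if q in key.lower()]


memory = AgentMemory()
for i in range(7):
    memory.remember(f"step {i}")
memory.remember("User prefers PDF reports", key="preference:report_format")

print(list(memory.core))          # only the five most recent events remain
print(memory.lookup("report"))
```

The `key` argument is where the "what gets stored" decision shows up: most events pass through core memory and the log, and only the ones you deliberately label become retrievable.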

I used to think memory was a tech problem. Now I treat it like a storytelling problem: what’s the narrative arc of the agent’s experience?

My approach to designing memory for agents follows these principles. If you’d like to know more, I wrote about this here.

3. Master One Reasoning Loop Design

An agent that reasons once is a prompt. The real power comes from looping: Plan → Act → Reflect → Replan. This loop builds on the ReAct reasoning style, and it’s the one most commonly used in agentic solutions.

When I build a new agent, I use a pattern that follows these basic steps. As the design becomes more refined, I add steps as needed, but this serves as a pretty solid foundation.

The diagram above isn’t just a flowchart — it’s the cognitive spine of every agent I build.

Each box represents a thinking stage. Not a hard-coded step. And while an LLM might power the content in each stage, it’s not doing this in one-shot. It’s being prompted, steered, and sometimes overridden by the system around it.

Let me break it down:

Break Down Goal. The agent starts by interpreting the user’s request. What exactly are we trying to achieve? This often involves rewriting vague inputs into actionable sub-tasks.

Choose Tool or Plan Step. The agent reasons about what it needs to do next. This might involve using memory, calling a tool, or asking for clarification.

Execute or Query. The agent takes action. That might be running a search, querying a database, or triggering a document builder.

Analyse Result. It doesn’t just take the output at face value — it examines whether the result moves it closer to the goal.

Reflect and Store. This is where the agent makes itself smarter. It updates memory, records a journal entry, or adjusts future plans based on what just happened.

Then it loops back.

The LLM is used at many of these steps — but not all. And never in isolation.

Your job as the architect is to build the system that runs this loop safely, adaptively, and economically.
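The five stages above can be sketched as a plain Python loop. This is a skeleton under assumptions, not a reference implementation: `run_loop`, `LoopState`, and the three injected callables are hypothetical names, the "replan" is just a naive retry, and the stubs in the usage stand in for LLM or tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopState:
    goal: str
    pending: list[str] = field(default_factory=list)   # sub-tasks still to do
    journal: list[str] = field(default_factory=list)   # one reflection per cycle
    done: bool = False


def run_loop(
    goal: str,
    break_down: Callable[[str], list[str]],
    execute: Callable[[str], str],
    is_useful: Callable[[str, str], bool],
    max_cycles: int = 10,
) -> LoopState:
    state = LoopState(goal=goal, pending=break_down(goal))   # 1. break down goal
    for _ in range(max_cycles):                              # hard stop for safety and cost
        if not state.pending:
            break
        step = state.pending.pop(0)                          # 2. choose next plan step
        result = execute(step)                               # 3. execute or query
        useful = is_useful(step, result)                     # 4. analyse result
        state.journal.append(f"{step} -> {result} (useful={useful})")  # 5. reflect and store
        if not useful:
            state.pending.append(step)                       # naive replan: try again later
    state.done = not state.pending
    return state


# Stub callables stand in for LLM and tool calls
state = run_loop(
    goal="summarise contract",
    break_down=lambda g: [f"find: {g}", f"draft: {g}"],
    execute=lambda step: f"ok({step})",
    is_useful=lambda step, result: result.startswith("ok"),
)
print(state.done, len(state.journal))

# A step that never succeeds is cut off by max_cycles instead of looping forever
stuck = run_loop("x", lambda g: [g], lambda s: "fail", lambda s, r: False, max_cycles=3)
print(stuck.done, len(stuck.journal))
```

The `max_cycles` guard is the part that matters most in practice. Without it, a step that never produces a useful result keeps the loop running indefinitely.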

4. Knowing How and When to Ask for Help

No agent is perfect. Even the best-designed system will hit edge cases, stale data, broken tools, or ambiguity it can’t resolve.

The difference between a toy and a real agent? The real one knows when it’s out of its depth.

As the architect, your job is to embed “graceful failure” from day one. That means:

Throwing meaningful errors with human-readable summaries.

Writing to telemetry when plan steps repeatedly fail or exceed cost thresholds.

Setting up fallback behaviours: retry, rephrase, simplify, or escalate.

Giving the agent access to a “human-in-the-loop” pathway — a Slack channel, email report, or review queue.

I build all my agents with a request_human_help() step in the flow. It’s not always used, but it’s always there.

Why? Because asking for help is not a weakness. It’s how trust gets built.

The best agents don’t fake confidence. They escalate uncertainty.

That’s what makes them reliable — and actually usable in production.
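Here’s a minimal sketch of the fallback ladder described above: retry, rephrase, then escalate. The name `request_human_help` comes from this article, but its signature here is an assumption, and so is `run_with_fallbacks`. The in-memory list stands in for a Slack channel, email report, or review queue.

```python
from typing import Callable, Optional


def request_human_help(summary: str, review_queue: list[str]) -> None:
    """Hypothetical escalation hook: append a human-readable summary to a queue."""
    review_queue.append(summary)


def run_with_fallbacks(
    task: str,
    attempt: Callable[[str], Optional[str]],
    rephrase: Callable[[str], str],
    review_queue: list[str],
    max_retries: int = 2,
) -> Optional[str]:
    prompt = task
    for attempt_no in range(max_retries + 1):     # first try plus retries
        if attempt_no > 0:
            prompt = rephrase(prompt)             # rephrase or simplify before retrying
        result = attempt(prompt)
        if result is not None:
            return result
    # Out of options: escalate instead of faking confidence
    request_human_help(
        f"Task '{task}' failed after {max_retries + 1} attempts.", review_queue
    )
    return None


queue: list[str] = []

# Every attempt fails, so the task is escalated to a human
failed = run_with_fallbacks(
    "extract policy number",
    attempt=lambda p: None,
    rephrase=lambda p: p + " (simplified)",
    review_queue=queue,
)

# The second, rephrased attempt succeeds, so nothing is escalated
recovered = run_with_fallbacks(
    "extract policy number",
    attempt=lambda p: "PN-1" if "simplified" in p else None,
    rephrase=lambda p: p + " (simplified)",
    review_queue=queue,
)
print(failed, recovered, queue)
```

The escalation path sits at the end of the function, so every failure route ends in a message a person can read.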

5. Be Cognizant of Cost

Agents don’t run on magic.

They run on tokens, time, and tools.

The best agent architects think in terms of performance, asking questions like:

How many tokens does each reasoning cycle cost?

What’s the latency of each tool?

Is this loop burning time for no benefit?

This is all technology-agnostic, and it can easily be missed if we forget that architecting agentic solutions is as much about compute as it is about cognition.

In my own work, I use a cost tracker per agent run. If it crosses a threshold, the agent triggers a "budget exceeded" protocol — summarising and pausing for review. As the screenshot below shows, every interaction with an LLM is logged and costed when I’m testing.

I learnt the hard way to be cost-aware with agents. On one occasion I built a research and summarising agent that cost £92 in a weekend because I forgot to limit retries from web searches.

That mistake made me paranoid. And better.
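A per-run cost tracker with a "budget exceeded" stop can be sketched like this. It is illustrative only: the `CostTracker` class is hypothetical, and the flat price per 1,000 tokens is a made-up number, not a real provider rate.

```python
from dataclasses import dataclass, field
from typing import Optional


class BudgetExceeded(Exception):
    """Raised when a run crosses its spending threshold."""


@dataclass
class CostTracker:
    budget_gbp: float
    price_per_1k_tokens_gbp: float                    # assumed flat rate, for illustration
    calls: list[tuple[str, int, float]] = field(default_factory=list)

    @property
    def spent_gbp(self) -> float:
        return sum(cost for _, _, cost in self.calls)

    def record(self, label: str, tokens: int) -> None:
        """Log one LLM interaction and stop the run if the budget is crossed."""
        cost = tokens / 1000 * self.price_per_1k_tokens_gbp
        self.calls.append((label, tokens, cost))
        if self.spent_gbp > self.budget_gbp:
            raise BudgetExceeded(
                f"Spent £{self.spent_gbp:.2f} of £{self.budget_gbp:.2f} "
                f"budget after {len(self.calls)} calls"
            )


tracker = CostTracker(budget_gbp=0.05, price_per_1k_tokens_gbp=0.01)
paused_reason: Optional[str] = None
try:
    tracker.record("plan", 2000)
    tracker.record("web search retry", 2000)
    tracker.record("summarise", 2000)      # this call pushes the run over budget
except BudgetExceeded as exc:
    paused_reason = str(exc)               # summarise and pause for review here

print(paused_reason)
```

Raising an exception rather than returning a flag is a deliberate choice in this sketch: an unbounded retry loop cannot quietly ignore it.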

If you’d like to learn more about Agent Economics, Part 4 of my Anatomy series will explain the importance of cost in more detail.

6. Explainability is Everything

It’s common for people to think explainability is about logs.

It’s not.

It’s about modelling understanding.

In traditional software, explainability means stack traces and debug messages.

But in agent systems, it means answering one key question:

“Why did the agent do that?”

To answer that question, your agent needs structure. And that’s where graph databases come in.

Why Graphs Matter

A graph is a map of relationships — not just raw facts. This example below shows how candidates for a job relate to the skills advertised in a job spec.

In this example, it shows not only what the agent knows, but how those pieces of knowledge connect:

A candidate has a skill.

That skill matches a job requirement.

That match is supported by evidence.

This structure allows the agent to:

Trace its decisions (“I ranked Bobs first because she used PyTorch at DeepMind.”)

Show reasoning paths (“From candidate → employer → project → technology → domain expertise.”)

Recover context when loops need to replan or when humans step in.
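To make the idea of a traceable reasoning path concrete, here’s a toy directed graph in plain Python. It is a sketch only: `DecisionGraph`, the node labels, and the relation names are hypothetical, and a real system would use a graph database instead of a dictionary.

```python
from collections import defaultdict
from typing import Optional


class DecisionGraph:
    def __init__(self) -> None:
        # source node -> list of (relation, target node)
        self._edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, source: str, relation: str, target: str) -> None:
        self._edges[source].append((relation, target))

    def explain(
        self, start: str, goal: str, _seen: Optional[set[str]] = None
    ) -> Optional[list[str]]:
        """Depth-first search returning the chain of hops from start to goal."""
        seen = _seen or {start}
        for relation, target in self._edges.get(start, []):
            if target in seen:
                continue                       # avoid walking in circles
            hop = f"{start} -[{relation}]-> {target}"
            if target == goal:
                return [hop]
            rest = self.explain(target, goal, seen | {target})
            if rest is not None:
                return [hop] + rest
        return None


graph = DecisionGraph()
graph.add("candidate:A", "HAS_SKILL", "skill:PyTorch")
graph.add("skill:PyTorch", "MATCHES", "requirement:deep learning")
graph.add("candidate:A", "WORKED_AT", "employer:DeepMind")

path = graph.explain("candidate:A", "requirement:deep learning")
for hop in path or []:
    print(hop)
```

Each hop in the returned path is a piece of the answer to "why did the agent do that?", which is something a flat log can’t give you without reconstruction.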

Explainability You Can Query

Using a graph database like Neo4j means your agent doesn’t just log decisions — it models them.

It builds a memory that:

Is persistent and queryable

Reflects real-world relationships

Can support multi-hop reasoning

This goes way beyond JSON dumps or chat logs. It gives you a living system of truth.

Clients trust agents when they can ask, “Why was this decision made?” — and get a structured, inspectable answer.

That’s why I say: if you want to build explainable agents, you need to learn what a graph is, when to use one, and how it changes what your system understands.

Explainability isn’t a layer you add at the end. It’s a structure you design from the start.

7. Don’t Use a Sledgehammer to Crack a Nut

Learning how to use tools is important. But knowing when not to use them? That’s a skill. Good agent architects know when to reach for a tool — and when to get out of the agent’s way.

In the early days, I overloaded my agents with every tool I could find — search APIs, scraping utilities, JSON transformers, PDF parsers. I thought more tools meant more power.

It didn’t. It just meant more things to go wrong.

Here’s the truth: tools are not features. They’re just ways to get a job done. The best tools are boring, invisible, and scoped to a specific purpose.

When designing an agent, I ask three questions:

What decision is the agent trying to make?

What minimal data does it need to make that decision?

What’s the cheapest, most reliable way to get that data?

That’s it. If the tool doesn’t help answer that decision — or adds more cost and complexity than value — it doesn’t belong.
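Those three questions translate fairly directly into a selection rule: filter by the data the decision needs, filter by reliability, then take the cheapest. The sketch below assumes a hypothetical `Tool` record and `pick_tool` function. The tool names, costs, and reliability scores are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tool:
    name: str
    provides: frozenset[str]     # data fields this tool can return
    cost_per_call: float         # illustrative units, not real prices
    reliability: float           # assumed success rate between 0 and 1


def pick_tool(
    tools: list[Tool], needed: set[str], min_reliability: float = 0.95
) -> Optional[Tool]:
    """Return the cheapest tool that covers the needed data and is reliable enough."""
    candidates = [
        t for t in tools
        if needed <= t.provides and t.reliability >= min_reliability
    ]
    return min(candidates, key=lambda t: t.cost_per_call, default=None)


tools = [
    Tool("web_search", frozenset({"policy_number", "holder_name", "news"}), 0.02, 0.80),
    Tool("pdf_parser", frozenset({"policy_number"}), 0.005, 0.97),
    Tool("policy_db_lookup", frozenset({"policy_number", "holder_name"}), 0.001, 0.99),
]

chosen = pick_tool(tools, needed={"policy_number"})
print(chosen.name if chosen else "no suitable tool")

# No tool provides this, so the function returns None
nothing = pick_tool(tools, needed={"weather"})
```

The broadest tool in the list, `web_search`, is never chosen here. It can answer the question, but it fails the reliability bar and costs the most.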

Great agent architects:

Keep their toolset lean.

Prefer reliability over cleverness.

Give the agent enough information to move forward, not overload it with noise.

You don’t need to be a developer to get this right.

You just need to think like a strategist: What’s the outcome we want, and what’s the simplest, most stable path to get there?

Your agent is the decision-maker.

The tool is the assistant — not the hero.

Did You Notice What I Didn’t Include..?

At no point in those 7 skills did I hammer the point about you needing to be an expert in LLMs.

Weird?

In a world where every social media post pontificates about the latest release from OpenAI or Anthropic, I’ve hardly mentioned the AI.

Let me show you why…

Below is a high level model of my own framework. Notice the blocks in Blue on the bottom right? That’s the LLM part. The language model of an agentic system makes up 5% of the overall architecture.

The message I want to get across?

If you allow the noise on social media to influence you about LLMs, you’ll miss the 95% of the picture that will get you hired.

Bonus: A Real Pattern You’ll Use Repeatedly

This is easily the pattern I’ve used the most when building agents for real business use cases. It’s a total fallacy that an agent can give you the right answer without a high degree of relevant context to guide its decision-making.

This pattern, and the need for it, is the perfect example of how “fully autonomous” agents are nothing more than fluffy unicorns!

In Closing

You don’t need to memorise 100 patterns or master every agent framework on the planet.

You just need to understand what makes an agent behave like an agent — and why most people get it wrong.

These 7 skills are the foundation. They’re what I use when I build agents that run real-world processes, generate real documents, and serve real clients. They’re what I shared with Gav’s team. And they’re what I’d share with yours.

Because the truth is this:

Most people are building bots with fancy coats.

A real agent makes decisions, adapts, remembers, reflects, and knows when to ask for help.

That’s not magic. That’s architecture.

And now, you know where to start.

Until the next one, Chris.
