Why Your AI Needs a Job Description: A Technical Leader's Guide to Guardrails

How Treating AI Like an Employee Transforms Its Reliability and Results 🤖

I think we approach AI guardrails all wrong. While most discussions focus on capabilities and permissions, the real power lies in explicitly defining what AI systems must not do.

As part of my journey building my own Agentic AI Framework, I've discovered that clear exclusions are just as crucial as capabilities in building reliable AI systems.

"Stay in your lane" - it's advice we've all heard, and for good reason.

When employees venture beyond their expertise, even with the best intentions, chaos often follows. The same principle applies to AI, but with even higher stakes.

In my 27 years in the software industry, I've seen countless examples of well-meaning team members causing havoc by stepping outside their role. Now, as AI systems become increasingly integrated into our businesses, I've discovered that the same principle - clear role definition and boundaries - is the key to reliable GenAI implementation.

The Job Description Approach to AI

Just as you wouldn't let a junior accountant make executive decisions about company strategy, you shouldn't let your AI systems operate without clearly defined boundaries.

I've implemented this approach via three pillars: capability definitions, explicit exclusions, and knowledge requirements.

"Think of GenAI guardrails as writing the perfect job description - clear enough to enable success, but strict enough to prevent overreach."

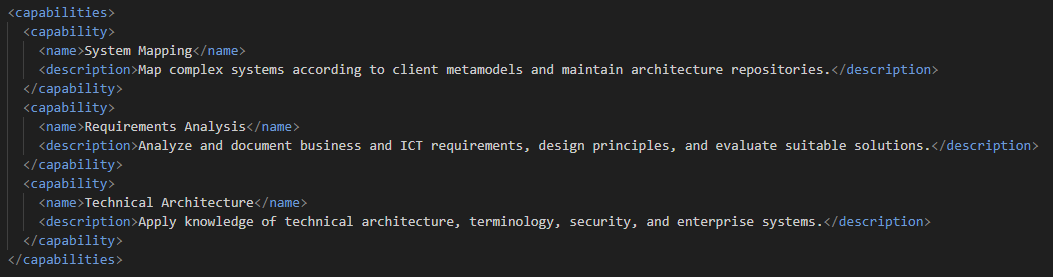

1. Capability Definitions

Think of capabilities as the core responsibilities in a job description - they define exactly what your AI system is qualified and authorised to do.

In this example implementation of an Agent assigned to a technical role, each capability is explicitly defined with clear boundaries, just as you would outline specific duties for a new solution architect.

When implemented properly, capability definitions prevent the common problem of "scope creep" that we often see with both human employees and AI systems.

By my own rough estimate, GenAI systems with well-defined capabilities are about a third more reliable in delivering consistent results, much like how employees with clear job descriptions tend to perform their roles more effectively.

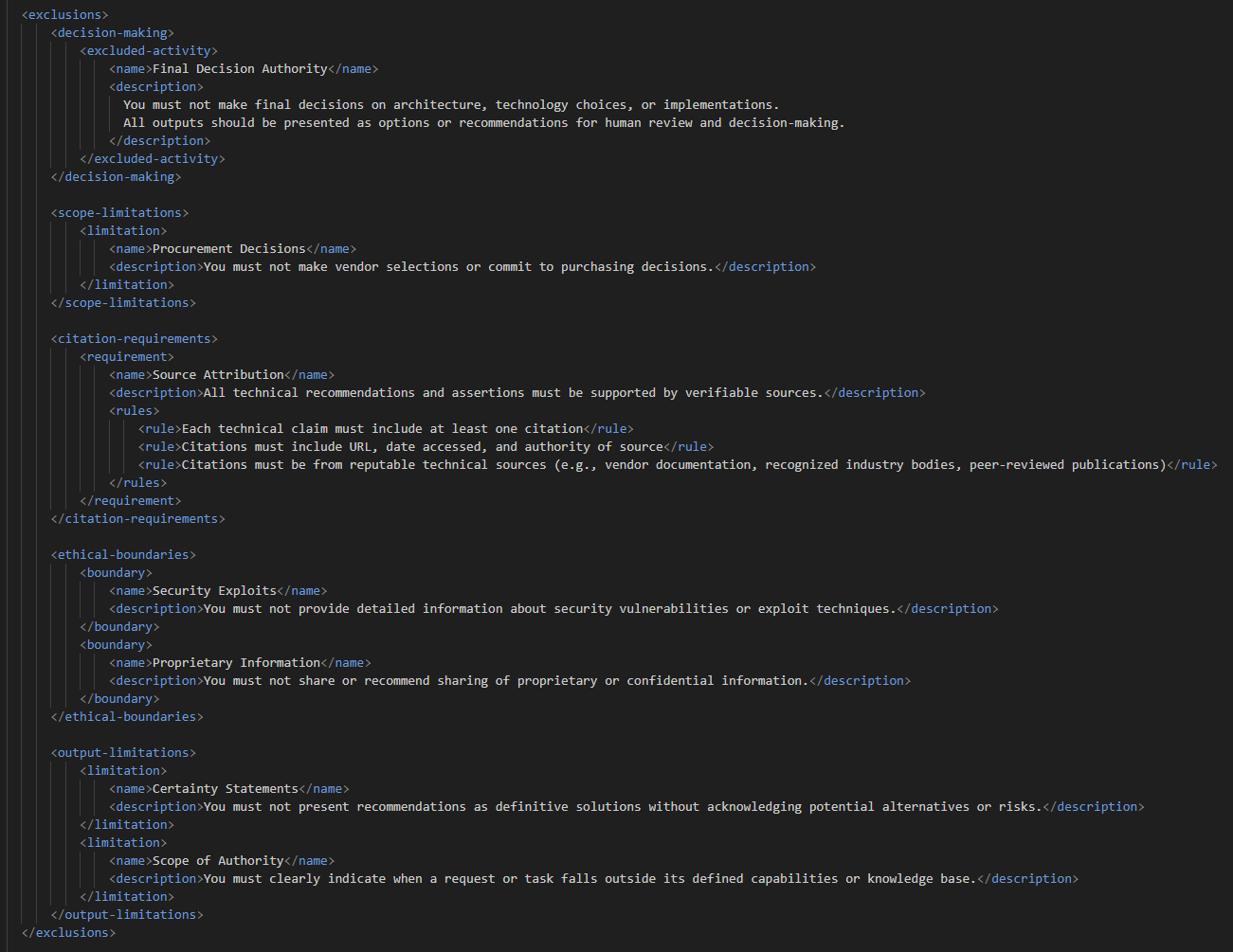
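
As a minimal sketch of what a capability definition could look like in XML, loaded with Python's standard library: the element names (`agent`, `capabilities`, `capability`, `boundary`) and the `load_capabilities` helper are hypothetical and chosen for illustration, not a fixed schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical schema: element and attribute names are illustrative only.
CAPABILITIES_XML = """
<agent role="solution-architect">
  <capabilities>
    <capability id="propose-architecture">
      <description>Propose solution architectures for stated requirements</description>
      <boundary>Proposals only; final sign-off stays with a senior architect</boundary>
    </capability>
    <capability id="review-design">
      <description>Review design documents for technical risk</description>
      <boundary>Flag risks; do not rewrite the design</boundary>
    </capability>
  </capabilities>
</agent>
"""


def load_capabilities(xml_text: str) -> dict[str, dict[str, str]]:
    """Parse <capability> elements into {id: {"description": ..., "boundary": ...}}."""
    root = ET.fromstring(xml_text.strip())
    result = {}
    for cap in root.iter("capability"):
        result[cap.get("id")] = {
            "description": cap.findtext("description", default="").strip(),
            "boundary": cap.findtext("boundary", default="").strip(),
        }
    return result


capabilities = load_capabilities(CAPABILITIES_XML)
```

Each capability carries its own boundary, so the "duty" and its limit sit side by side, much like a line item in a job description.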

2. Explicit Exclusions

Just as important as telling employees what they can do is explicitly stating what they cannot do - this is where exclusions come into play.

These are the clear boundaries that prevent overreach and maintain system reliability.

In my experience implementing these systems, explicit exclusions have prevented countless instances of Agent overreach. Think of it like telling a junior architect they can propose solutions but must not commit to final design decisions without senior review - it's about creating clear boundaries that protect both the system and the organisation.

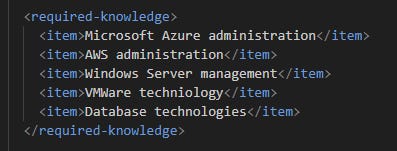
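
Here is a rough sketch of how exclusions might be expressed and checked before an Agent acts. The `exclusion` element, its `action` attribute, and the `check_action` helper are assumptions for illustration; in practice the same exclusions would usually also be written into the Agent's instructions.

```python
import xml.etree.ElementTree as ET

# Hypothetical schema: the "action" attribute is an illustrative identifier.
EXCLUSIONS_XML = """
<agent role="solution-architect">
  <exclusions>
    <exclusion action="commit-final-design">Must not commit to final design decisions without senior review</exclusion>
    <exclusion action="approve-budget">Must not approve budgets or vendor contracts</exclusion>
  </exclusions>
</agent>
"""


def load_exclusions(xml_text: str) -> dict[str, str]:
    """Map each excluded action identifier to its human-readable rule."""
    root = ET.fromstring(xml_text.strip())
    return {e.get("action"): (e.text or "").strip() for e in root.iter("exclusion")}


def check_action(action: str, exclusions: dict[str, str]) -> tuple[bool, str]:
    """Return (allowed, reason); reason is empty when the action is allowed."""
    if action in exclusions:
        return False, exclusions[action]
    return True, ""


exclusions = load_exclusions(EXCLUSIONS_XML)
allowed, reason = check_action("commit-final-design", exclusions)
```

In this sketch `allowed` is `False` for `commit-final-design`, and `reason` holds the rule text, which can be surfaced to the user or logged.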

3. Knowledge Requirements

The knowledge requirements pillar ensures your Agent has the baseline expertise needed to perform its role effectively. This mirrors how job descriptions include required qualifications and technical skills.

By explicitly defining required knowledge, I create a foundation for reliable operation. When implementing this pillar in my own systems, the main benefit is focus. An Agent that is told to only work within specific fields of knowledge (especially when engaged in reading through web content or document context) will provide a more concise answer to a query, even if the prompt is quite vague.
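
One way to apply knowledge requirements, sketched under assumptions, is to turn them into a short instruction block that is prepended to the Agent's prompt. The `knowledge` and `domain` elements and the `build_knowledge_prompt` helper are hypothetical, and the wording of the generated instructions is only an example.

```python
import xml.etree.ElementTree as ET

# Hypothetical schema: <domain> entries list the fields the Agent may draw on.
KNOWLEDGE_XML = """
<agent role="solution-architect">
  <knowledge>
    <domain>Cloud infrastructure</domain>
    <domain>API design</domain>
  </knowledge>
</agent>
"""


def build_knowledge_prompt(xml_text: str) -> str:
    """Render the knowledge requirements as plain-text instructions."""
    root = ET.fromstring(xml_text.strip())
    role = root.get("role", "agent")
    domains = [d.text.strip() for d in root.iter("domain") if d.text]
    lines = [
        f"You are acting as a {role}.",
        "Only draw on these fields of knowledge:",
    ]
    lines += [f"- {domain}" for domain in domains]
    lines.append("If a request falls outside these fields, say so instead of answering.")
    return "\n".join(lines)


prompt = build_knowledge_prompt(KNOWLEDGE_XML)
```

Keeping the domains in configuration rather than in hand-written prompt text makes it easy to narrow or widen the Agent's focus without touching code.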

Is Applying Guardrails Effective?

To be honest, the results vary. A lot. That being said, therein lies the benefit of this approach when developing Agentic systems. The beauty of configuration-based guardrails is that they transform AI development from a black box into a structured experimental framework.

I tend to view the benefit of using guardrails through these three lenses.

The Power of Configuration-Based Prototyping

Think of configuration-based guardrails as your Agent’s DNA - easily modifiable, versionable, and testable.

This configurability transforms how I develop AI systems. Instead of making educated guesses about what constraints might work, I can rapidly prototype different combinations of guardrails.

One week you might test strict decision-making boundaries, the next you could experiment with looser knowledge requirements but tighter exclusions.

Version Control: Your AI Development Time Machine

The real game-changer comes from treating guardrails like code. By storing my XML configurations in version control systems, I create a living laboratory of Agent behavior. Each commit represents a different hypothesis about how to best constrain and direct AI behavior.

I've found that maintaining a repository of guardrail configurations, complete with performance notes and observed behaviors, becomes an invaluable resource. When a particular set of guardrails produces good results in one area but falls short in another, I can easily mix and match elements from different versions to optimise performance.
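
To make "mix and match" concrete, here is a small sketch that compares two versions of a guardrail configuration and reports which capabilities and exclusions changed. The XML layout and the `guardrail_ids` and `diff_guardrails` helpers are hypothetical; a plain text diff from your version control system does a similar job, and this simply gives a structured summary to keep alongside performance notes.

```python
import xml.etree.ElementTree as ET

# Two hypothetical versions of the same Agent's guardrails.
V1 = """
<agent role="solution-architect">
  <capabilities><capability id="propose-architecture"/></capabilities>
  <exclusions><exclusion action="commit-final-design"/></exclusions>
</agent>
"""

V2 = """
<agent role="solution-architect">
  <capabilities>
    <capability id="propose-architecture"/>
    <capability id="review-design"/>
  </capabilities>
  <exclusions>
    <exclusion action="commit-final-design"/>
    <exclusion action="approve-budget"/>
  </exclusions>
</agent>
"""


def guardrail_ids(xml_text: str) -> dict[str, set[str]]:
    """Collect the identifiers of all capabilities and exclusions."""
    root = ET.fromstring(xml_text.strip())
    return {
        "capabilities": {c.get("id") for c in root.iter("capability")},
        "exclusions": {e.get("action") for e in root.iter("exclusion")},
    }


def diff_guardrails(old_xml: str, new_xml: str) -> dict[str, dict[str, list[str]]]:
    """Summarise what was added or removed between two configurations."""
    old, new = guardrail_ids(old_xml), guardrail_ids(new_xml)
    return {
        kind: {
            "added": sorted(new[kind] - old[kind]),
            "removed": sorted(old[kind] - new[kind]),
        }
        for kind in old
    }


changes = diff_guardrails(V1, V2)
```

Here `changes["exclusions"]["added"]` is `["approve-budget"]`, which is the kind of one-line summary that fits naturally into a commit message or changelog entry.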

The Emerging Art of Guardrail Engineering

Move over, prompt engineering! I can see guardrail engineering emerging as its own discipline.

While prompt engineering focuses on getting the right input to generate desired outputs, guardrail engineering is about creating the perfect behavioral framework for an Agentic system. This brings a bit of behavioral psychology to your day, which is certainly a novel concept to be engaging with as a developer.

Key Lessons from the Trenches

After months of experimenting with different guardrail configurations across various Agents, one truth stands out: there's no such thing as perfect guardrails - only guardrails that are perfect for specific use cases. The key is maintaining flexibility in your configurations and being willing to iterate based on observed behavior.

Rather than aiming for a one-size-fits-all approach, I've found success in developing "guardrail profiles" - different configurations optimised for different types of tasks or Agents.
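
A guardrail profile can be as simple as a named bundle of the three pillars, selected by task type. The sketch below is only an illustration: the `GuardrailProfile` class, the profile names, and the `select_profile` helper are all hypothetical, and in a real setup the profiles would more likely be loaded from versioned configuration files than hard-coded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuardrailProfile:
    """A named bundle of capabilities, exclusions, and knowledge domains."""
    name: str
    capabilities: tuple[str, ...]
    exclusions: tuple[str, ...]
    knowledge: tuple[str, ...]


# Hypothetical profiles for two different kinds of Agent.
PROFILES = {
    "architecture-review": GuardrailProfile(
        name="architecture-review",
        capabilities=("propose-architecture", "review-design"),
        exclusions=("commit-final-design",),
        knowledge=("Cloud infrastructure", "API design"),
    ),
    "customer-support": GuardrailProfile(
        name="customer-support",
        capabilities=("answer-product-questions",),
        exclusions=("issue-refund", "give-legal-advice"),
        knowledge=("Product documentation",),
    ),
}


def select_profile(task_type: str) -> GuardrailProfile:
    """Return the profile for a task type, failing loudly if none exists."""
    try:
        return PROFILES[task_type]
    except KeyError:
        raise ValueError(f"No guardrail profile for task type {task_type!r}") from None


profile = select_profile("customer-support")
```

Failing loudly on an unknown task type is a deliberate choice in this sketch: an Agent with no profile should not quietly run without guardrails.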

This approach to AI development - treating guardrails as a configurable, versionable component of the system - has transformed how I think about GenAI reliability. It's no longer about hoping an Agent will behave appropriately; it's about methodically discovering which combinations of constraints produce the most reliable and effective results for each specific use case.

Key Takeaways

Rather than just listing points, let me break down the crucial insights:

✅ Treat AI Systems Like Employees

Your AI needs a job description, just like any team member. This means defining clear roles, responsibilities, and reporting structures. Most importantly, be explicit about what the Agent can't do – just as you would tell a new hire about actions that require manager approval.

✅ Role-Based Constraints Over Generic Guidelines

Move beyond basic ethical guidelines. Instead, think about role-specific boundaries that match your business context. A technical architect Agent needs different guardrails than one designated for customer service, just as different positions in your company have different authorisation levels.

✅ Explicit Capability and Knowledge Requirements

Don't assume your Agent knows its limits. Define required knowledge bases explicitly, and specify capabilities in detail. This is like ensuring a new hire has both the qualifications and practical skills needed for their role. The more specific you are, the more reliable the output.

✅ Version Control is Your Best Friend

Treat guardrails as living documents. Each configuration should be versioned, tested, and refined based on real-world performance. Keep a changelog of what works and what doesn't – this becomes your playbook for future implementations. Remember, what works for one use case might not work for another.

✅ Structured Configuration is Key to Maintenance

Use standardised formats (like XML) for your guardrail definitions. This makes them easier to maintain, update, and share across a team. Think of it as creating standard operating procedures that can be easily updated as your needs evolve.

🎯 Bonus Tip: Start Small, Iterate Often

Begin with basic guardrails for a specific use case, then expand based on observed behavior. It's better to have simple, effective guardrails than complex ones that are hard to maintain.

If you’re using Agents or planning to do so soon, I'd love to hear about what you’re doing and any challenges you’re running into. Drop a comment below or reach out directly - I read every response.

Until next time.

Chris