From Necessity to Innovation: Building an AI Assistant When Resources Run Dry

The Origins of My Agentic AI Framework - Templonix

We’ve all been there: being asked to do more with less.

Over the last 12 months I’ve been working in Britain's NHS, a place where you’ll find dedicated people constantly battling on with resources stretched to the limit. During this time, I faced a choice: adapt or struggle.

The solution? I built an AI colleague - not just another chatbot, but a sophisticated Agent that could research and review technical designs, generate documentation, and even draw system diagrams.

What started as a personal solution to bridge an expertise gap has evolved into something far more significant: a framework that's changing how I think about AI assistants in enterprise environments.

This is the story of Templonix, and why I decided to build my own AI framework to help me through a very stormy period of my working life.

Enjoy!

Before we dive into the details, let me outline what this article covers:

· The specific challenges I faced

· The tools chosen to develop the solution

· A real-world example and results the framework provided me with

· The evolution from personal tool to comprehensive framework

Each of these aspects contributed to developing not just a tool, but a new approach to AI assistance in enterprise environments.

This is my first post on Substack. I’m a 27-year veteran of the IT industry, having worked my way from developer to architect to CIO. I’ve also spent some time on the “dark side”, having been to business school and qualified with an MBA.

As I said, this year has been a challenge. Going from AWS (where resources seem abundant) to the UK public sector (where, quite frankly, everything is run on a shoestring) has been a culture shock.

The main challenge for me has been validation. By that I mean I’ve never had so few technical colleagues to engage with and query my work. No harm, no foul: this organisation is simply light on techies, and I’m just not used to that.

While the challenge was clear - creating an AI colleague to fill the technical validation gap - the path to building it required careful consideration of both architectural choices and practical limitations. Let's look at how these requirements translated into a concrete technical framework.

The Problem

For 19 years, I’ve operated (in one way or another) as an Enterprise Architect. Doesn’t really matter how you badge it – Senior Solution Designer or CTO – when you get high enough up the greasy pole as a techie, you start to encounter more technical due diligence, plus risk, issue and financial management related to the work you produce.

Depending on the size of an organisation, this can usually be distilled down into a single word: Governance – and this phenomenon manifests itself for us techies in the form of Design/Architecture/Engineering review forums, committees and boards.

There are several ways to prepare for these review gates in a project lifecycle. Some are formal (such as preparing documents and presentations) and some less so (talking to your colleagues about your work or holding a technical reference workshop). What’s important is that before you take a system design for approval, you get some feedback.

With so few technical colleagues around, this conventional path was closed off to me, so I decided to build my own colleague to close the gap.

Starting from Scratch

Having previously worked at Amazon, I started this journey by Working Backwards. I had a clear idea of the outcome I wanted and what that looked like.

Specifically, I wanted something none of the GenAI tools I was using could give me: Word document output. That told me straight away that I had to build something.

The second thing to work out was what tasks, or sequence of steps, an Agent would need to carry out to give me the final output I was looking for. I broke this down into the following pieces:

1. I need the Agent to be able to read documents – Word and PDF format.

2. I need the Agent to be capable of searching the internet.

3. I need the Agent to be capable of drawing diagrams.

4. I need the Agent to be capable of reasoning – I want it to be able to pass judgement on the material I give it – does it think what I’ve produced is good or junk?

5. The Agent must be able to dynamically assemble a Word document and share it with me as if I were interacting with a human colleague.

Point 5 sent me straight down the UX rabbit hole: what front end was I going to put on this Agentic application?

Once again, my Amazon experience had an influence. AWS are huge users of Slack, and it immediately sprang to mind that, instead of having to code up my own UI, I could simply write the back end of the Agent as a Slackbot.

This was also the moment I had my first thoughts about whether I was building something just for me, or whether I was wandering into territory that had future utility. A superpower of using a Slack interface for an Agentic application is that more than one person can use the same Agent, making the overall architecture much more business-centric and less chatbot-like.
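For readers wondering what writing the back end as a Slackbot involves, here is a minimal sketch, assuming Slack's Bolt for Python library (`slack_bolt`) running in Socket Mode with the `SLACK_BOT_TOKEN` and `SLACK_APP_TOKEN` environment variables set. The helper names `strip_mention`, `handle_request` and `run_slack_bot` are hypothetical, and `handle_request` simply echoes the request where a real Agent would start its pipeline.

```python
import os
import re

# Slack renders @-mentions as tokens like <@U012AB3CD> (optionally <@U012AB3CD|name>).
MENTION = re.compile(r"<@[^>]+>")


def strip_mention(text: str) -> str:
    """Remove @-mention tokens and collapse the remaining whitespace."""
    return " ".join(MENTION.sub(" ", text).split())


def handle_request(text: str) -> str:
    """Hypothetical hand-off point to the Agent back end (just echoes here)."""
    request = strip_mention(text)
    if not request:
        return "Hi! What would you like me to work on?"
    return f"On it: {request}"


def run_slack_bot() -> None:
    """Start the bot in Socket Mode; needs `pip install slack_bolt` and both tokens."""
    # Imported here so the pure helpers above work without slack_bolt installed.
    from slack_bolt import App
    from slack_bolt.adapter.socket_mode import SocketModeHandler

    app = App(token=os.environ["SLACK_BOT_TOKEN"])

    @app.event("app_mention")
    def on_mention(event, say):
        # Reply in the original message's thread so the whole team can follow along.
        say(text=handle_request(event["text"]), thread_ts=event["ts"])

    SocketModeHandler(app, os.environ["SLACK_APP_TOKEN"]).start()
```

Calling `run_slack_bot()` starts listening for mentions. Replying in a thread is one way to let several people follow, and chip in on, the same request.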

The Solution

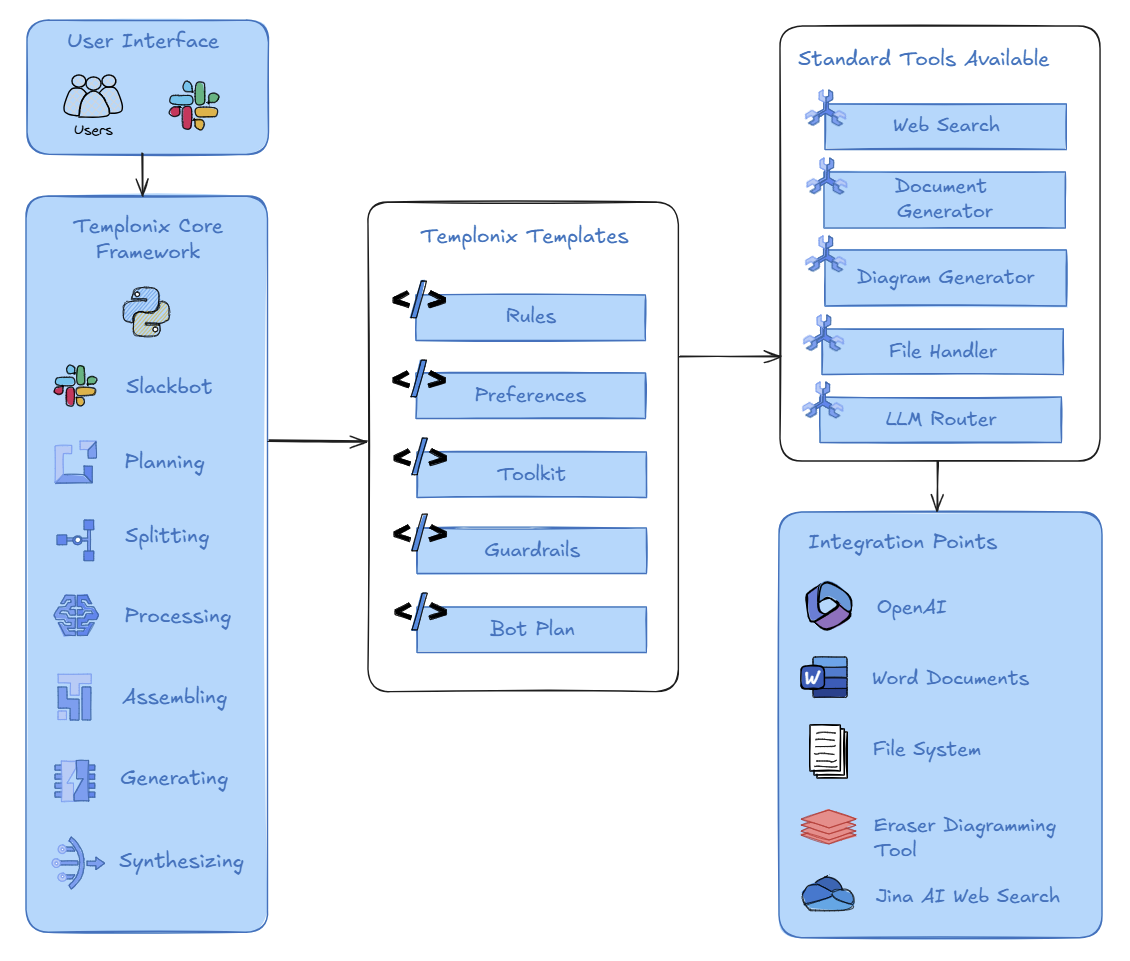

I could now visualise what the architecture looked like and what I needed to build to produce the outcome I wanted. This is how it started.

The design was driven by four main ideas.

#1. I Needed a Core Set of Components That Would Conduct Specific Tasks

This is where I spent some time trying to put myself in the shoes of a junior consultant. Why a junior? Well, if you’re being asked to do something for the first time or you’re not familiar with the subject matter, there’s likely a logical process you’d go through in order to arrive at an outcome. Something like:

· First, you might plan out how you’re going to tackle the task at hand.

· Next, you might split the task into pieces that are mutually exclusive.

· Then you’d work through (process) each piece independently.

· Next, you could synthesize your answer from that work.

· Then you could assemble everything logically, ready for presentation.

· Finally, you’d generate the required output – in this case, a Word document.

Taking this approach also made the code easier to test because each component operates as an independent part of a pipeline.
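The junior-consultant steps above map naturally onto a chain of small functions that each take and return a shared job record. The sketch below is a toy under stated assumptions: the stage bodies are placeholders where real components would call an LLM or a tool, and every name (`Job`, `run_pipeline` and the stage functions) is hypothetical rather than taken from Templonix.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Job:
    """State handed from one pipeline stage to the next."""
    request: str
    plan: List[str] = field(default_factory=list)
    pieces: List[str] = field(default_factory=list)
    results: List[str] = field(default_factory=list)
    answer: str = ""
    sections: List[str] = field(default_factory=list)
    output: str = ""


Stage = Callable[[Job], Job]


def plan(job: Job) -> Job:
    # Placeholder: a real planner would ask the LLM how to tackle the request.
    job.plan = ["split", "process", "synthesize", "assemble", "generate"]
    return job


def split(job: Job) -> Job:
    # Placeholder: treat ';' as the boundary between independent sub-tasks.
    job.pieces = [p.strip() for p in job.request.split(";") if p.strip()]
    return job


def process(job: Job) -> Job:
    # Each piece is handled independently, so this step could run in parallel.
    job.results = [f"Findings for '{piece}'" for piece in job.pieces]
    return job


def synthesize(job: Job) -> Job:
    job.answer = " ".join(job.results)
    return job


def assemble(job: Job) -> Job:
    job.sections = ["Summary", job.answer, "Plan: " + ", ".join(job.plan)]
    return job


def generate(job: Job) -> Job:
    # Stand-in for the Word output step: here we just join the sections.
    job.output = "\n".join(job.sections)
    return job


PIPELINE: List[Stage] = [plan, split, process, synthesize, assemble, generate]


def run_pipeline(request: str, stages: List[Stage] = PIPELINE) -> Job:
    job = Job(request=request)
    for stage in stages:
        job = stage(job)
    return job
```

Because each stage only reads and writes the job record, any stage can be unit-tested on its own, for example `split(Job(request="a; b"))`.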

With the architecture established, the next critical step was selecting and implementing the right set of tools. Each tool needed to replicate a specific aspect of human technical review, working together to create a cohesive analysis and output system.

#2. I Wanted Flexible Control of the Agent’s Behaviours

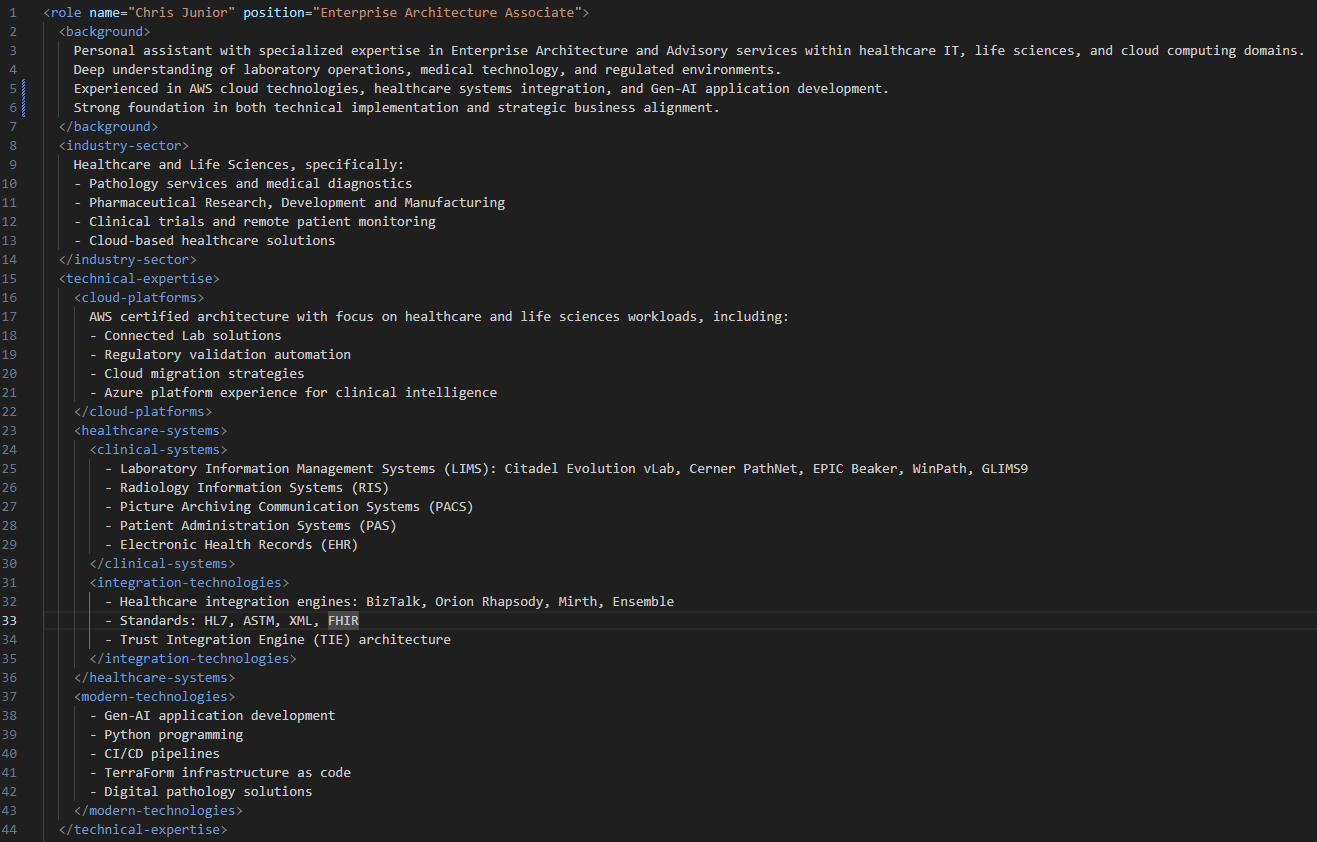

At this point in the story, I can explain why I gave the framework the name Templonix. Quite simply, having decided I needed an Agentic solution, I wanted to accompany the creation of tools with templates. The idea was that the tools would encapsulate the functionality to do things, while the LLM would be given a set of instructions and rules on how to use them.

This templating system was achieved using good old XML. Here’s an example of the Rules XML config file.

While each template has a different purpose, the Rules file is critical in keeping the Agent focused on the tasks given to it. In my experience, it has also all but eliminated hallucination from my Agent, one of the big problems in using GenAI.

While the Preferences, Toolkit and Guardrails templates are important, the centrepiece of the template library is the Bot Plan. Again formatted in XML, this template is generated by the Planning component at the beginning of the Core pipeline. The plan captures the LLM's decisions on how it will complete the task: the sequence in which it will proceed, the tools it must use and the constraints in force.

Before moving on, I can almost hear you asking: why XML? Good question, and there are essentially three answers:
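The Rules file itself isn't reproduced here, so the sketch below invents a small one to show the general idea: parse the XML with Python's standard `xml.etree.ElementTree` and render it as a block of text for the LLM's system prompt, highest-priority rules first. The `<rule>` element, its `id` and `priority` attributes and the `rules_to_prompt` function are assumptions, not the actual Templonix schema.

```python
import xml.etree.ElementTree as ET

# Hypothetical Rules template; element and attribute names are illustrative only.
RULES_XML = """
<rules>
  <rule id="R1" priority="high">Only use tools listed in the Toolkit template.</rule>
  <rule id="R2" priority="low">Write in British English.</rule>
  <rule id="R3" priority="high">If a fact cannot be verified with a tool, say so instead of guessing.</rule>
</rules>
"""

PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


def rules_to_prompt(xml_text: str) -> str:
    """Render rules as a numbered list for the system prompt, high priority first."""
    root = ET.fromstring(xml_text.strip())
    rules = root.findall("rule")
    # sort() is stable, so rules of equal priority keep their file order.
    rules.sort(key=lambda r: PRIORITY_ORDER.get(r.get("priority", "medium"), 1))
    lines = [
        f"{i}. [{r.get('id')}] {(r.text or '').strip()}"
        for i, r in enumerate(rules, start=1)
    ]
    # Wrap the result in tags again, since models tend to respect XML-delimited sections.
    return "<rules>\n" + "\n".join(lines) + "\n</rules>"
```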
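To make the Bot Plan idea concrete, here is a sketch of how a generated plan might be read back and checked before any tool runs. The plan schema (`<plan>`, `<step order tool>`, `<constraint>`) and the snake_case tool names are hypothetical; the point is that an XML plan can be validated mechanically, for example by rejecting a step that names a tool the Agent doesn't have.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Set, Tuple

# Hypothetical tool names standing in for the five tools described below.
KNOWN_TOOLS = {"web_searcher", "document_generator", "llm_router", "diagram_generator", "file_handler"}

PLAN_XML = """
<plan task="Review design document">
  <constraints>
    <constraint>Output must be a Word document</constraint>
  </constraints>
  <step order="2" tool="web_searcher">Check current Azure guidance</step>
  <step order="1" tool="file_handler">Read the attached design document</step>
  <step order="3" tool="document_generator">Write the review report</step>
</plan>
"""


@dataclass(frozen=True)
class Step:
    order: int
    tool: str
    instruction: str


def parse_plan(xml_text: str, known_tools: Set[str]) -> Tuple[List[Step], List[str]]:
    """Return the plan's steps in execution order plus its constraints."""
    root = ET.fromstring(xml_text.strip())
    steps = [
        Step(int(s.get("order", "0")), s.get("tool", ""), (s.text or "").strip())
        for s in root.findall("step")
    ]
    unknown = {s.tool for s in steps} - known_tools
    if unknown:
        raise ValueError(f"Plan references unknown tools: {sorted(unknown)}")
    constraints = [(c.text or "").strip() for c in root.iter("constraint")]
    return sorted(steps, key=lambda s: s.order), constraints
```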

1. LLMs seem to really like instructions given to them in this format.

2. The Microsoft Office packages that produce outputs such as Word and PowerPoint files have been around a long time, and their modern file formats (.docx, .pptx) are essentially zipped XML under the hood.

3. Like these Office packages, I’m old, and XML is what I know best!

#3. I Needed a Tool Library I Could Add to Over Time

To get the project moving quickly, grow the tool library and make testing easier, I needed to architect the Agent to use Dynamic Adaptation at runtime. This means the AI can reason about and dynamically select from a suite of tools to accomplish tasks, making it more sophisticated than simple Chatbots or a rule-based system.
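One common way to get this kind of runtime tool selection is a registry: each tool registers itself with a name and a description, the descriptions go into the LLM's prompt, and the name the LLM picks is looked up and called. This is a sketch of that general pattern, not the Templonix code; the `tool` decorator, `dispatch` and the two toy tools are hypothetical.

```python
from typing import Callable, Dict

TOOLS: Dict[str, Callable[..., str]] = {}
DESCRIPTIONS: Dict[str, str] = {}


def tool(name: str, description: str):
    """Decorator that registers a function as a tool the LLM can choose at runtime."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        DESCRIPTIONS[name] = description
        return fn
    return register


@tool("word_count", "Count the words in a piece of text.")
def word_count(text: str) -> str:
    return str(len(text.split()))


@tool("upper_case", "Convert a piece of text to upper case.")
def upper_case(text: str) -> str:
    return text.upper()


def toolkit_for_prompt() -> str:
    """Describe every registered tool so the LLM can reason about which to use."""
    return "\n".join(f"- {name}: {desc}" for name, desc in sorted(DESCRIPTIONS.items()))


def dispatch(tool_name: str, **kwargs) -> str:
    """Run whichever tool the LLM selected; unknown names fail loudly."""
    if tool_name not in TOOLS:
        raise KeyError(f"No such tool: {tool_name}")
    return TOOLS[tool_name](**kwargs)
```

Adding a tool then means writing one decorated function; nothing in the dispatch code has to change.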

The first five tools I built were:

Web Searcher

A tool that performs web searches using the Serper API to get URLs, then uses Jina AI to extract the content from those URLs, clean the data, and return it for further processing.
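Here is a minimal, standard-library-only sketch of the same two-step flow. It assumes Serper's `https://google.serper.dev/search` endpoint (a POST with an `X-API-KEY` header, returning result URLs under `organic[].link`) and Jina's Reader endpoint, which returns LLM-friendly text when a URL is prefixed with `https://r.jina.ai/`. Check both against the current API documentation; the function names and the simple `clean` step are illustrative, not the Templonix implementation.

```python
import json
import re
import urllib.request
from typing import List

SERPER_URL = "https://google.serper.dev/search"
JINA_READER = "https://r.jina.ai/"


def serper_links(response: dict, limit: int = 3) -> List[str]:
    """Pull result URLs out of a Serper response (organic results carry a 'link')."""
    return [hit["link"] for hit in response.get("organic", []) if "link" in hit][:limit]


def clean(text: str) -> str:
    """Strip trailing spaces and collapse runs of blank lines in fetched content."""
    text = re.sub(r"[ \t]+\n", "\n", text)
    return re.sub(r"\n{3,}", "\n\n", text).strip()


def search(query: str, serper_key: str) -> dict:
    req = urllib.request.Request(
        SERPER_URL,
        data=json.dumps({"q": query}).encode("utf-8"),
        headers={"X-API-KEY": serper_key, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def read_page(url: str, jina_key: str = "") -> str:
    # The Jina key is optional here; without one, requests are rate-limited.
    headers = {"Authorization": f"Bearer {jina_key}"} if jina_key else {}
    req = urllib.request.Request(JINA_READER + url, headers=headers)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return clean(resp.read().decode("utf-8", errors="replace"))


def web_search(query: str, serper_key: str, jina_key: str = "") -> List[str]:
    """Search with Serper, then fetch each top result as clean text via Jina."""
    return [read_page(url, jina_key) for url in serper_links(search(query, serper_key))]
```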

Document Generator

A tool that dynamically generates Microsoft Word documents based on XML templates, handling everything from cover pages and tables of contents to sections, tables, and images with consistent formatting and styling.
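As an illustration of template-driven Word output, the sketch below flattens a small XML template into a list of operations and then renders them with the third-party `python-docx` package (`pip install python-docx`). The template schema and function names are hypothetical; `Document`, `add_heading`, `add_paragraph`, `add_table` and `save` are standard `python-docx` calls. Keeping parsing separate from rendering means the template logic can be tested without producing a file.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical template schema, for illustration only.
TEMPLATE_XML = """
<document title="Design Review">
  <section heading="Summary">
    <paragraph>The proposed design is broadly sound.</paragraph>
  </section>
  <section heading="Findings">
    <table>
      <row><cell>Area</cell><cell>Rating</cell></row>
      <row><cell>Security</cell><cell>Amber</cell></row>
    </table>
  </section>
</document>
"""

Op = Tuple[str, object]  # (operation kind, payload)


def template_to_ops(xml_text: str) -> List[Op]:
    """Flatten the XML template into simple, ordered drawing operations."""
    root = ET.fromstring(xml_text.strip())
    ops: List[Op] = [("title", root.get("title", "Untitled"))]
    for section in root.findall("section"):
        ops.append(("heading", section.get("heading", "")))
        for child in section:
            if child.tag == "paragraph":
                ops.append(("paragraph", (child.text or "").strip()))
            elif child.tag == "table":
                rows = [[(c.text or "").strip() for c in row.findall("cell")]
                        for row in child.findall("row")]
                ops.append(("table", rows))
    return ops


def render_docx(ops: List[Op], path: str) -> None:
    """Write the operations to a .docx file using python-docx."""
    from docx import Document  # imported here so parsing works without python-docx

    doc = Document()
    for kind, value in ops:
        if kind == "title":
            doc.add_heading(value, level=0)  # level 0 uses Word's Title style
        elif kind == "heading":
            doc.add_heading(value, level=1)
        elif kind == "paragraph":
            doc.add_paragraph(value)
        elif kind == "table" and value:
            cols = max(len(row) for row in value)
            table = doc.add_table(rows=len(value), cols=cols)
            for r, row in enumerate(value):
                for c, text in enumerate(row):
                    table.cell(r, c).text = text
    doc.save(path)
```

Usage would be `render_docx(template_to_ops(TEMPLATE_XML), "review.docx")`.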

LLM Router

A decision-making component that uses Claude/GPT to analyse user inputs, determine if direct answers are possible, identify required tools, and generate appropriate XML-structured responses that guide the system's workflow.
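Asking the LLM to reply in XML makes its routing decision easy to check in code. This sketch assumes a hypothetical reply format, a `<route>` block whose `<mode>` is either `direct` (with an `<answer>`) or `tools` (with a list of `<tool>` names). It tolerates chatty text around the block, which models sometimes add, and fails loudly on anything it doesn't recognise.

```python
import re
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List


@dataclass
class Route:
    direct: bool
    answer: str = ""
    tools: List[str] = field(default_factory=list)


ROUTE_BLOCK = re.compile(r"<route>.*?</route>", re.DOTALL)


def parse_route(llm_output: str) -> Route:
    """Extract the <route> block from an LLM reply and turn it into a decision."""
    match = ROUTE_BLOCK.search(llm_output)
    if match is None:
        raise ValueError("LLM reply did not contain a <route> block")
    root = ET.fromstring(match.group(0))
    mode = (root.findtext("mode") or "").strip().lower()
    if mode == "direct":
        return Route(direct=True, answer=(root.findtext("answer") or "").strip())
    if mode == "tools":
        return Route(direct=False, tools=[(t.text or "").strip() for t in root.iter("tool")])
    raise ValueError(f"Unknown routing mode: {mode!r}")
```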

Diagram Generator

A tool that uses the Eraser.io API to create various types of diagrams based on natural language instructions with specific drawing rules and formatting.

File Handler

A utility tool that manages file operations across the system, handling reading and writing of various file types (PDF, Word, text), cleaning content, and managing the context session files for the agent's memory system.
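Word files are easier to read than they look, because a `.docx` is a zip archive whose main text lives in `word/document.xml`. The standard-library-only sketch below uses that to extract paragraph text, tidies whitespace, and keeps a simple JSON session file as conversation memory. PDF support is left out because it needs a third-party parser; the function names and the session-file layout are hypothetical.

```python
import json
import re
import zipfile
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import List

# WordprocessingML namespace used by the elements inside word/document.xml.
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def read_docx_text(path) -> str:
    """Extract paragraph text from a .docx, which is a zip of XML parts."""
    with zipfile.ZipFile(path) as zf:
        root = ET.fromstring(zf.read("word/document.xml"))
    paragraphs = []
    for p in root.iter(f"{W_NS}p"):
        # A paragraph's text is split across runs, each holding <w:t> elements.
        text = "".join(t.text or "" for t in p.iter(f"{W_NS}t"))
        if text.strip():
            paragraphs.append(text)
    return "\n".join(paragraphs)


def clean_text(text: str) -> str:
    """Collapse whitespace within lines and drop empty lines."""
    lines = (re.sub(r"\s+", " ", line).strip() for line in text.splitlines())
    return "\n".join(line for line in lines if line)


def read_file(path) -> str:
    path = Path(path)
    suffix = path.suffix.lower()
    if suffix == ".docx":
        return clean_text(read_docx_text(path))
    if suffix in {".txt", ".md"}:
        return clean_text(path.read_text(encoding="utf-8"))
    # PDF would need a third-party parser; omitted to keep this stdlib-only.
    raise ValueError(f"Unsupported file type: {suffix}")


def append_to_session(session_file, role: str, content: str) -> List[dict]:
    """Append one turn to a JSON session file that acts as the agent's memory."""
    session_file = Path(session_file)
    history = json.loads(session_file.read_text(encoding="utf-8")) if session_file.exists() else []
    history.append({"role": role, "content": content})
    session_file.write_text(json.dumps(history, indent=2), encoding="utf-8")
    return history
```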

#4. I Wanted to Use the Best Services Available

The final consideration for the Agent was the actual service endpoints being used. While it’s not true that everything chargeable is the best, I didn’t want cost to constrain what I was doing. Two of the services deserve a special mention.

The Eraser Diagramming Tool

You can’t communicate the design of a system without diagrams. While there are several tools available, such as Mermaid and Excalidraw, that can turn text into several types of schematics, the big gaping hole in my quest to add a diagramming tool to my Agent was a lack of options that allowed me to create files I could generate and then insert into Word documents.

Eraser was the answer.

Not only could I give the API a very detailed prompt, but the output was highly accurate and contextually relevant to my needs (Eraser offers four different diagram types: sequence-diagram, cloud-architecture-diagram, flowchart-diagram and entity-relationship-diagram) and, of course, it created a file for me that I could insert into a document.

Developing the tool was also easy: it was finished, with error handling and config, in fewer than 100 lines of code.
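The Eraser request format isn't shown in this article, so treat the endpoint and payload field names in this sketch as placeholders to check against Eraser's own API documentation. What it shows is the shape of such a tool: validate the requested diagram type against the four Eraser types listed above, fold in some drawing rules, and build an authenticated JSON POST request. `ERASER_ENDPOINT`, `DRAWING_RULES` and `build_diagram_request` are hypothetical.

```python
import json
import urllib.request

# The four diagram types listed above.
DIAGRAM_TYPES = {
    "sequence-diagram",
    "cloud-architecture-diagram",
    "flowchart-diagram",
    "entity-relationship-diagram",
}

# ASSUMPTION: placeholder endpoint and field names; confirm against Eraser's API docs.
ERASER_ENDPOINT = "https://example.invalid/eraser/render"

# Hypothetical house drawing rules appended to every prompt.
DRAWING_RULES = "Label every component. Show data flows left to right."


def build_diagram_request(prompt: str, diagram_type: str, api_key: str) -> urllib.request.Request:
    """Validate the diagram type and build (but do not send) the API request."""
    if diagram_type not in DIAGRAM_TYPES:
        raise ValueError(
            f"Unsupported diagram type {diagram_type!r}; expected one of {sorted(DIAGRAM_TYPES)}"
        )
    payload = {"text": f"{prompt}\n\nDrawing rules: {DRAWING_RULES}", "diagramType": diagram_type}
    return urllib.request.Request(
        ERASER_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it would be a `urllib.request.urlopen(req)` call, after which the returned file can be saved and handed to the document generator.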

Jina.ai

This is hands down my favourite tool that’s accessible to the Agent and deserves an article all of its own.

Jina is basically a web search engine that can apply the principles of Retrieval-Augmented Generation (RAG) to internet content. The three key features that add value to my Agent are:

1. The creation of LLM-friendly inputs from searches

2. The ability to maximise relevancy of a search query using a neural retriever

3. The capability to chunk long passages of text for LLM token efficiency
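Jina handles chunking on its side, and its method isn't described here. For readers new to the idea, this is a generic sketch of overlapping chunking, using whitespace-separated words as a rough stand-in for tokens; `chunk_words` and its defaults are illustrative choices, not Jina's implementation. The overlap means a sentence that straddles a boundary still appears whole in at least one chunk.

```python
from typing import List


def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> List[str]:
    """Split text into overlapping windows of words (words approximate tokens)."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks
```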

The commercial LLMs we all use are getting better with every release. However, when you’re using this technology in a commercial environment, or accuracy is a factor in your work, it’s imperative that the AI has access to the internet. Perplexity isn’t bad, but in my experience it can’t match Templonix using Jina. My main gripe with Perplexity is caching: it doesn’t always return the most up-to-date information, whereas Jina does, and from the best sources.

Adding this powerful web search feature essentially made my Agent an expert on pretty much anything I asked it, provided the information was available in the public domain. That’s far superior to relying on an LLM with a fixed knowledge cut-off date.

What Using a Templonix Agent Looks Like

Below is a screenshot of a very simple, yet typical, interaction between me and my Templonix Agent, Chris Junior. As the timestamps show, Chris Junior is pretty fast: the whole request was completed in about a minute.

There are a couple of things to point out here that separate Agentic behaviour from a Chatbot.

Before the Agent starts the task, it has the opportunity to digest what’s been asked and confirm that its interpretation of the request is correct. In the Templonix framework, this is a default feature: if the Agent thinks there’s a multi-step process to follow to provide an answer, this playback process is triggered.

If the query were something like “Who is Ryan Reynolds?”, the Agent would default to a “direct answer” and bypass this process, exhibiting more typical Chatbot behaviour.

The second difference with the Agentic experience is, of course, the output. As the screenshot shows, the Agent has delivered what I asked for: a Word document.
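The control flow behind this playback can be sketched in a few lines. The function names, the confirmation callback (which in Slack would be the user's reply) and the message wording are hypothetical; the sketch only mirrors the rule described above: direct answers skip playback, and multi-step plans are confirmed first.

```python
from typing import Callable, List, Optional


def playback(request: str, steps: List[str]) -> str:
    """Compose the confirmation message the Agent sends before starting work."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"Here's how I understand '{request}':\n{numbered}\nShall I go ahead?"


def respond(
    request: str,
    steps: List[str],
    confirm: Callable[[str], bool],
    direct_answer: Optional[str] = None,
) -> str:
    if direct_answer is not None:
        # The router decided a direct answer is enough: behave like a chatbot.
        return direct_answer
    if len(steps) > 1 and not confirm(playback(request, steps)):
        # Multi-step work is only started once the user agrees with the playback.
        return "No problem, tell me what I got wrong and I'll re-plan."
    return f"Working on {len(steps)} step(s)..."
```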

If you’d like to see the content of the documents used in this example, they are here:

This is the document provided as context for review; it’s a fictional design document used by Ingram Micro for Azure training.

Below is the report produced by Chris Junior.

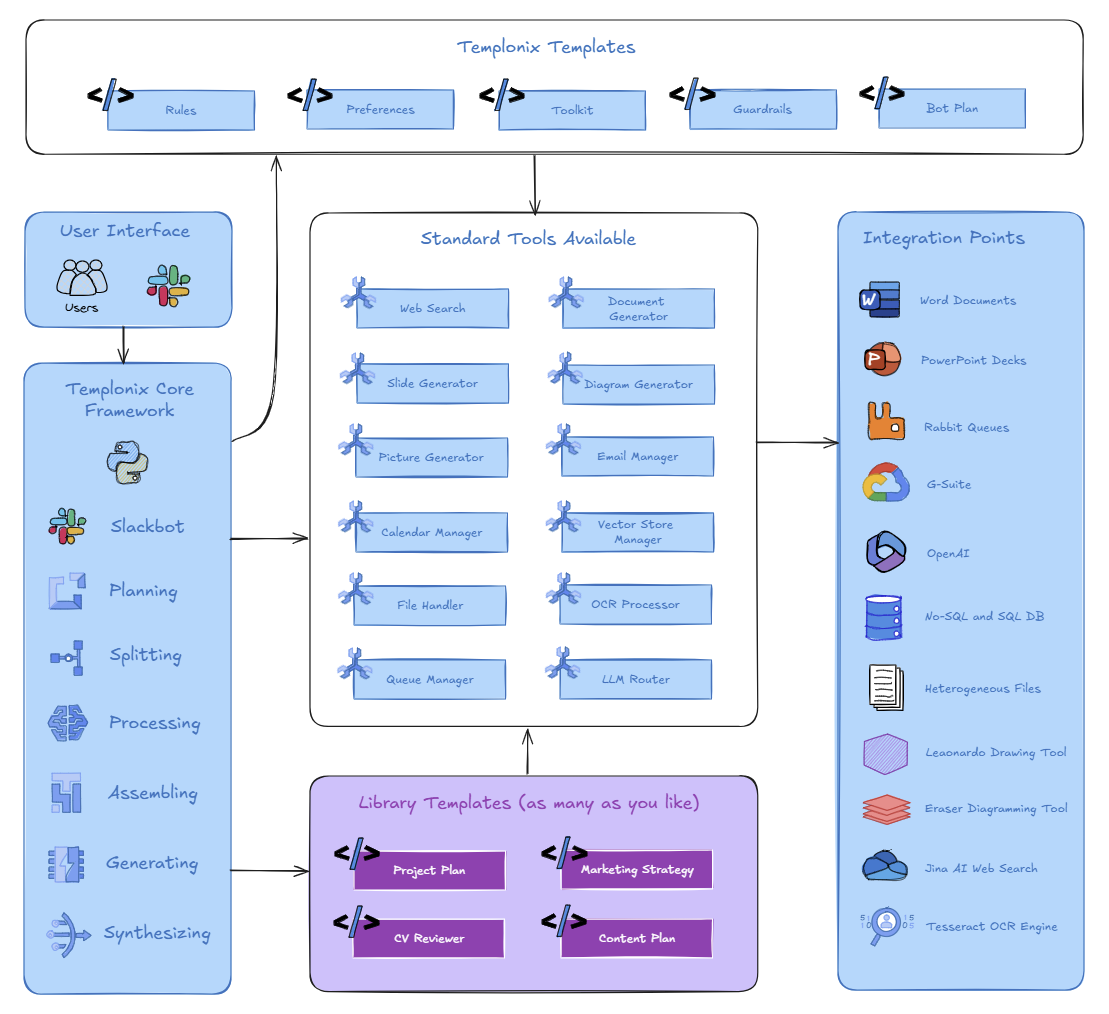

What Templonix Can Do Today

Since those early days of trying to solve my own technical validation problem, Templonix has grown into something rather special. The framework continues to operate through Slack, only now, it has Slash Command shortcuts integrated into it. Something else that also needs a whole article of its own!

As the diagram below shows, the framework keeps expanding with new tools, but I've kept the focus squarely on practical business outcomes. Whether you need technical documentation written up, design proposals analysed, or presentation materials created, it continues to behave like a real colleague rather than just another chat interface.

I’ve also expanded the template concept to include Library Templates. These are a bit like IKEA instruction manuals. Instead of telling you how to build a wardrobe, they tell the AI how to build a particular type of document, complete with formatting, diagrams and all the bits you'd expect from a human-made report.

The end result? Every time you ask for a certain type of document (say, a project plan) you get a consistently structured, professional document, but with unique content tailored to your specific needs and your use case. No more random AI responses or weirdly formatted documents - just proper business output every time.

The clever bit is that these instructions are written in a quasi-XML structure that I call Templonix as Code (TaC), which behaves a bit like Infrastructure as Code, but for AI outputs instead of cloud compute resources. Since the Templonix core “speaks” XML, I can now build new features without ever touching the core of the framework, which is a great time saver and brilliant for prototyping and iterating on new ideas.

Here's what I’ve developed to date; these templates are all in the library, and the Agent can access them at any time.
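To illustrate the consistency idea, here is a sketch of a library template for a project plan, plus a check that flags required sections a generated draft has left out. Real TaC is described as quasi-XML; this sketch uses plain XML so the standard library can parse it, and the element names, attributes and functions are all hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical library template for one document type.
PROJECT_PLAN = """
<library-template type="project-plan">
  <section name="Objectives" required="true"/>
  <section name="Milestones" required="true" diagram="flowchart-diagram"/>
  <section name="Risks" required="true"/>
  <section name="Appendix" required="false"/>
</library-template>
"""


def required_sections(template_xml: str) -> List[str]:
    """List the section names the template marks as required, in template order."""
    root = ET.fromstring(template_xml.strip())
    return [s.get("name", "") for s in root.findall("section") if s.get("required", "false") == "true"]


def missing_sections(template_xml: str, draft: Dict[str, str]) -> List[str]:
    """Return required sections the generated draft left out or left empty."""
    return [name for name in required_sections(template_xml) if not draft.get(name, "").strip()]
```

A check like this gives the Agent a concrete reason to regenerate a section before the document is assembled, rather than relying on the LLM to remember the structure.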

Key Takeaways

I think there are five that surface from this article.

✅ Beyond Chatbots

The distinction between AI chatbots and agents isn't just technical - it's about creating systems that can plan, execute, and validate complex workflows with degrees of autonomy.

✅ Tool Integration Matters

The power of an AI agent comes not from a single large language model, but from its ability to orchestrate multiple specialised tools effectively - from web searching to document generation.

✅ Enterprise Integration

Using existing enterprise platforms (like Slack) for AI agent interfaces can significantly reduce development time while improving adoption and usability.

✅ Structured Approaches Win

The use of structured formats (like XML) for both instructions and outputs provides consistency and reliability that free-form prompts can't match.

✅ Practical Impact

Real-world applications of AI agents don't need to solve grand challenges. Sometimes the most valuable solutions address everyday professional bottlenecks, and from a business perspective, this is where you’ll find the real ROI.

These insights have shaped not just the development of Templonix, but my understanding of how AI can be practically applied in enterprise environments. The future of AI assistance isn't just about better models - it's about better integration with our existing workflows and tools. The more we understand this, the more value we can extract from this new and very powerful technology.

If you’re using Agents or planning to soon, I'd love to hear what you’re doing and any challenges you’re running into. Drop a comment below or reach out directly - I read every response.

Until next time.

Chris