Beyond the AI Hype: The Critical Infrastructure You're Overlooking

While Everyone Chases GPT, This Forgotten Technology Is Quietly Solving AI's Biggest Problem

The Quick Take

One of the biggest barriers to business AI adoption is the inability of off-the-shelf technology to integrate with other systems. Without a bridge that lets your shiny new AI reach the digital gold within your four walls, you’re just building more silos, the very things most IT departments have spent decades trying to tear down!

To unlock the power of enterprise AI, companies need to realise that Agentic systems, not Co-Pilots and Chatbots, are the way forward.

Here’s a prime example: ChatGPT can’t use a message queue, but an Agent can.

In this piece, you’ll learn how messaging sub-systems overcome many of the governance and security hurdles, enabling seamless integration of AI Agents with protected company data.

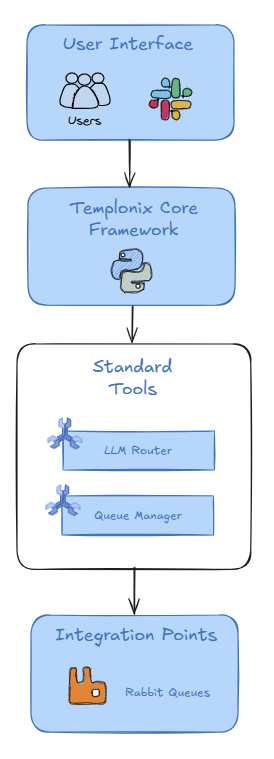

I’ll also show you how I’ve built this capability into my own Agentic framework, Templonix.

The Key to Unlocking Enterprise AI's Full Potential

Picture this: You've developed a brilliant AI Agent capable of revolutionising your company's operations. There's just one tiny problem – it's locked out of the very systems it needs to access.

Like a master locksmith standing before a vault without the combination, your AI is rendered useless by the very security measures designed to protect your enterprise data.

This scenario isn't just a hypothetical – it's a daily reality for many organisations attempting to implement AI solutions. The promise of artificial intelligence often crashes headlong into the brick wall of enterprise security and governance.

But what if there were a way to give your AI the keys to the kingdom without compromising your defences?

The Enterprise AI Conundrum

Developing AI systems for enterprise use is no small feat. The challenges extend far beyond creating sophisticated algorithms or training robust models. The real stumbling block often comes when it's time to integrate these systems with existing enterprise infrastructure.

Traditional approaches to AI integration often involve direct API connections or database access. However, these methods can be fraught with security risks and governance nightmares. Granting an AI system unfettered access to sensitive company data is akin to giving a stranger the master key to your house – it might be convenient, but it's hardly prudent.

Moreover, the sheer complexity of enterprise systems means that even if security concerns could be addressed, the technical challenges of integration remain daunting. Different departments often use disparate systems, each with its own data formats, access protocols, and quirks. Trying to create a unified AI solution that can seamlessly interact with all these systems can quickly become a Herculean task.

Enter the Rabbit: A Paradigm Shift in AI Integration

The solution to this enterprise AI conundrum lies in a technology that's been hiding in plain sight: message queues, and in my experience, RabbitMQ specifically. This robust message broker acts as a secure intermediary between an AI Agent and your protected enterprise data, revolutionising how we approach AI integration.

Below is a simple diagram of how I built this capability into my own framework.

As you can see, it’s a very simple pattern. The Agent only needs access to the LLM Router (its route to its “brain”) and the Queue Manager tool, a purpose-built tool that can write to and read from a RabbitMQ queue.
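To make the pattern concrete, here is a minimal Python sketch of what a Queue Manager tool might look like. It is not the Templonix implementation: `InMemoryBroker`, `QueueManagerTool`, and its `write`/`read` methods are hypothetical names, and a standard-library `queue.Queue` stands in for a real RabbitMQ connection so the example runs without a broker. In production you would swap in a RabbitMQ client library such as pika.

```python
import json
import queue
import uuid
from typing import Any, Dict, Optional


class InMemoryBroker:
    """Stand-in for a RabbitMQ broker: named FIFO queues held in process memory."""

    def __init__(self) -> None:
        self._queues: Dict[str, queue.Queue] = {}

    def get(self, name: str) -> queue.Queue:
        # Create the queue on first use, loosely like declaring it on a real broker.
        if name not in self._queues:
            self._queues[name] = queue.Queue()
        return self._queues[name]


class QueueManagerTool:
    """Hypothetical Agent tool: writes JSON envelopes to a queue and reads them back."""

    def __init__(self, broker: InMemoryBroker) -> None:
        self._broker = broker

    def write(self, queue_name: str, payload: Dict[str, Any],
              correlation_id: Optional[str] = None) -> str:
        # Reuse the caller's correlation ID if given, otherwise mint a new one.
        cid = correlation_id or str(uuid.uuid4())
        envelope = json.dumps({"correlation_id": cid, "payload": payload})
        self._broker.get(queue_name).put(envelope)
        return cid

    def read(self, queue_name: str, timeout: float = 1.0) -> Optional[Dict[str, Any]]:
        # Return the next decoded envelope, or None if nothing arrives within the timeout.
        try:
            return json.loads(self._broker.get(queue_name).get(timeout=timeout))
        except queue.Empty:
            return None
```

The design choice worth noting is that the Agent's entire integration surface is two methods on one tool; it holds no credentials for any downstream system.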

ZDNet reported a while ago that 90% of IT leaders say it's tough to integrate AI with other systems. When I built Templonix, this was one of a handful of design considerations at the forefront of my mind.

Each task in a Templonix Agent generates a Correlation ID, which lets the Agent establish asynchronous communication as standard whenever it needs to. This small piece of functionality immediately gives the Agent a route to enterprise system integration.
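Why does a Correlation ID matter? With asynchronous messaging, replies can arrive late, out of order, or not at all, so the Agent needs a way to pair each reply with the task that asked for it. The sketch below shows one way to do that; `PendingRequests` and its methods are hypothetical and are not taken from Templonix.

```python
import uuid
from typing import Any, Dict, Optional, Set


class PendingRequests:
    """Hypothetical tracker pairing each task's correlation ID with its eventual reply."""

    def __init__(self) -> None:
        self._waiting: Set[str] = set()
        self._results: Dict[str, Any] = {}

    def new_task(self) -> str:
        # Mint one correlation ID per task before its request is published.
        cid = str(uuid.uuid4())
        self._waiting.add(cid)
        return cid

    def on_reply(self, message: Dict[str, Any]) -> bool:
        # Accept a reply only if its ID is still outstanding; drop unknown or duplicate IDs.
        cid = message.get("correlation_id")
        if cid not in self._waiting:
            return False
        self._waiting.discard(cid)
        self._results[cid] = message.get("payload")
        return True

    def result(self, cid: str) -> Optional[Any]:
        # None means no reply has been matched to this ID (yet).
        return self._results.get(cid)

    def outstanding(self) -> Set[str]:
        # IDs whose replies have not arrived; these tasks are still in flight.
        return set(self._waiting)
```

In use, the Agent would call `new_task()` before publishing a request and `on_reply()` for every message it reads from its response queue. Because replies are matched by ID rather than by arrival order, several requests can be in flight at once.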

If you’re not familiar with message queues, think of them as highly efficient, secure postal services for your data. Instead of your AI Agent trying to break into various data silos directly, it simply drops its requests into a secure mailbox.

On the other side, authorised systems retrieve these requests, process them according to established security protocols, and return the results through the same secure channel.
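The “established security protocols” on the consumer side can start as simply as an allowlist. The sketch below is an illustration under stated assumptions, not a prescribed design: `handle_request`, `ALLOWED_OPERATIONS`, and the in-memory `_STOCK` table are hypothetical. The point it shows is where the trust boundary sits: the consuming service owns the data access and decides what it will do, while the Agent can only ask.

```python
from typing import Any, Callable, Dict

# Hypothetical stock table owned by the consuming service; the Agent never touches it.
_STOCK: Dict[str, int] = {"A-100": 42, "B-200": 0}


def _stock_level(args: Dict[str, Any]) -> Dict[str, Any]:
    sku = args["sku"]  # KeyError if the Agent omitted it; handled in handle_request
    return {"sku": sku, "on_hand": _STOCK.get(sku, 0)}


# Allowlist: the only operations this service will run on the Agent's behalf.
ALLOWED_OPERATIONS: Dict[str, Callable[[Dict[str, Any]], Dict[str, Any]]] = {
    "stock_level": _stock_level,
}


def handle_request(message: Dict[str, Any]) -> Dict[str, Any]:
    """Validate one queued request and build the reply envelope for the response queue."""
    cid = message.get("correlation_id")
    payload = message.get("payload") or {}
    op = payload.get("op")
    handler = ALLOWED_OPERATIONS.get(op)
    if handler is None:
        # Anything outside the allowlist is refused rather than executed.
        return {"correlation_id": cid, "payload": {"error": f"operation {op!r} not permitted"}}
    try:
        result = handler(payload.get("args") or {})
    except KeyError as exc:
        return {"correlation_id": cid, "payload": {"error": f"missing argument {exc}"}}
    return {"correlation_id": cid, "payload": result}
```

Note that the reply keeps the request's correlation ID, so the Agent can match it up even when the answer is a refusal.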

Implementation: Bringing Theory into Practice

Let's take a quick look at how this works in practice.

Imagine you're implementing an AI Agent designed to optimise inventory management across multiple warehouses. Without a queue, you'd need to create secure connections to each warehouse's inventory system, customer order database, and supplier management platform – a nightmare of integration and security challenges.

With the queue, the process becomes streamlined:

1. The AI Agent publishes inventory queries or update requests to a specific message queue.
2. Authorised services for each warehouse system subscribe to this queue.
3. These services process the requests, applying necessary security checks and data transformations.
4. Results are published back to a response queue that the AI Agent monitors.
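The four steps of the inventory example can be sketched end to end with threads and in-memory queues. This is an illustration under stated assumptions, not production code: `WAREHOUSE_STOCK`, `warehouse_service`, `start_warehouse_services`, and `agent_stock_check` are hypothetical, and each warehouse gets its own in-memory request queue. With RabbitMQ you would more likely publish once to a fanout exchange bound to one queue per warehouse service, because consumers sharing a single queue compete for messages rather than each receiving a copy.

```python
import queue
import threading
import uuid
from typing import Any, Dict, List, Tuple

# Hypothetical stock held in each warehouse's own inventory system.
WAREHOUSE_STOCK: Dict[str, Dict[str, int]] = {
    "north": {"A-100": 12},
    "south": {"A-100": 30},
}


def warehouse_service(name: str, requests: queue.Queue, responses: queue.Queue) -> None:
    """Authorised service for one warehouse: consume a request, check it, reply."""
    while True:
        msg = requests.get()
        if msg is None:  # shutdown sentinel used by this sketch
            return
        sku = msg["payload"].get("sku")
        if not isinstance(sku, str):  # placeholder for real security and validation checks
            body: Dict[str, Any] = {"error": "invalid request"}
        else:
            body = {"on_hand": WAREHOUSE_STOCK[name].get(sku, 0)}
        responses.put({"correlation_id": msg["correlation_id"], "payload": body})


def start_warehouse_services(
    responses: queue.Queue,
) -> Tuple[Dict[str, queue.Queue], List[threading.Thread]]:
    """Start one consumer thread per warehouse, each with its own request queue."""
    request_queues: Dict[str, queue.Queue] = {}
    threads: List[threading.Thread] = []
    for name in WAREHOUSE_STOCK:
        requests: queue.Queue = queue.Queue()
        t = threading.Thread(target=warehouse_service, args=(name, requests, responses), daemon=True)
        t.start()
        request_queues[name] = requests
        threads.append(t)
    return request_queues, threads


def agent_stock_check(sku: str, request_queues: Dict[str, queue.Queue],
                      responses: queue.Queue, timeout: float = 2.0) -> Dict[str, int]:
    """Agent side: publish one request per warehouse, then match replies by correlation ID."""
    pending: Dict[str, str] = {}
    for name, requests in request_queues.items():
        cid = str(uuid.uuid4())
        pending[cid] = name
        requests.put({"correlation_id": cid, "payload": {"sku": sku}})
    stock: Dict[str, int] = {}
    while pending:
        msg = responses.get(timeout=timeout)  # raises queue.Empty if a service stays silent
        name = pending.pop(msg["correlation_id"], None)
        if name is not None and "on_hand" in msg["payload"]:
            stock[name] = msg["payload"]["on_hand"]
    return stock
```

To try it, create `responses = queue.Queue()`, call `start_warehouse_services(responses)`, then `agent_stock_check("A-100", request_queues, responses)`; with the sample data it returns a per-warehouse map equal to `{"north": 12, "south": 30}`. The Agent never opens a connection to any warehouse system; it only ever touches the queues.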

A message queue isn't just a messaging system; it's the master key that unlocks the full potential of enterprise AI integration.

Key Takeaways

The journey of integrating AI into enterprise environments is fraught with challenges, but with tools like RabbitMQ, we're not just opening doors – we're building bridges.

This approach doesn't just solve today's integration problems; it lays the groundwork for a future where AI and enterprise systems work in harmony, driving innovation and efficiency across the board.

Let's distil the crucial insights from our exploration of message queues in enterprise AI development:

✅ Security Without Sacrifice

A message queue allows AI Agents to interact with sensitive enterprise data without direct access, maintaining robust security while enabling powerful functionality.

✅ Integration Simplified

By using message queues, the complexity of integrating AI with diverse enterprise systems is dramatically reduced, accelerating development and deployment.

✅ Scalability Built-In

The queue architecture inherently supports growth, allowing your AI solutions to scale seamlessly as your business needs evolve.

If you're grappling with AI integration in your enterprise or planning to embark on this journey, I'd love to hear about your experiences and challenges.

Drop a comment below or reach out directly - I read every response.

Until next time,

Chris
