ESCARGOT: The AI Framework That Thinks on Its Feet

A no-nonsense look at why most AI frameworks crumble - and what to do about it.

Why do most AI frameworks crumble the moment they’re faced with the messy, unpredictable reality of the real world? It’s simple - they’ve got all the flexibility of a plank of wood. Sure, they’re accurate when everything’s going their way, but throw in a bit of chaos, and they fold like a cheap deckchair.

Today, I’m going to walk you through ESCARGOT - a framework that doesn’t just stand out from the crowd; it steamrolls over it.

ESCARGOT combines the reasoning chops of Large Language Models (LLMs) with the adaptability of dynamic knowledge graphs. But don’t worry - I’ll strip away the buzzwords and jargon and show you exactly what makes this framework tick and why it should matter to anyone serious about building AI systems that actually work in the real world.

The Problem With AI Frameworks Today

Let’s start with the basics. LLMs like GPT-4 are brilliant - until they’re not. They’re great at spitting out plausible-sounding answers, but their tendency to hallucinate (i.e., make stuff up) is a ticking time bomb for anyone relying on them in critical fields like healthcare or legal services. Because, let’s face it, no one wants their AI coming up with "creative" diagnoses or legal advice.

Some clever clogs thought they’d solved this with Retrieval-Augmented Generation (RAG) - you know, feeding the AI external data so it doesn’t go off on a tangent. But RAG has its own issues: token limits (that’s a killer unless you like writing lots of chunking code, which I don’t), imprecise vector searches, and a black-box reasoning process that’s about as transparent as mud.
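For anyone who hasn’t had the pleasure, here’s roughly what that chunking code looks like. It’s a minimal sketch, not any particular RAG library’s API: it counts whitespace-separated words as a stand-in for real model tokens (an assumption, since a real tokenizer gives different counts), and the `chunk_text` name and its `max_tokens`/`overlap` defaults are made up for illustration.

```python
from typing import List


def chunk_text(text: str, max_tokens: int = 200, overlap: int = 20) -> List[str]:
    """Split text into overlapping windows of at most max_tokens "tokens".

    Assumption: whitespace-separated words stand in for real model tokens.
    """
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be >= 0 and smaller than max_tokens")
    tokens = text.split()
    step = max_tokens - overlap  # how far each new window advances
    chunks: List[str] = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + max_tokens]))
        if start + max_tokens >= len(tokens):
            break  # this window already reached the end of the text
    return chunks


# Usage: ten "tokens", windows of four, one token of overlap
words = " ".join(f"w{i}" for i in range(10))
print(chunk_text(words, max_tokens=4, overlap=1))
# → ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks, at the cost of some duplicated tokens. Multiply that by every document type you ingest and you can see why it gets old fast.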

Enter ESCARGOT, a framework that shows the rest of the AI world how it’s done.

A Fresh Take on Reasoning: Chain, Tree, and Graph

Now, let’s talk reasoning frameworks. Most of them are like those well-meaning government projects that look good on paper but fail spectacularly in practice.

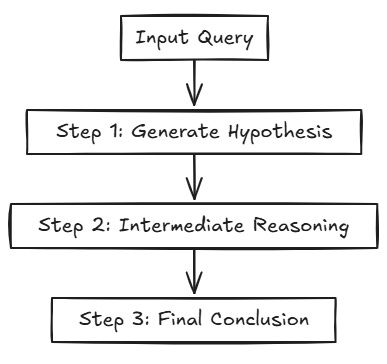

Chain-of-Thought (CoT): The Linear Thinker

CoT is your basic, no-frills reasoning framework. Step 1 leads to Step 2, which leads to Step 3, and before you know it, you’ve got an answer.

It’s fine for simple problems - like solving maths homework. But life isn’t a neat little sequence, is it? One wrong move early on, and the whole thing comes tumbling down like a game of Jenga.

No flexibility, no second chances. It does the job - until it doesn’t.
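To make the Jenga point concrete, here’s a toy chain in Python. It’s a caricature, not how an LLM reasons internally: each “thought” is just a function that takes the previous step’s output, and the `run_chain` helper and the arithmetic steps are hypothetical. Nothing in the chain ever looks back, so a slip in step 1 flows straight through to the answer.

```python
from typing import Callable, List, Tuple

Step = Callable[[float], float]


def run_chain(x: float, steps: List[Step]) -> Tuple[float, List[float]]:
    """Feed each step the previous step's output and record every intermediate."""
    trace = [x]
    for step in steps:
        x = step(x)  # no check on whether the previous step was right
        trace.append(x)
    return x, trace


# Hypothetical three-step calculation: ((x + 3) * 2) - 4
correct = [lambda v: v + 3, lambda v: v * 2, lambda v: v - 4]
# Same chain, but step 1 slips (+30 instead of +3)
faulty = [lambda v: v + 30, lambda v: v * 2, lambda v: v - 4]

print(run_chain(1, correct))  # → (4, [1, 4, 8, 4])
print(run_chain(1, faulty))   # → (58, [1, 31, 62, 58])
```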

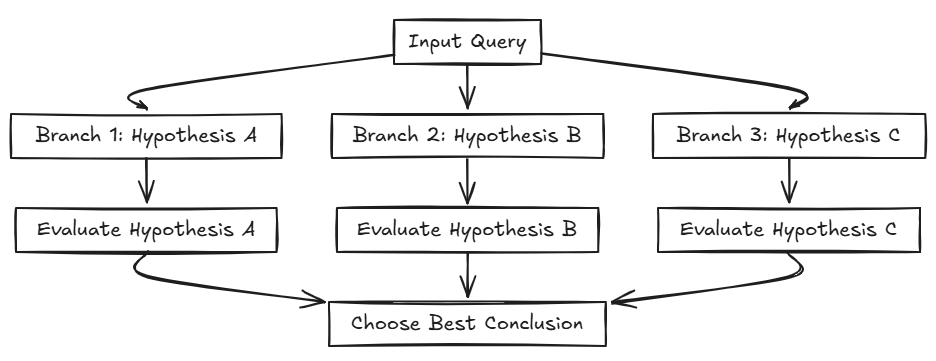

Tree-of-Thoughts (ToT): The Overthinker

Then there’s ToT, which tries to be clever by branching out into multiple paths, exploring different hypotheses. Sounds good in theory. But in practice? It’s about as lightweight as a fully loaded removal van.

Sure, it can backtrack and evaluate alternatives, but it’s slow, it’s clunky, and it’s stuck with whatever map it made at the start. If that map’s wrong? Well, tough luck. ToT isn’t changing its plans anytime soon.
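Here’s a rough sketch of the ToT idea as a breadth-first search that keeps only the best few branches at each level, which is one of the search strategies the Tree of Thoughts paper describes. It’s a toy under stated assumptions: the “thoughts” are arithmetic moves towards a target number, and the distance-to-target score stands in for an LLM asked to rate each branch. The set of moves in `ops` is fixed before the search starts, which is the “map made at the start” problem in miniature. All names here are hypothetical.

```python
import heapq
from typing import Callable, Dict, List, Optional, Tuple

Node = Tuple[int, List[str]]  # (current value, moves taken so far)


def tree_of_thoughts(start: int, target: int, beam_width: int = 3,
                     max_depth: int = 6) -> Optional[List[str]]:
    """Breadth-first search over move sequences, pruning to the best `beam_width` per level."""
    # The branching options are fixed up front and never revised during the search.
    ops: Dict[str, Callable[[int], int]] = {"+1": lambda v: v + 1, "*2": lambda v: v * 2}
    frontier: List[Node] = [(start, [])]
    for _ in range(max_depth):
        # Expand: every surviving branch proposes one new "thought" per move.
        candidates: List[Node] = [
            (op(value), path + [name])
            for value, path in frontier
            for name, op in ops.items()
        ]
        for value, path in candidates:
            if value == target:
                return path
        # Evaluate and prune: keep the branches whose value is closest to the target.
        frontier = heapq.nsmallest(beam_width, candidates,
                                   key=lambda node: abs(target - node[0]))
    return None  # search budget exhausted


print(tree_of_thoughts(1, 10))  # → ['+1', '*2', '*2', '+1', '+1']
```

With this greedy scoring, the search returns a five-step route even though a four-step route via 5 exists (1 → 2 → 4 → 5 → 10): at the third level, 5 looks further from 10 than 8 or 6 do, so that branch is pruned and never revisited. That’s the trade-off: pruning keeps the search affordable, but whatever the evaluator undervalues is gone for good.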

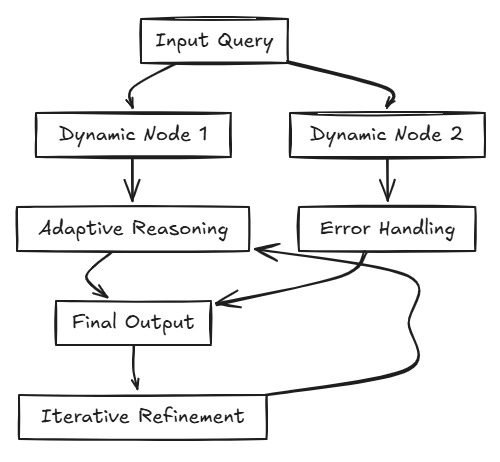

Graph-of-Thoughts (GoT): The Adaptable Pro

And now, we get to Graph-of-Thoughts (GoT). This isn’t just a better version of CoT and ToT - it’s in a completely different league.

GoT is dynamic. It doesn’t just stick to a single path or get bogged down exploring every branch. It adapts. If a node fails or spits out nonsense, it fixes itself on the fly, like a mechanic working while the engine’s running.

Feedback loops? Built-in.

Self-debugging? Standard.

It’s the reasoning framework equivalent of a satnav that doesn’t just tell you where you went wrong but recalculates the entire route in real time.
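To show what “graph” buys you over a tree, here’s a minimal sketch using Python’s standard-library `graphlib`. It is not ESCARGOT’s code: the node names, the `run_graph` helper and the `repair` callback (a stand-in for an LLM that rewrites a failing step) are all hypothetical. Two things matter here: a node can merge the outputs of several parents, which a tree can’t do, and a node that throws gets patched and re-run instead of sinking the whole job.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List

Thought = Callable[..., Any]


def run_graph(nodes: Dict[str, Thought], deps: Dict[str, List[str]],
              repair: Callable[[str, Exception], Thought],
              max_retries: int = 1) -> Dict[str, Any]:
    """Run each thought after its parents; patch and retry a thought that fails.

    Only nodes that appear in `deps` (as a key or as a parent) are executed.
    """
    results: Dict[str, Any] = {}
    for name in TopologicalSorter(deps).static_order():
        inputs = [results[parent] for parent in deps.get(name, [])]
        fn = nodes[name]
        for attempt in range(max_retries + 1):
            try:
                results[name] = fn(*inputs)
                break
            except Exception as exc:
                if attempt == max_retries:
                    raise  # out of retries: surface the error
                fn = repair(name, exc)  # swap in a revised thought and try again
    return results


nodes = {
    "source_a": lambda: [3, 1, 2],
    "source_b": lambda: [5, 4],
    "merge": lambda a, b: sorted(a + b),   # aggregates two parents
    "summary": lambda merged: merged[10],  # buggy: index out of range
}
deps = {"merge": ["source_a", "source_b"], "summary": ["merge"]}


def repair(name: str, exc: Exception) -> Thought:
    # Hypothetical fix-up; a real system would prompt an LLM with the error.
    print(f"repairing {name!r} after {type(exc).__name__}")
    return lambda merged: max(merged)


results = run_graph(nodes, deps, repair)  # prints: repairing 'summary' after IndexError
print(results["summary"])  # → 5
```

Every node needs an entry in `deps` (an empty list is fine for a node with no parents), otherwise the topological sort never reaches it.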

Unlike the others, GoT doesn’t have to rely on educated guesses. Hook it up to external knowledge graphs and databases, as ESCARGOT does, and it can ground its reasoning in facts, not fantasy. It’s like having a partner who’s not only quick on their feet but also knows their stuff. For complex, real-world problems, it’s an absolute game-changer.
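Here’s a minimal sketch of what grounding means in practice: pull the relevant facts out of a graph first, then hand the model only those. The in-memory triple list, the `match` and `grounded_prompt` helpers and the prompt wording are assumptions for illustration; a real deployment would query a graph database rather than a Python list.

```python
from typing import List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

# Toy knowledge graph; a real system would query a graph database instead.
KG: List[Triple] = [
    ("Paris", "capital_of", "France"),
    ("Berlin", "capital_of", "Germany"),
    ("France", "member_of", "European Union"),
    ("Germany", "member_of", "European Union"),
]


def match(s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None, kg: List[Triple] = KG) -> List[Triple]:
    """Return triples matching the pattern; None acts as a wildcard."""
    return [t for t in kg
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)]


def grounded_prompt(question: str, facts: List[Triple]) -> str:
    """Build a prompt that restricts the model to the retrieved facts."""
    fact_lines = "\n".join(f"- {s} {p} {o}" for s, p, o in facts)
    return ("Answer using only the facts below. If they are not enough, say so.\n"
            f"{fact_lines}\nQuestion: {question}")


# Hop 1 finds the capital edge; hop 2 follows France's outgoing edges.
facts = match(p="capital_of", o="France") + match(s="France")
print(grounded_prompt("Which EU member state is Paris the capital of?", facts))
```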

Why ESCARGOT Gets It Right

So, where does ESCARGOT fit into all this?

It takes the GoT framework and turbocharges it.

ESCARGOT combines LLMs with a dynamic GoT that adapts, learns, and fixes itself as it goes. It even has the good sense to ground its reasoning in knowledge graphs, using structured, factual data instead of wandering off into hallucination territory like a drunk philosopher.

Here’s the kicker: ESCARGOT doesn’t just stop at being smart. It’s also resilient. If it hits a snag, it doesn’t panic or fall apart. It self-corrects, adjusts, and carries on. And because it integrates directly with Python, it handles complex computational tasks with ease, making it ideal for high-stakes industries where getting it wrong isn’t an option.

The Bigger Picture: Tools or Collaborators?

ESCARGOT forces us to rethink how we view AI. Are these systems just glorified tools, or are they something closer to collaborators?

For me as a builder, leaps like these in programming and development are massive because they keep me on my toes and give me opportunities to improve my own software - so I look at these more as collaborators.

Most traditional frameworks assume AI works best in predictable, static environments. ESCARGOT blows that assumption out of the water, showing that adaptability and resilience are just as important as accuracy.

Let’s not forget that AI systems mirror the principles we build into them. If we want them to handle the chaos of the real world, we need to build frameworks that are flexible, transparent, and robust. ESCARGOT isn’t just a technical marvel; it’s an ethical one too, proving that engineering elegance and accountability go hand in hand.

Key Takeaways from ESCARGOT’s Approach

Building an AI system that works in the real world isn’t just about fancy algorithms and theoretical brilliance - it’s about resilience, adaptability, and grounding in reality. Here’s what we can learn from ESCARGOT and how it applies to building AI systems that don’t crumble at the first sign of chaos.

Adaptability Beats Rigidity 🦎

AI frameworks that rely on static, linear reasoning (like Chain-of-Thought) are doomed the moment reality throws them a curveball. Real-world applications demand AI systems that can course-correct, revise their assumptions, and dynamically adjust their approach as new information emerges.

Facts Matter - And So Does Context 📚✅

Large Language Models (LLMs) have a well-documented problem: they hallucinate. Even when their responses sound convincing, they might be entirely incorrect. The key to reliability is ensuring that every AI response is grounded in structured, verifiable knowledge.
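One simple way to act on this is to check each claim in a model’s answer against the knowledge graph before it reaches a user. The sketch below assumes the claims have already been extracted into (subject, predicate, object) triples, which in practice is its own hard problem; the `verify_claims` name, the triples and the labels are all hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Triple = Tuple[str, str, str]


def verify_claims(claims: Iterable[Triple], kg: Iterable[Triple]) -> Dict[Triple, str]:
    """Label each claim 'supported' if the graph contains it, else 'unverified'."""
    known = set(kg)  # set lookup keeps this fast for large graphs
    return {claim: ("supported" if claim in known else "unverified") for claim in claims}


kg: List[Triple] = [("Paris", "capital_of", "France"), ("Berlin", "capital_of", "Germany")]
claims: List[Triple] = [("Paris", "capital_of", "France"), ("Paris", "capital_of", "Germany")]

for claim, label in verify_claims(claims, kg).items():
    print(label, claim)
# → supported ('Paris', 'capital_of', 'France')
#   unverified ('Paris', 'capital_of', 'Germany')
```

The label is “unverified” rather than “false” on purpose: a missing triple means the graph can’t vouch for the claim, not that the claim is wrong.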

Explainability & Transparency Are Non-Negotiable 🔍⚖️

AI systems operating in a black box are not just frustrating - they’re dangerous. Users need to understand why an AI reached a particular conclusion, especially in fields like law, finance, and medicine where decisions have real consequences.

ESCARGOT builds transparency in by structuring its reasoning process as an executable Python-based GoT. Each step in the reasoning process can be traced back, debugged, and audited - something that traditional black-box LLM outputs can’t offer. This means users can validate how a conclusion was reached rather than just accepting an AI-generated response at face value.
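The general idea of a traceable reasoning step is easy to sketch in plain Python: wrap each step so its inputs and its output or error land in a log you can inspect afterwards. This is a hypothetical decorator (`traced`, `AUDIT_LOG` and the two example steps are made up), not how ESCARGOT itself records its GoT execution.

```python
import functools
from typing import Any, Callable, Dict, List

AUDIT_LOG: List[Dict[str, Any]] = []


def traced(fn: Callable) -> Callable:
    """Record each call's step name, arguments and result (or error) in AUDIT_LOG."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        entry: Dict[str, Any] = {"step": fn.__name__, "args": args, "kwargs": kwargs}
        try:
            entry["result"] = fn(*args, **kwargs)
            return entry["result"]
        except Exception as exc:
            entry["error"] = repr(exc)
            raise
        finally:
            AUDIT_LOG.append(entry)  # logged whether the step succeeded or failed
    return wrapper


@traced
def keep_positive(values: List[float]) -> List[float]:
    return [v for v in values if v > 0]


@traced
def total(values: List[float]) -> float:
    return sum(values)


print(total(keep_positive([3, -1, 4])))  # → 7
for entry in AUDIT_LOG:
    print(entry)
```

Because the log entry is written in a `finally` clause, failed steps are recorded too, which is usually the part an auditor cares about most.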

Resilience: AI Needs to Handle Errors Without Meltdowns 🛠️🔄

Many AI frameworks collapse the moment they encounter an unexpected scenario. That’s not good enough for real-world applications. AI systems need built-in self-correction mechanisms so they don’t just fail - they recover and learn.

ESCARGOT’s self-debugging mechanism ensures that if an error occurs during execution, the system doesn’t simply return a failure message - it analyses the issue, revises its code, and re-executes the faulty component.

This is critical for complex tasks, where errors can have cascading effects if left unchecked. If an AI system cannot self-correct, it cannot be relied upon for high-stakes decision-making.
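The retry loop behind that kind of self-debugging can be sketched in a few lines. This is an illustration under assumptions, not ESCARGOT’s implementation: `run_with_self_debug` and `toy_revise` are hypothetical, `toy_revise` stands in for an LLM that reads the traceback and returns corrected code, and the generated code is assumed to store its answer in a variable called `result`. Running model-written code with `exec` is only sensible inside a sandbox.

```python
import traceback
from typing import Any, Callable, Dict


def run_with_self_debug(code: str, revise: Callable[[str, str], str],
                        max_attempts: int = 3) -> Any:
    """Execute code; on failure, hand the traceback to `revise` and try the new version."""
    for attempt in range(1, max_attempts + 1):
        namespace: Dict[str, Any] = {}
        try:
            exec(code, namespace)
            return namespace["result"]  # convention: generated code sets `result`
        except Exception:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            code = revise(code, traceback.format_exc())


def toy_revise(code: str, error: str) -> str:
    # Stand-in for an LLM call: patches the one bug this example knows about.
    if "ZeroDivisionError" in error:
        return code.replace("/ len(values)", "/ max(len(values), 1)")
    return code


buggy = "values = []\nresult = sum(values) / len(values)"
print(run_with_self_debug(buggy, toy_revise))  # → 0.0
```

Capping the attempts matters: if the “fix” never works, the loop re-raises the last error rather than spinning forever.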

AI Should Be a Partner, Not Just a Tool 🤝

There’s a difference between automation and augmentation. AI isn’t about replacing humans - it’s about enhancing them. The best AI systems don’t just execute tasks; they collaborate, problem-solve, and bring fresh insights to the table.

ESCARGOT’s approach forces us to rethink AI’s role. It doesn’t just retrieve data - it actively strategises, refines its methods, and executes its reasoning as an agent. It can generate multiple solution paths, evaluate them, and iterate until it finds the best answer.

For anyone developing AI applications, the future isn’t about creating rigid, rule-following bots - it’s about building AI collaborators that enhance human intelligence rather than replace it.

At the end of the day, ESCARGOT isn’t just another AI framework. It’s a blueprint for what AI should be - smart, adaptable, and grounded in reality. If you’re serious about building AI that works in the real world, this is where you start. If I hadn’t already built my own framework (Templonix), I would want to use this.

For a deeper dive into ESCARGOT, check out the full monty here: ESCARGOT GitHub Repository and the published research paper: Oxford Bioinformatics.

If you’re using Agents or planning to soon, I’d love to hear about what you’re doing and any challenges you’re running into.

Drop a comment below or reach out directly - I read every response.

Until next time.

Chris