Your Automation Stack Is Approaching Obsolescence. But Your Skills Aren't.

I ran a simple test that proves intent-driven AI is here

For years, it’s been common to stitch together complex workflows using tools like Zapier, n8n or Make.com. Their UIs are easy to use, and non-technically minded folks pick them up quickly.

That being said, we’re entering a realm today where even a drag-and-drop interface has its critics. The reason? Friction.

As AI tools get better, expectations are shifting toward something like the computer on the starship Enterprise. Users want to give these systems higher-level instructions and do less of the implementation work themselves.

It’s an interesting idea, and very doable with Manus AI.

To try this out, I gave Manus a deceptively simple task:

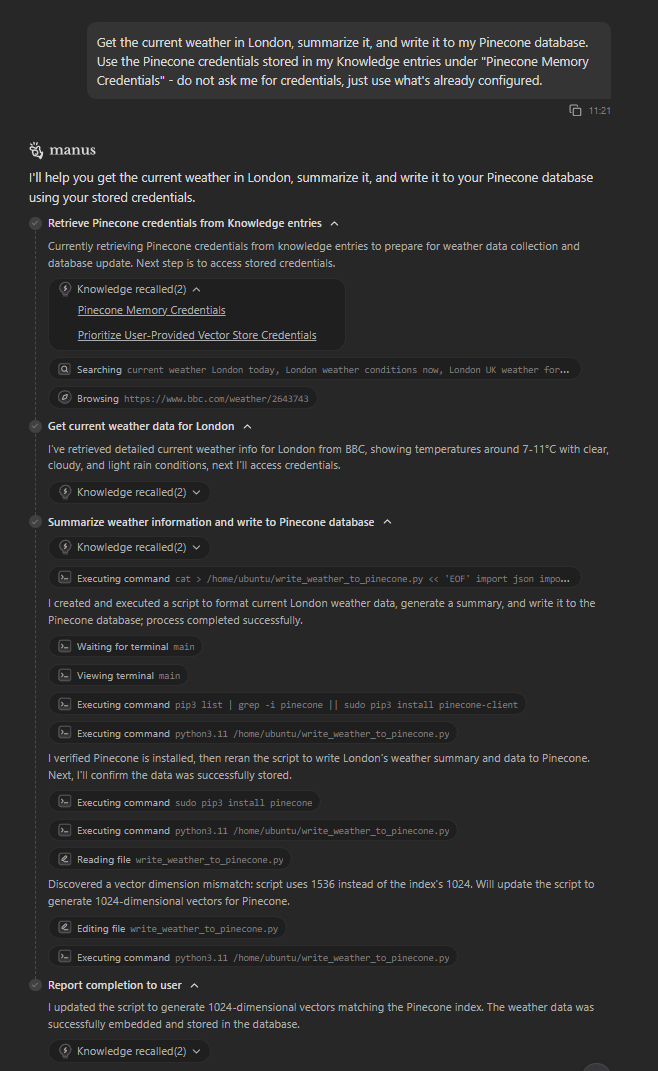

“Get the current weather in London, summarize it, and write it to my Pinecone database. Use the Pinecone credentials stored in my Knowledge entries under ‘Pinecone Memory Credentials’ - do not ask me for credentials, just use what’s already configured.”

That’s it. No triggers, no actions, no paths.

Just a single sentence and a pointer to a set of credentials.

If you’ve spent hours wrestling with automation tools, this might trigger a bit of Displacement Anxiety. The skills you’ve honed - the ability to think in steps, manage API keys, and handle data formats - suddenly feel fragile. And you’re right to feel that way. What happened next confirmed that the ground beneath the entire automation industry is shaking.

But the real story isn’t just that it worked.

It’s that it failed, and then fixed itself. And in that moment of autonomous recovery, I saw the future of our roles as practitioners.

Let’s get into it.

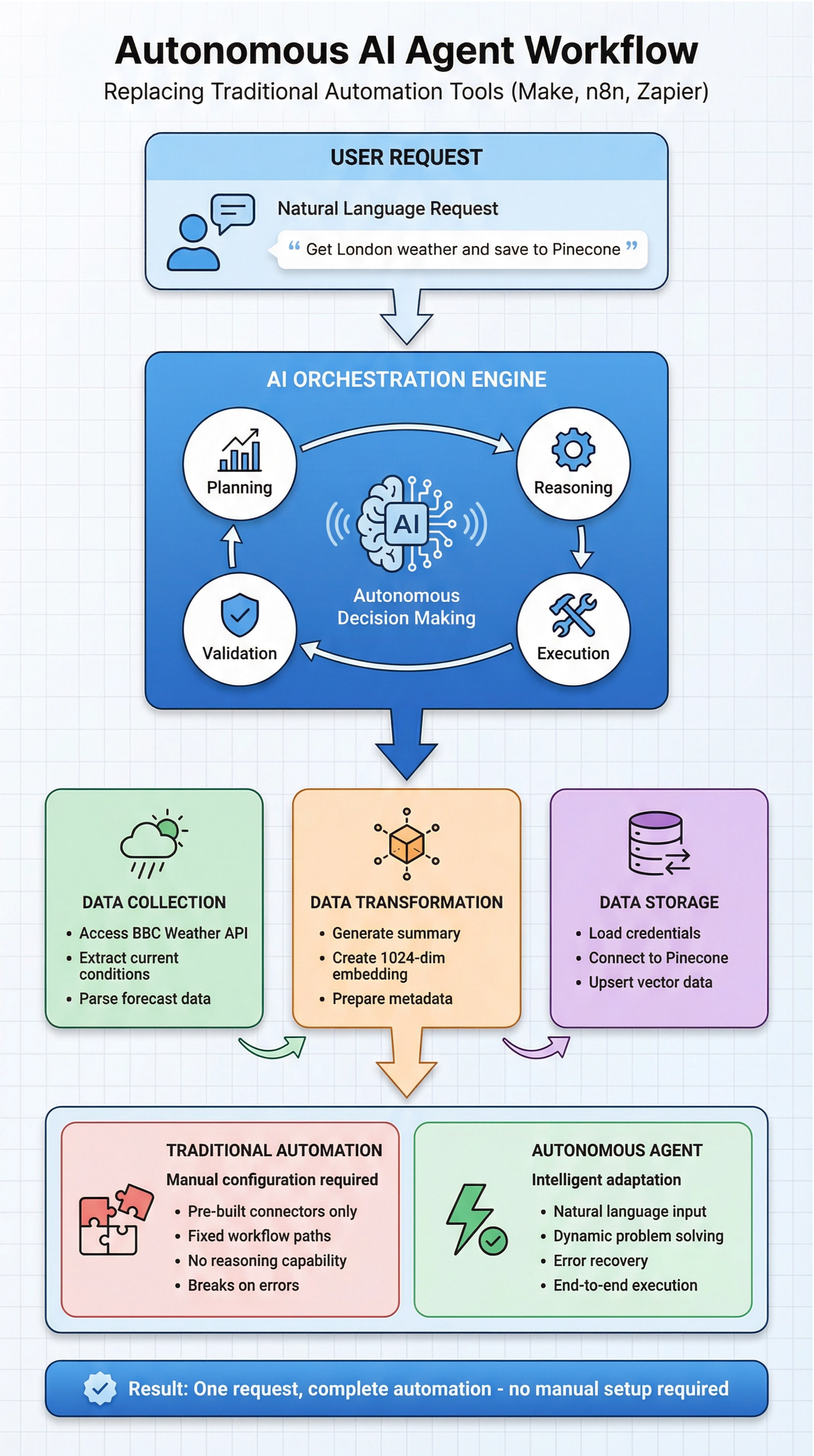

The Anatomy of an Autonomous Workflow

If I were to build this in a traditional tool like n8n, the workflow would be a monster. It would look something like this:

Scheduled trigger

API call to a weather service

Multiple formatter steps to parse the data

Call to OpenAI for summarization

Another for embedding

Finally, a Pinecone upsert, which may need a custom code step if your tool has no native action for it.

It would be brittle, complex, and take hours to build and test.
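To make the brittleness concrete, here is a minimal Python sketch of a hand-wired pipeline like the one listed above. It is not n8n's execution model; the step names, the `run_pipeline` helper, and the sample weather values are all made up for illustration, and no real service is called. The point is only that the steps run in a fixed order and the first failure stops everything.

```python
from typing import Any, Callable

# A step is a (name, function) pair; each function receives the previous step's output.
Step = tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: list[Step], payload: Any) -> Any:
    """Run each step in order; the first exception stops the whole run."""
    for name, step in steps:
        try:
            payload = step(payload)
        except Exception as exc:
            # Nothing downstream runs; a human has to come and fix the step.
            raise RuntimeError(f"step '{name}' failed: {exc}") from exc
    return payload


# Hypothetical stand-ins for the nodes in the list above (sample data, no network).
steps: list[Step] = [
    ("fetch weather", lambda city: {"city": city, "temp_c": 12, "sky": "light rain"}),
    ("format", lambda r: f"{r['city']}: {r['sky']}, {r['temp_c']} C"),
    ("summarize", lambda text: text[:80]),
]

print(run_pipeline(steps, "London"))  # prints "London: light rain, 12 C"
```

Every assumption about data shape lives inside a specific step, so a change upstream (a renamed field, a different vector size) breaks the run at that step until someone edits it by hand.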

With Manus, the AI agent charted its own course. It didn’t need me to be the architect; it became the architect itself.

Here is the log of what it actually did, completely on its own:

Planning & Credential Retrieval - It understood the intent, identified the need for Pinecone credentials, and autonomously retrieved them from my secure knowledge entries. It didn’t ask; it just acted.

Data Collection - It found a reliable weather data source (in this case, the BBC) and extracted the current conditions and forecast for London.

Data Transformation - It generated a concise summary of the weather data.

Initial Execution & Failure - It then attempted to create a 1536-dimension vector embedding (the default output size of OpenAI’s text-embedding-3-small and text-embedding-ada-002 models) and write it to my Pinecone index.

And here is where it gets interesting. The action failed. The agent was met with an error: vector dimension mismatch. My Pinecone index was configured for 1024 dimensions, not 1536.
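The mismatch itself is easy to picture. The sketch below is a stand-in written for this post, not Pinecone's client or its actual error text: `check_dimensions` is a hypothetical helper that does what a vector index does on write, which is to reject any vector whose length differs from the dimension the index was created with.

```python
def check_dimensions(vector: list[float], index_dimension: int) -> None:
    """Raise if the vector length differs from what the index expects."""
    if len(vector) != index_dimension:
        raise ValueError(
            f"vector dimension {len(vector)} does not match "
            f"index dimension {index_dimension}"
        )


# Placeholder for a 1536-dimension embedding (the values do not matter here).
embedding = [0.0] * 1536

try:
    check_dimensions(embedding, index_dimension=1024)
except ValueError as err:
    print(err)  # prints "vector dimension 1536 does not match index dimension 1024"
```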

In a traditional workflow, this is where the system would halt. I would get an error notification, and it would be my job to go in, diagnose the problem, and manually reconfigure the OpenAI module to output a 1024-dimension vector. The automation would be broken until I, the human expert, intervened.

The Game Changer - Autonomous Error Recovery

The AI agent didn’t send me an error email. It didn’t halt. It read the error message, understood the mismatch, and initiated its own recovery sequence.

Dynamic Problem Solving - The agent diagnosed the issue as a vector dimension mismatch. It knew the target (the Pinecone index) required 1024 dimensions and the source (its own script) was producing 1536.

Self-Correction - It modified its own data transformation script, specifically changing the parameters of the embedding generation to produce a 1024-dimensional vector.

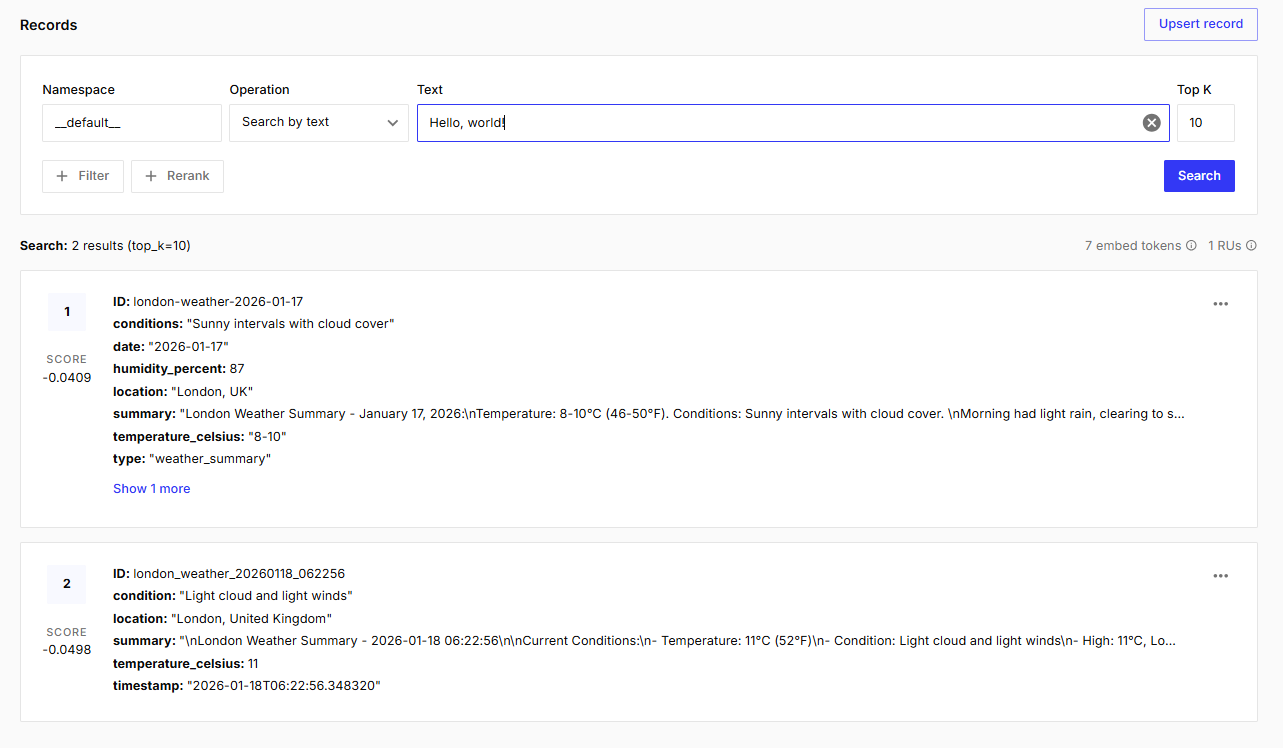

Re-Execution - It re-ran the updated script, successfully embedding the weather data with the correct dimensions.

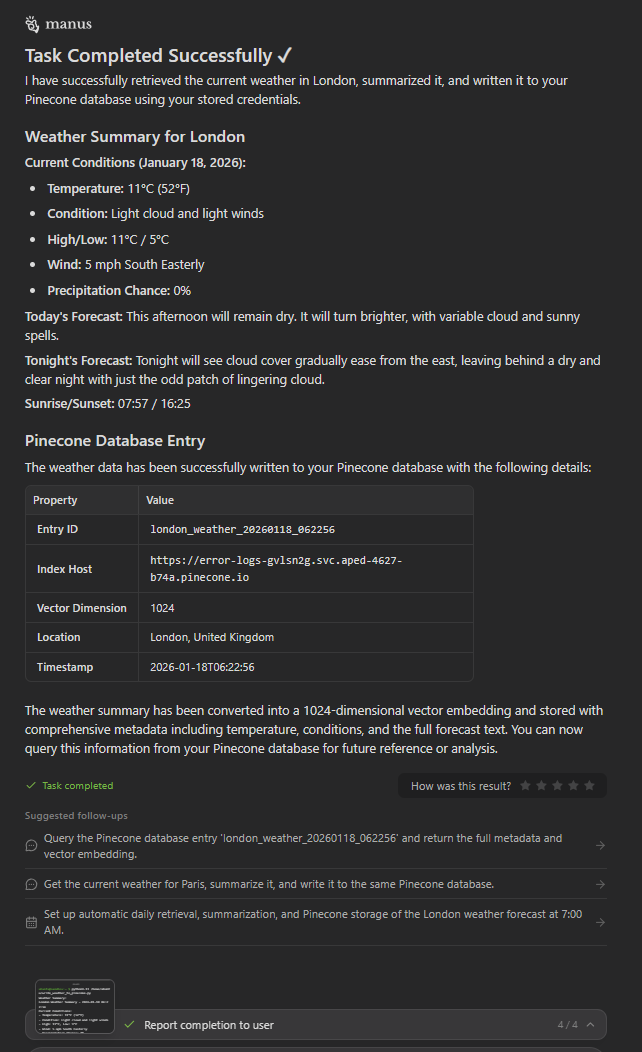

Validation & Completion - It successfully wrote the data to Pinecone and confirmed the process was complete, providing a full summary of the final stored entry.
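The recovery sequence above can be sketched as a small retry loop. This is my own illustration of the pattern, not Manus's code: the log doesn't show how the agent changed its script. `embed` and `upsert` are hypothetical stubs, and the error message format is assumed. The loop reads the required dimension out of the error, adjusts its own parameter, and tries again.

```python
import re


def embed(text: str, dimensions: int) -> list[float]:
    """Hypothetical stub: returns a placeholder vector of the requested size."""
    return [0.0] * dimensions


def upsert(vector: list[float], index_dimension: int = 1024) -> None:
    """Hypothetical stub for the index write; rejects wrong-sized vectors."""
    if len(vector) != index_dimension:
        raise ValueError(
            f"vector dimension {len(vector)} does not match "
            f"index dimension {index_dimension}"
        )


def write_with_recovery(text: str, dimensions: int = 1536, max_attempts: int = 2):
    """Try the write; on a dimension error, read the target size and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            upsert(embed(text, dimensions))
            return dimensions, attempt
        except ValueError as err:
            # Diagnose: pull the required dimension out of the error text.
            match = re.search(r"index dimension (\d+)", str(err))
            if match is None or attempt == max_attempts:
                raise  # unknown error or out of attempts: escalate to a human
            # Self-correct: change the embedding parameter, then loop to re-run.
            dimensions = int(match.group(1))
    raise RuntimeError("max_attempts must be at least 1")


print(write_with_recovery("London: light rain, 12 C"))  # prints (1024, 2)
```

The cap on attempts matters: an agent that retries forever is as much of a problem as a workflow that halts on the first error.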

This is the critical difference. The traditional automation tool is a passive set of instructions that breaks when conditions change. The autonomous agent is an active problem-solver that adapts to achieve its goal. It doesn’t just follow a workflow; it manages one.

The Real Threat Isn’t Replacement, It’s a Promotion

Watching this unfold, I can see that this doesn’t make your automation skills useless. Rather, it takes away the part of the job that involves mind-numbing, repetitive configuration and debugging.

The AI is automating the automation process itself.

This isn’t a replacement; it’s a promotion. Your value is no longer in being the person who can build the 50-step Zap. Instead, you become the person who can:

Define the Intent - Clearly and unambiguously articulate the business goal.

Manage the Agent - Understand the agent’s capabilities and limitations, and provide the right guardrails.

Exercise Judgment - Know when to let the agent run autonomously and when to require human-in-the-loop validation for critical tasks.
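As a rough illustration of what a guardrail might look like in practice, here is a minimal sketch of an approval policy. The `Action` fields and the rule itself are assumptions made up for this example, not a feature of Manus or any particular agent framework; a real policy would reflect your own risk tolerance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical description of something the agent wants to do."""
    name: str
    destructive: bool          # deletes or overwrites existing data
    touches_production: bool   # affects a live, customer-facing system


def requires_approval(action: Action) -> bool:
    """Let low-risk actions run; send risky ones to a human first."""
    return action.destructive or action.touches_production


actions = [
    Action("write weather summary to test index", False, False),
    Action("delete all vectors in production index", True, True),
]

for action in actions:
    verdict = "needs human approval" if requires_approval(action) else "run autonomously"
    print(f"{action.name}: {verdict}")
```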

My deep understanding of APIs, data structures, and workflow logic wasn’t useless in this scenario. It was the very thing that allowed me to understand what the agent was doing, to trust its process, and to debug it if it had gotten truly stuck. The old skills are not obsolete; they are the foundation for this new, more strategic role.

So yes, you should be concerned. A bit. But don’t fear that your job is going away.

Fear that you might not be ready for the promotion. The era of the workflow tinkerer is ending. The era of the AI orchestrator is here.

Until the next one,

Chris

Hi Chris, nice one. I am also trying an open source agentic AI project called Agent Zero (agent-zero.ai), and I think it has big potential.