Two conversations last week reminded me why 95% of enterprise AI projects fail to scale beyond pilot programs.

The first was a Zoom call with a fintech startup whose CTO spent twenty minutes explaining their “revolutionary AI agent” for loan processing.

When I asked him to walk through a single application, it became clear they’d built a sophisticated document parser that occasionally asked ChatGPT to rewrite rejection letters in friendlier language.

Useful? Absolutely.

An agent? Not even close.

Wednesday’s meeting was with a logistics company whose innovation director proudly showed me their “autonomous shipment processing system.” When I dug deeper, I found that their “AI transformation” was a traditional workflow that routed angry customers to human support and sent standard responses to routine inquiries. They’d added an LLM call for sentiment analysis and suddenly believed they were on the cutting edge of artificial intelligence.

Both companies had the same problem: they were solving real business challenges with perfectly adequate technology, but they’d convinced themselves they were building something they weren’t.

More importantly, both were about to make scaling decisions based on fundamental misunderstandings about what they’d actually built.

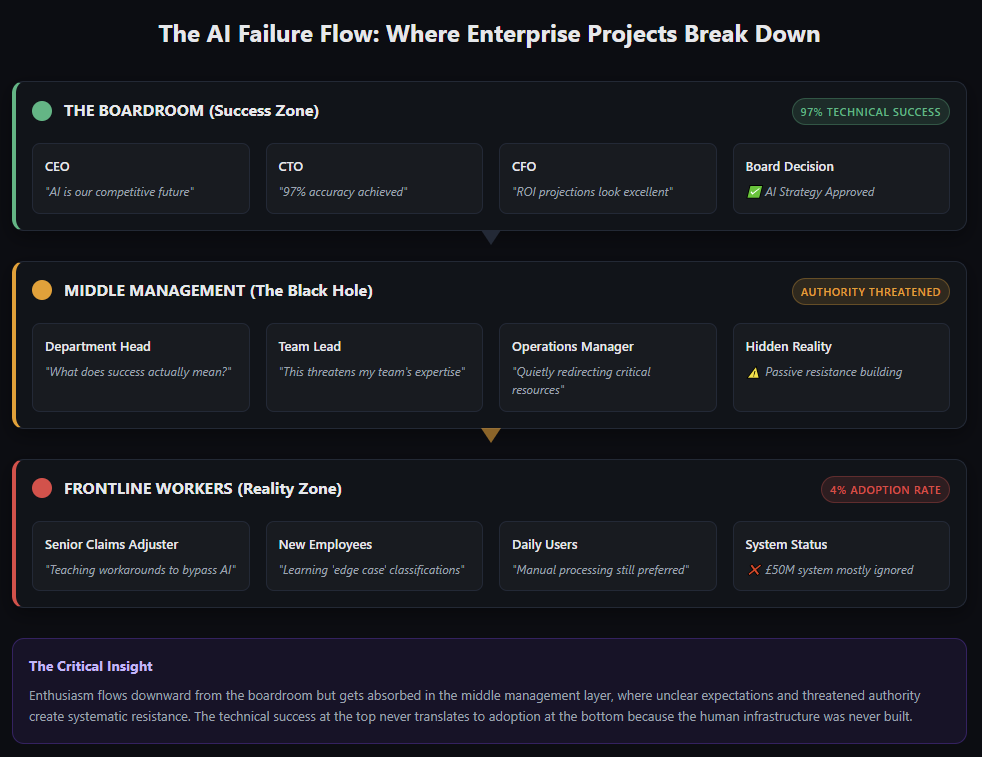

This pattern isn’t unique to my week. MIT’s research confirms what I see repeatedly: the failure has nothing to do with algorithms, cloud architecture, or model accuracy.

It’s about the humans everyone forgot to consider.

Let’s get into why this is a killer for your project if you don’t give it due care and attention.

The Invisible Resistance Network

Consider this fictional (yet very plausible) scenario.

Your company spends eighteen months building an AI system that can process insurance claims in minutes instead of days.

Its accuracy beats human performance by 23%.

The board is thrilled.

A big bonus is on the cards.

Then you discover that senior claims adjusters are finding creative ways to bypass the system entirely.

They’re categorising complex claims as “edge cases” requiring manual review. They’re coaching younger staff on which keywords trigger the AI’s uncertainty responses. They’re systematically undermining your technical masterpiece.

Why?

Because nobody asked them what they thought about being replaced by a machine.

This isn’t sabotage; it’s survival instinct. And it’s happening in organisations right now, whether the project sponsors know about it or not.

The Three Patterns That Predict Failure

Over the last nine months or so, I’ve seen the same failure patterns show up with startling consistency.

I’ve started to characterise them like this.

The Executive Enthusiasm Gap

The C-suite loves AI in theory but can’t agree on what success looks like in practice. This is largely because most of the folks at the top are consumed with being “seen doing AI” without any real thought for what it means.

One executive measures ROI, or at least tries to.

Another tracks user adoption. A third focuses on competitive advantage.

Without aligned expectations, every outcome looks like failure to someone important.

The Middle Management Black Hole

AI strategy gets created in boardrooms and deployed to frontline workers, but it dies in the middle management layer.

These are the people who actually run day-to-day operations, and they’re usually the last to be consulted and the first to lose authority when AI systems arrive.

The Skills Anxiety Spiral

Employees hear “AI transformation” and immediately start updating their LinkedIn profiles.

The more talented ones leave before the system even launches.

The ones who stay become passive-aggressive obstacles to adoption.

Sound familiar?

What the Winners Do Differently

The 5% of companies that actually succeed with AI follow a completely different playbook. They treat AI implementation as an organisational psychology challenge, not a technology project.

From what I’ve observed, the successful ones share several common approaches.

Executive Alignment That Goes Beyond Enthusiasm

The companies that avoid failure create formal mechanisms to ensure leadership actually agrees on what success looks like.

This isn’t just a kickoff meeting where everyone nods. It’s documented expectations, regular check-ins, and clear accountability for when things go sideways.

Trust-Building Over Training

The organisations that achieve high adoption rates focus on addressing scepticism rather than just teaching people how to use the technology.

They identify respected employees to serve as advocates, they’re transparent about how the AI makes decisions, and they design systems to enhance human judgment rather than replace it.

Proactive Resistance Management

The successful implementations systematically identify where resistance is likely to emerge and address concerns before they become roadblocks.

They understand that middle managers and frontline workers have legitimate fears about job security and professional relevance.

The secret, then, isn’t better technology; it’s better psychology.

The Diagnostic Question

Here’s how you can tell if your organisation is headed for AI failure.

If your most influential middle managers had serious concerns about your AI implementation, would you know about it before or after they’d convinced their teams to resist?

If the answer is “after,” you’re already in trouble.

AI transformation doesn’t fail in boardrooms where everyone nods enthusiastically about digital futures.

It fails in break rooms where senior employees whisper about job security, and in department meetings where middle managers quietly redirect resources away from AI-enabled processes.

The technical challenges of AI are actually the easy part.

The organisational challenges (building trust, aligning incentives, managing the human side of change) are where most initiatives collapse.

The Real Success Framework

The companies that crack this code understand that AI implementation requires a systematic approach to what I call “change psychology.” They invest as much effort in adoption dynamics as in algorithm optimisation.

This means mapping informal influence networks before deployment, identifying potential resistance sources and addressing concerns proactively, and creating peer advocacy systems that make AI adoption feel collaborative rather than imposed.

It means designing measurement systems around business outcomes from day one, not technical performance metrics that nobody in the business actually cares about.

Most importantly, it means recognising that even the most sophisticated technology is worthless if the humans who are supposed to use it find ways to work around it instead.

The complete methodology for building this human infrastructure involves systematic approaches for creating executive alignment, developing change champion networks, managing the five predictable resistance patterns, and measuring human adoption alongside technical implementation.

Most crucially, it shows you how to identify and fix the organisational gaps that turn technically perfect AI systems into expensive failures, before you’ve spent millions learning this lesson the hard way.

Until the next one,

Chris

The detailed methodology I’ve developed reveals exactly how the 5% of successful organisations build this human infrastructure. It includes systematic approaches for executive alignment, change champion networks, the five resistance patterns, and adoption measurement systems that actually predict success.

Ready to see the complete framework that prevents AI implementation failure?