Is Personal AI Infrastructure the Future? Why Ownership Beats Rental

Reduce SaaS dependency and gain the AI productivity you've been promised

The AI industry has a dirty secret.

Whilst everyone's obsessing over building complex autonomous agents that promise to revolutionise everything, most people are stuck in a frustrating middle ground.

You've probably felt this gap yourself.

You want AI that can actually do things—send emails, create calendar events, remember important context across conversations—but you're not interested in becoming an AI infrastructure specialist.

You don't want to manage vector databases or oversee autonomous systems that might make expensive mistakes. You just want intelligence that works within the tools you already use. And you’re not happy with the escalating monthly fees that are becoming more common among automation vendors.

This disconnect between what the industry builds and what people actually need isn't just frustrating—it's getting expensive.

Meanwhile, the promise of AI productivity remains largely theoretical because the solutions are either too basic or too complex for real-world professional use.

I’ve come up with my own answer to this problem.

Let’s get into what it is.

The Professional Intelligence Gap

Recent conversations with working professionals reveal a consistent pattern: they don't want to learn about vector stores, memory systems, or reasoning frameworks. They want to use tools that simply work.

As one computer scientist put it perfectly: "Everyone's talking about attempting to build agents, but they're not using context, they're not using system-level thinking—they're building expensive automations wrapped in marketing hype."

This is the core problem with today's AI landscape. On one side, you have basic conversational AI that can take limited action.

On the other, you have complex agent systems that require significant infrastructure investment and ongoing management. There's almost nothing in between that gives you the intelligence you need with the control you want.

The result?

Most people are paying premium prices for fragmented solutions. Zapier for basic automation, Microsoft Copilot for AI assistance, various point solutions for specific needs—all running in different clouds, with different pricing models, storing your data on servers you don't control.

What Professional Intelligence Actually Looks Like

As I wrote about some time ago, I’ve been spending a lot of time looking into the options for simplifying this situation via a concept I refer to as Professional Intelligence Systems. In short, this is the means to maximise the use of what we already have.

My view is that the solution isn't more complex AI or agents—it's taking advantage of the infrastructure you already have.

Instead of renting AI capabilities from multiple vendors, you can build on the platform you're likely already using whilst minimising additional dependencies.

My answer to this has been to create Templonix Lite, a Model Context Protocol (MCP)-enabled version of the agentic framework I use in my day job.

The approach is simple: a free, open-source personal AI infrastructure that runs locally on your machine. This gives you the intelligence capabilities you need from the Claude Desktop application whilst maintaining complete control over your tools and costs.

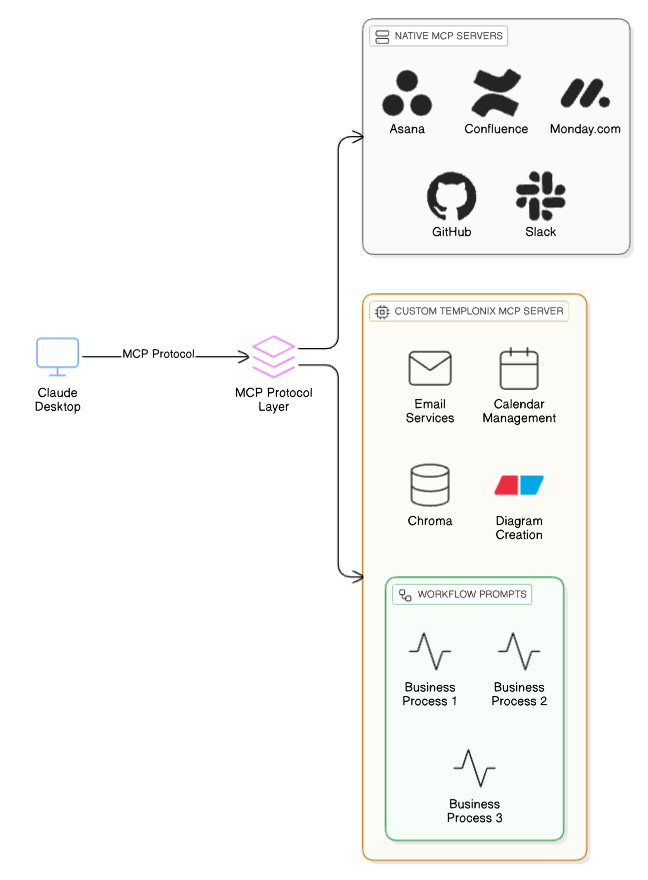

At a high level, the solution looks like this.

The reasoning layer is Claude.

SaaS systems you might use can be accessed if you wish.

Templonix Lite contains a set of tools and prompt driven workflows that are exposed to Claude.
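To make "tools exposed to Claude" a little more concrete, here is a minimal sketch of the idea in Python. It is not the Templonix Lite code. MCP is built on JSON-RPC 2.0, and a client such as Claude Desktop discovers tools with a `tools/list` request and runs one with `tools/call`. The `tool` decorator, the `handle` function and the `list_workflows` tool are hypothetical names for illustration, and a real server would also handle the initialisation handshake and transport, usually through an MCP SDK.

```python
# Simplified illustration of MCP-style tool exposure (not the Templonix Lite source).
TOOLS = {}  # hypothetical in-memory registry: tool name -> metadata and handler


def tool(name, description, input_schema):
    """Register a function as a tool that a client can discover and call."""
    def register(fn):
        TOOLS[name] = {
            "description": description,
            "inputSchema": input_schema,
            "handler": fn,
        }
        return fn
    return register


@tool("list_workflows", "List the available prompt workflows.",
      {"type": "object", "properties": {}})
def list_workflows():
    # Hypothetical workflow names, hard-coded for the example.
    return "sales_negotiation, systems_design"


def handle(request):
    """Answer a JSON-RPC 2.0 request for the two tool-related MCP methods."""
    request_id = request.get("id")
    method = request.get("method")

    if method == "tools/list":
        result = {"tools": [
            {"name": name,
             "description": entry["description"],
             "inputSchema": entry["inputSchema"]}
            for name, entry in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return {"jsonrpc": "2.0", "id": request_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = entry["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32601, "message": "Method not found"}}

    return {"jsonrpc": "2.0", "id": request_id, "result": result}
```

Calling `handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})` returns the tool catalogue, which is roughly what Claude sees when it asks a local server what it can do.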

Real Control vs Cloud Dependency

Here's what becomes possible when you own your AI infrastructure instead of renting it.

True Calendar and Email Control

Unlike cloud services that can only read your calendar and email, Templonix Lite can actually create events, send invites, and automate communications through direct API integration.

No monthly fees for basic functionality that should work by default.

Google already supplies the mechanism to achieve this through its online console. Systems such as Zapier and Make simply abstract it behind their cloud offerings. The question is: are the fees worth it?

In this short video, you’ll see me testing the Calendar feature by asking Claude to add a series of calendar invites to my schedule for next week based on the needs of a fictional project that has a Friday deadline.

All at no cost.
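For a rough idea of what sits behind a request like that, the sketch below builds one work block per weekday of next week as event payloads. The `summary`, `start` and `end` fields follow the shape of a Google Calendar API v3 event resource, which is what an `events.insert` call accepts. Everything else is an assumption for illustration: the function name, the 09:00 start, the two-hour length and the `Europe/London` time zone. The sketch only prepares the payloads and makes no API call.

```python
from datetime import date, datetime, time, timedelta

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"]


def next_weeks_work_blocks(today, summary, start_hour=9, hours=2,
                           time_zone="Europe/London"):
    """Build one event payload per weekday of the week after `today`."""
    # weekday() is 0 for Monday, so this always lands on a future Monday.
    monday = today + timedelta(days=7 - today.weekday())
    events = []
    for offset, day_name in enumerate(DAY_NAMES):
        day = monday + timedelta(days=offset)
        start = datetime.combine(day, time(hour=start_hour))
        end = start + timedelta(hours=hours)
        events.append({
            "summary": f"{summary} ({day_name})",
            "start": {"dateTime": start.isoformat(), "timeZone": time_zone},
            "end": {"dateTime": end.isoformat(), "timeZone": time_zone},
        })
    return events


# Example: plan the week leading up to a Friday deadline.
blocks = next_weeks_work_blocks(date(2025, 1, 1), "Project Falcon prep")
```

Each dictionary in `blocks` could then be handed to the Calendar API by whichever tool holds your credentials.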

Persistent Local Memory

Both Claude and ChatGPT now offer cross-chat memory, but local memory provides several critical advantages that cloud-based solutions cannot match. Your conversations and insights are stored using local ChromaDB storage that integrates with Claude Desktop, giving you:

True Permanence

Cloud providers can reset, limit, or monetise memory features at any time. Your local memory persists regardless of vendor policy changes, subscription tiers, or platform switches.

Professional Data Security

Store sensitive business insights, client details, and strategic thinking without concerns about vendor data policies or potential security breaches. Information like "Client X is price-sensitive, focus on ROI metrics" or "Competitor Y struggles with enterprise sales cycles" stays entirely under your control.

Unlimited Growth

No token limits, storage caps, or premium feature gates. Your memory becomes more valuable over time without vendor restrictions on how much context you can maintain or search.

Direct Access

Query your memory database independently of AI conversations. Search for specific client interactions, project insights, or strategic notes without relying on conversational interfaces or vendor search limitations.
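The point about direct access is easier to see with a small example. Templonix Lite uses ChromaDB for this. The sketch below deliberately swaps in SQLite from the Python standard library so that it runs without extra installs, and it does plain keyword matching rather than the similarity search a vector store provides. The table layout and function names are hypothetical.

```python
import sqlite3


def open_memory(path=":memory:"):
    """Open (or create) a local memory store; pass a file path to persist it."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS memories ("
        "id INTEGER PRIMARY KEY, topic TEXT NOT NULL, note TEXT NOT NULL)"
    )
    return conn


def remember(conn, topic, note):
    """Store one note under a topic."""
    conn.execute("INSERT INTO memories (topic, note) VALUES (?, ?)", (topic, note))
    conn.commit()


def search(conn, term):
    """Return (topic, note) pairs whose topic or note contains the term."""
    pattern = f"%{term}%"
    rows = conn.execute(
        "SELECT topic, note FROM memories "
        "WHERE topic LIKE ? OR note LIKE ? ORDER BY id",
        (pattern, pattern),
    )
    return rows.fetchall()


conn = open_memory()
remember(conn, "Client X", "Client X is price-sensitive, focus on ROI metrics")
remember(conn, "Competitor Y", "Competitor Y struggles with enterprise sales cycles")
results = search(conn, "ROI")
```

The store is just a file on your machine, so you can query it from a script or a terminal without opening a chat at all.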

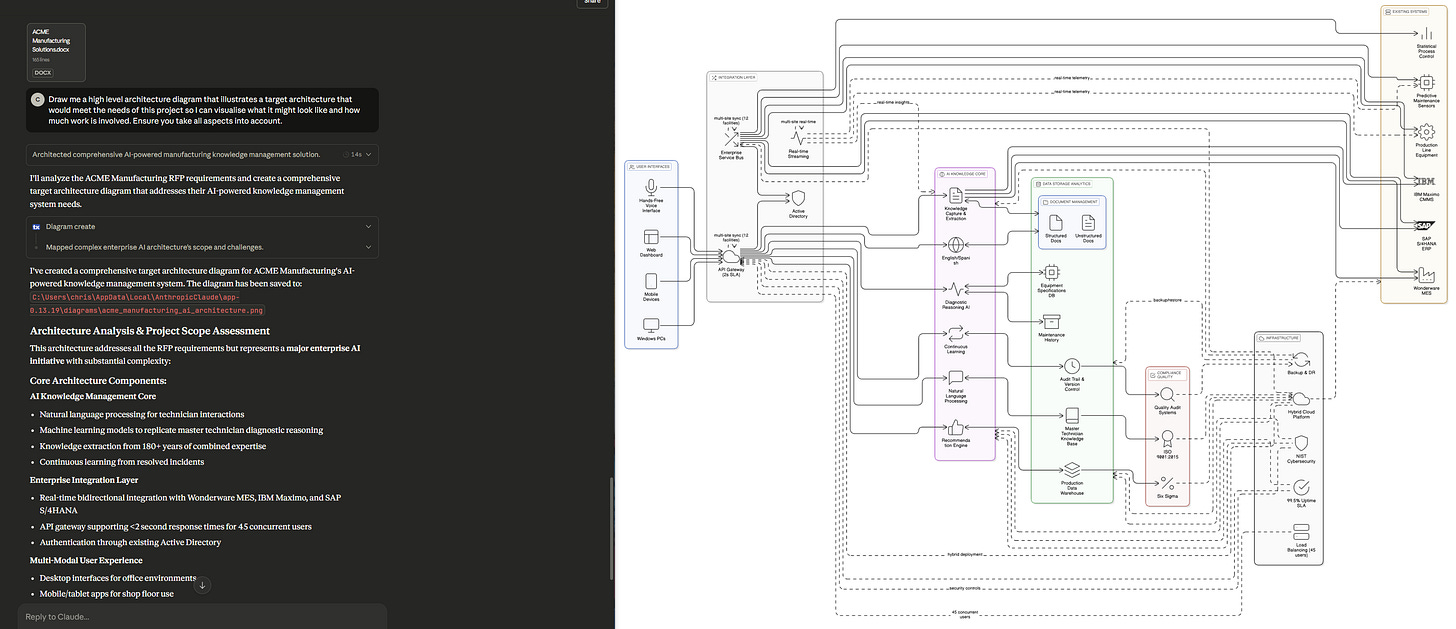

Complex Diagram Generation

I’m a big fan of Eraser.io for publication-quality diagrams on demand. Ask for a flowchart of your morning routine or a system architecture diagram, and get professional results you can actually use in presentations.

Since the API is designed to produce diagrams and save them to the local file system, this is ideal for working with Claude Desktop.

Workflow Specialisation

Thanks to how MCP works, the most significant leap for me is the ability to expose Meta Prompts directly to Claude Desktop, which removes the need to either create a Claude Project or copy and paste the prompt into the chat.

All we do now is create and test our prompts, then add them to the right Templonix Lite folder and they’re immediately available via the Workflow tools. Simply ask Claude what prompts it has, and run them.

This workflow system transforms standard AI into domain-specific expertise with very low friction for the user.

Need sales negotiation strategies? Load the sales workflow.

Require technical architecture guidance? Activate the systems design workflow.

This provides the specialisation of expensive consulting tools whilst keeping your intellectual property local.
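The "drop a prompt in a folder and it becomes available" idea can be sketched in a few lines. This is an illustration under assumptions, not the Templonix Lite implementation: it assumes each workflow is a Markdown file whose file name doubles as the workflow name, and `discover_workflows` is a hypothetical helper.

```python
import tempfile
from pathlib import Path


def discover_workflows(folder):
    """Map each prompt file's name (without extension) to its prompt text."""
    workflows = {}
    for path in sorted(Path(folder).glob("*.md")):
        workflows[path.stem] = path.read_text(encoding="utf-8")
    return workflows


# Demonstrate with a throwaway folder standing in for the workflows directory.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "sales_negotiation.md").write_text(
        "You are a sales negotiation coach.", encoding="utf-8")
    Path(tmp, "systems_design.md").write_text(
        "You are a systems architect.", encoding="utf-8")
    available = discover_workflows(tmp)
```

Because the folder is scanned each time `discover_workflows` is called, a newly saved prompt shows up without any registration step, which is what keeps the friction low.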

Why Infrastructure Ownership Changes Everything

The difference between renting AI capabilities and owning AI infrastructure goes beyond cost savings—it's about strategic control.

When you depend on cloud services for core productivity functions, you're building critical business processes on rented land where the landlords can change the rules at any time.

Privacy by Design

This is a tough topic to do justice. The modern AI world is fraught with concerns and regulations, and in many countries compliance is a barrier to adoption. While no solution is perfect and we must all be mindful of how we use AI when it comes to privacy, I believe localising the use of AI is a step in the right direction.

No Subscription Escalation

Cloud AI services start affordable but costs escalate quickly as you need more capabilities. Microsoft Copilot's meaningful features require enterprise licensing. Zapier's useful automation tiers cost £50+ per month per user. These subscription costs compound across team members and tool categories.
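To see how quickly this compounds, here is a small worked calculation. The £50 per user per month figure comes from the paragraph above. The team size of five and the three tool categories are made-up numbers for illustration, and the function name is hypothetical.

```python
def annual_subscription_cost(monthly_fee_per_user, users, tools=1):
    """Yearly spend when every user pays the same monthly fee for each tool."""
    return monthly_fee_per_user * users * tools * 12


# One person on one £50 tier.
solo_cost = annual_subscription_cost(50, users=1)

# Hypothetical team of five, each paying for three tools at that price.
team_cost = annual_subscription_cost(50, users=5, tools=3)

print(f"Solo: £{solo_cost} per year, team: £{team_cost} per year")
```

A single £50 seat is £600 a year. The same price across five people and three tool categories is £9,000 a year, before any tier upgrades.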

Customisation Freedom

When you own the infrastructure, you can modify and extend capabilities according to your needs rather than waiting for vendor roadmaps. Integration with existing systems follows your priorities, not a SaaS provider's development schedule.

The Technical Reality (Without the Complexity)

Professional-grade capabilities on local infrastructure are surprisingly straightforward to deploy. Under the hood, it's an MCP (Model Context Protocol) server that integrates with Claude Desktop, but you don't need to understand the technical details to benefit from it.

The system uses:

Local ChromaDB for persistent memory storage on your machine

Direct API integration with Google Calendar and Gmail using your credentials

Eraser.io integration for professional diagram generation

Modular workflow system that's extensible as your needs evolve

Installation takes about 30 minutes, and once configured, it simply extends Claude Desktop's native capabilities with the write permissions and persistent memory that should have been included from the start.
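Most of that configuration comes down to telling Claude Desktop where the local server lives. Claude Desktop reads MCP servers from an `mcpServers` section in its `claude_desktop_config.json` file, where each entry names a command and its arguments. The sketch below generates such an entry. The server name `templonix-lite` and the script path are placeholders I have assumed, not the project's real values.

```python
import json


def mcp_server_entry(name, command, args):
    """Build the mcpServers fragment that registers one local MCP server."""
    return {"mcpServers": {name: {"command": command, "args": list(args)}}}


# Placeholder name and path: substitute the values from the actual install.
config = mcp_server_entry(
    "templonix-lite",
    "python",
    ["/path/to/templonix_lite/server.py"],
)

print(json.dumps(config, indent=2))
```

The printed JSON is the fragment you would merge into the existing config file before restarting Claude Desktop.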

Who This Actually Serves

While this is a tough question to answer because we all use AI differently, I see a few different users who’d benefit from localised AI capabilities.

Individual Professionals

Transform Claude into a true personal assistant that manages your schedule, drafts and sends emails, creates professional diagrams, and maintains context about your projects and preferences—all without monthly subscription fees.

Small Business Owners

Automate routine communications, visualise business processes, and maintain customer context without paying enterprise automation platform fees or accepting vendor data policies.

Content Creators

Generate professional diagrams for content, automate outreach workflows, and build a persistent knowledge base about your audience and projects that improves over time.

Anyone Frustrated with Cloud Costs

Eliminate per-user monthly fees for basic AI productivity whilst gaining capabilities that most cloud services don't provide.

The Bigger Picture: AI Infrastructure Independence

What I’ve chosen to build demonstrates a different philosophy about AI adoption.

Instead of pushing everything to cloud services that charge monthly fees and control your data, we can build powerful AI infrastructure that runs locally and gives users complete ownership.

This isn't about avoiding cloud services entirely; it's about choosing when and how to use external services rather than being forced into vendor dependencies for basic productivity functions.

The approach proves that sophisticated AI automation doesn't require complex enterprise agent systems or expensive cloud subscriptions. You can have professional-grade AI intelligence that works within familiar interfaces whilst maintaining complete control over your data and costs.

What's Coming

I'm releasing Templonix Lite as free, open-source software in the coming weeks. The system is designed to be extensible, so the community can add new tools and workflows over time. It will launch with the tools highlighted in this post, with a backlog of others already in the works.

If we’re going to get the most out of this new technology and dispense with all the hype, the future of AI productivity tools should be in the hands of users as much as possible, not locked behind cloud services that can change their terms or pricing at any time. Templonix Lite proves you can have sophisticated AI automation without sacrificing features or paying monthly fees.

Until next time,

Chris

I run powerful models on my MacBook Pro. I am now running Qwen 3 next 80B. I have 128GB of RAM. The Mac M architecture is the easiest way for a consumer to run good AI locally. This new Qwen model replaces GLM 4.1 Air, which was incredible, but the Qwen is much faster and much smarter.

We're blessed with these open source Chinese models that sit on your home machine, not calling home, just for you to work with.

You can also anonymously set up accounts on some AI platforms with Monero and if you don't tell them who you are in the prompts, you can have quite private AI.

Who needs Zapier, when you can have an MCP of your own for FREE!!!