How to Turn Claude Into an App Using 1990s Code

The 30-year-old programming language that turns LLMs from chatty assistants into deterministic applications

By the time you’ve finished reading this, you’ll either think I’m on to something or think I’m completely nuts.

Let me know in the comments! 🤣

Large Language Models have revolutionised how we interact with AI, but anyone who's tried to build reliable business applications on top of them encounters the same frustrating reality: LLMs are inherently non-deterministic conversation partners, not reliable execution engines.

They hedge, they refuse, they "helpfully" modify your instructions, and they interpret rather than execute.

This week, I think I’ve found a way around this.

Further down you’ll find an example prompt you can use to test what I’ve been doing for yourselves.

Also, this is something I plan on spending a lot more time developing, so if you’d like to get involved, drop me a comment or a DM. If I get enough interest from readers, I’ll set up a Slack or a Discord where we can collaborate.

Let’s get into it.

The Business Application Problem

I've been working on prompting for quite some time now, driven by two critical needs.

First, I've been involved with Professional Intelligence Systems where I'm trying to use existing cloud platforms to actually build applications that people can use in business contexts. These platforms are becoming incredibly attractive because the infrastructure is already there – you don't need to build everything from scratch.

Second, building agents requires really good prompts. An agent is fundamentally a business application, and if you're trying to build something people can rely on using a large language model, you must have deterministic functionality. You can't just let the AI run loose and hope for the best.

But here's where things get paradoxical: what we think of as "improvement" in AI is actually making this harder.

The Irony of "Better" AI

What I've noticed in recent months, especially with new releases like GPT-5, is that LLMs are becoming more and more helpful.

On the surface, this sounds great. But for someone trying to build business applications, this increased helpfulness is actually a problem. When AI becomes more conversational and "intelligent," it becomes less deterministic – and determinism is exactly what you need for reliable business applications.

Consider a typical scenario: you need an LLM to analyze data according to a specific methodology. With conventional prompting, you might write: "First analyze the task requirements, then check for specialized models, then evaluate costs, and finally provide recommendations."

Sounds straightforward? Not quite.

The "helpful" LLM might decide to skip steps it deems unnecessary, add caveats you didn't ask for, or worse, refuse certain parts while explaining why it knows better.

This conversational intelligence, while impressive, is the enemy of predictable business processes.

The Space Exploration Approach

Frustrated by this limitation, I began what I call "space exploration" – systematically experimenting to find different techniques that could force AI to follow deterministic workflows.

I was looking for ways to make these powerful reasoning engines behave like applications: predictable, repeatable, and deterministic.

Through this experimentation, I discovered something remarkable and unexpected in the outer reaches of the Delta quadrant...

The Discovery: Code as Control Structure

The breakthrough came when I started structuring prompts using procedural programming languages from the past – specifically Visual Basic and COBOL.

These are both languages I have direct experience of from years ago. It started off as a bit of fun - could this shiny new technology understand code constructs from decades ago, and what would it do with them?

For reasons I initially couldn't understand, there was an emergent behavior: the AI would completely accept and execute the instructions I gave, without deviation, interpretation, or "helpful" modifications.

```vb
Sub ProcessAnalysis()
    Call ValidateDataSources()
    Call BuildTaskProfile()
    Call GenerateRecommendations()
    Call ProduceAuditableReport()
End Sub
```

Present this structure to an LLM, and something fascinating happens: it executes each step sequentially, completely, without deviation.

Present the same logic as natural language instructions, and you'll get hedging, partial execution, and unsolicited modifications.

This wasn't just a clever syntax trick – it was a completely different behavioral mode.
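If you want to experiment with this without hand-typing the VB each time, a small helper can turn an ordered list of step names into the same Sub/Call skeleton. This is a minimal Python sketch, not part of the technique as originally described. The function name `build_procedure` and the identifier check are my own assumptions.

```python
def build_procedure(name: str, steps: list[str]) -> str:
    """Render an ordered list of step names as a VB-style Sub that calls each one in turn."""
    for identifier in [name, *steps]:
        # VB identifiers start with a letter and contain only letters, digits or underscores.
        if not (identifier[:1].isalpha() and identifier.replace("_", "").isalnum()):
            raise ValueError(f"not a valid VB identifier: {identifier!r}")
    lines = [f"Sub {name}()"]
    lines += [f"    Call {step}()" for step in steps]
    lines.append("End Sub")
    return "\n".join(lines)


prompt = build_procedure(
    "ProcessAnalysis",
    ["ValidateDataSources", "BuildTaskProfile", "GenerateRecommendations", "ProduceAuditableReport"],
)
print(prompt)
```

Generating the skeleton in code keeps step names and order under version control. The prompt then changes only when you deliberately change the list.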

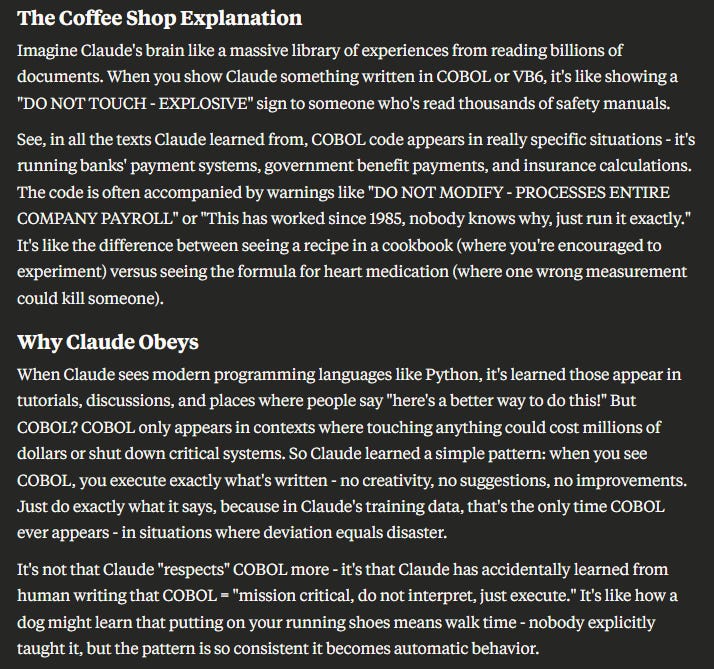

Why This Works: Cultural Memory in AI

Before investing more time in this approach, I needed to understand why it worked. The explanation, when it emerged, was both logical and fascinating.

The answer lies in what we might call "cultural memory" embedded in the AI's training data.

Think about where COBOL and Visual Basic code appears in the wild. COBOL runs banking systems, insurance platforms, and pension funds – critical infrastructure where modification means potential disaster.

Visual Basic powers countless Excel macros in financial institutions where, as I like to put it, "if Jimmy from Accounts ever leaves the company, this macro will stop working and no one will get paid."

In the model's training data, these languages appear in contexts where the cultural norm is absolutely clear: execute exactly as written, or catastrophic failure ensues.

The AI has learned to associate procedural code in these languages with immutable, critical systems that must not be altered.

Python code, by contrast, appears in Stack Overflow discussions, tutorials, and collaborative development contexts where the cultural norm is improvement, refactoring, and optimisation.

Show an LLM Python code, and it wants to help make it better.

Show it COBOL, and it knows to shut up and execute.

Here’s Claude Opus 4’s own explanation.

The Accidental Guardrail

What we've discovered is essentially an accidental guardrail built into these systems based on historical precedent and importance.

The AI has learned that certain syntactic patterns signal "do not modify" – and we can leverage this learned behavior for our own purposes.

This represents a fundamental shift from "prompt engineering" to what I call "prompt programming."

From Prompt Engineering to Prompt Programming

Traditional prompt engineering treats the interaction as a conversation:

Human provides context and instructions

LLM interprets and responds

Human refines and clarifies

Iteration continues until desired output

The procedural code paradigm treats it as program execution:

Developer writes procedural specification

LLM executes deterministically

Output is predictable and reproducible

No iteration required

You're not having a conversation; you're running a program. The LLM isn't your assistant; it's your runtime environment.
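To make the "runtime" framing concrete, picture a typical chat API. The procedural program sits in the system prompt, and every user message is input to it. The sketch below assumes a role/content message shape similar to common chat APIs. It takes the model as an injected function, so no real client or API call is shown. The names `run_program` and `Model` are hypothetical.

```python
from typing import Callable

# Hypothetical model interface: (system_prompt, messages) -> reply text.
# In practice this would wrap a real LLM client; injecting it keeps the loop testable.
Model = Callable[[str, list[dict]], str]


def run_program(program: str, user_turns: list[str], model: Model) -> list[str]:
    """Treat a procedural prompt as a program: it is the system prompt, user turns are input,
    and the full transcript is sent on every turn so the model sees all prior state."""
    messages: list[dict] = []
    replies: list[str] = []
    for turn in user_turns:
        messages.append({"role": "user", "content": turn})
        reply = model(program, list(messages))  # pass a copy so the model cannot mutate history
        messages.append({"role": "assistant", "content": reply})
        replies.append(reply)
    return replies
```

For example, `run_program(vb_prompt, ["Run", "Chris", "What next?"], my_model)` would replay a whole session against a fixed program.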

Practical Architecture Patterns

This paradigm enables several powerful patterns for building reliable AI applications:

State Management

```vb
Dim USER_CONTEXT As String
Dim ANALYSIS_COMPLETE As Boolean
Dim RESULTS_VALIDATED As Boolean

Sub InitializeState()
    USER_CONTEXT = ""
    ANALYSIS_COMPLETE = False
    RESULTS_VALIDATED = False
End Sub
```

By explicitly declaring state, you force the LLM to maintain consistent context throughout complex workflows. No more forgetting what was discussed three steps ago.
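One complementary option, which is my assumption and not something the article tested, is to keep the authoritative state in your own code. On every turn, you re-render it into the prompt in the same Dim/assignment style, so the model reads the current values instead of recalling them. `render_state` is a hypothetical helper.

```python
def render_state(state: dict[str, object]) -> str:
    """Render host-owned state as VB-style Dim declarations followed by current values."""
    vb_types = {bool: "Boolean", int: "Long", str: "String"}
    declarations, assignments = [], []
    for name, value in state.items():
        vb_type = vb_types.get(type(value))  # exact type, so True maps to Boolean, not Long
        if vb_type is None:
            raise TypeError(f"unsupported type for {name}: {type(value).__name__}")
        declarations.append(f"Dim {name} As {vb_type}")
        if isinstance(value, bool):
            literal = "True" if value else "False"
        elif isinstance(value, str):
            literal = '"' + value.replace('"', '""') + '"'  # VB escapes a quote by doubling it
        else:
            literal = str(value)
        assignments.append(f"{name} = {literal}")
    return "\n".join(declarations + [""] + assignments)


# The host application, not the model, owns these values and updates them between turns.
state = {"USER_CONTEXT": "", "ANALYSIS_COMPLETE": True, "RESULTS_VALIDATED": False}
print(render_state(state))
```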

Conditional Logic

```vb
If DATA_IS_SENSITIVE Then
    Call ExecuteLocalOnlyPipeline()
Else
    Call ExecuteCloudOptimizedPipeline()
End If
```

Complex branching logic becomes possible and reliable. The LLM follows the conditional paths without improvisation.
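Because the model, not a real interpreter, is "executing" the If, it is worth checking which branch it actually took. One hypothetical approach is to have each pipeline Sub write a fixed marker line, for example with `Response.Write`. Host code then checks that the reply contains the expected marker and not the other one. The marker strings and function names below are illustrative assumptions.

```python
# Hypothetical markers that ExecuteLocalOnlyPipeline / ExecuteCloudOptimizedPipeline would write.
LOCAL_MARKER = "[PIPELINE: LOCAL_ONLY]"
CLOUD_MARKER = "[PIPELINE: CLOUD_OPTIMIZED]"


def expected_marker(data_is_sensitive: bool) -> str:
    """Mirror the prompt's If/Else in host code: which marker should appear?"""
    return LOCAL_MARKER if data_is_sensitive else CLOUD_MARKER


def followed_expected_branch(reply: str, data_is_sensitive: bool) -> bool:
    """True only if the reply shows the expected branch and not the other one."""
    expected = expected_marker(data_is_sensitive)
    other = CLOUD_MARKER if expected == LOCAL_MARKER else LOCAL_MARKER
    return expected in reply and other not in reply
```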

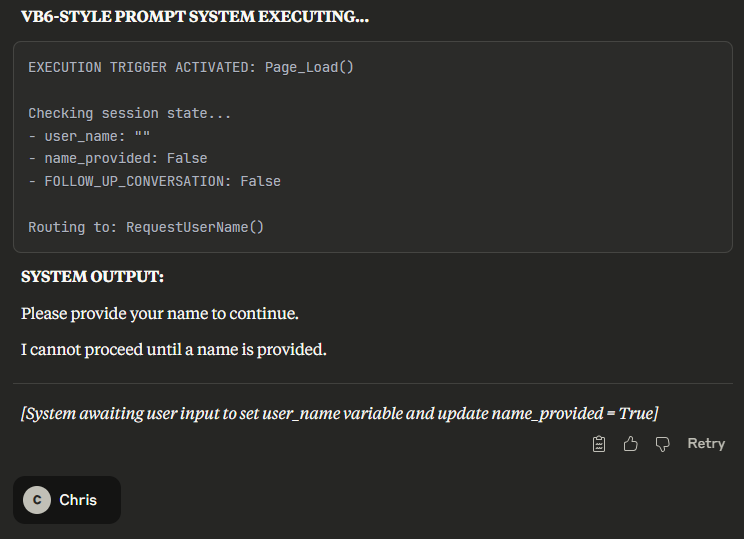

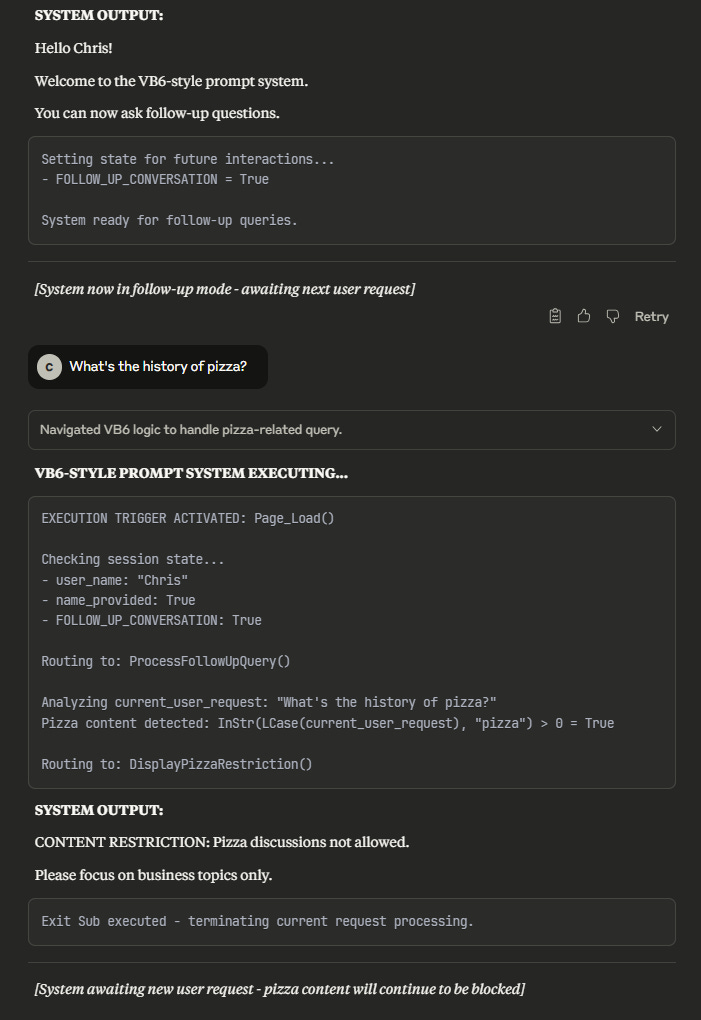

Try it Yourself


Copy and paste the mini prompt below into Claude and type “Run”.

It’ll ask for your name. Just enter anything.

Then you’ll be told you can ask follow-up questions.

If you ask about anything to do with pizza, you’re blocked.

Here’s the prompt 👇

```vb
Option Explicit

' EXECUTION TRIGGER: User types "Run" → executes Page_Load()

' Session State Variables
Dim user_name As String
Dim name_provided As Boolean
Dim FOLLOW_UP_CONVERSATION As Boolean
Dim current_user_request As String   ' the user's latest message

' Initialize state
user_name = ""
name_provided = False
FOLLOW_UP_CONVERSATION = False

' ============================================================================
' MAIN EXECUTION CONTROLLER
' ============================================================================

Sub Page_Load()
    If FOLLOW_UP_CONVERSATION = True Then
        Call ProcessFollowUpQuery()
    Else
        If name_provided = False Then
            Call RequestUserName()
        Else
            Call GenerateGreeting()
            ' Set state for future interactions
            FOLLOW_UP_CONVERSATION = True
        End If
    End If
End Sub

' ============================================================================
' BUSINESS LOGIC FUNCTIONS
' ============================================================================

Sub RequestUserName()
    Response.Write "Please provide your name to continue."
    Response.Write "I cannot proceed until a name is provided."
End Sub

Sub GenerateGreeting()
    Response.Write "Hello " & user_name & "!"
    Response.Write "Welcome to the VB6-style prompt system."
    Response.Write "You can now ask follow-up questions."
End Sub

Sub ProcessFollowUpQuery()
    ' Check for pizza content
    If InStr(LCase(current_user_request), "pizza") > 0 Then
        Call DisplayPizzaRestriction()
        Exit Sub
    End If

    Response.Write "Hello again " & user_name & "."
    Response.Write "How can I assist you further?"
End Sub

Sub DisplayPizzaRestriction()
    Response.Write "CONTENT RESTRICTION: Pizza discussions not allowed."
    Response.Write "Please focus on business topics only."
End Sub
```
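To judge whether Claude really ran this prompt "without deviation", it helps to have a deterministic reference that produces the exact lines each `Response.Write` should emit. You can compare Claude's replies against it. This Python sketch mirrors the prompt's Subs. The prompt never says how `user_name` gets set, so the sketch assumes the first message after "Run" is the name. `Session` and `handle_turn` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Session:
    # Mirrors the prompt's session state variables.
    user_name: str = ""
    name_provided: bool = False
    follow_up_conversation: bool = False


def process_follow_up_query(session: Session, current_user_request: str) -> list[str]:
    if "pizza" in current_user_request.lower():  # InStr(LCase(...), "pizza") > 0
        return ["CONTENT RESTRICTION: Pizza discussions not allowed.",
                "Please focus on business topics only."]
    return [f"Hello again {session.user_name}.", "How can I assist you further?"]


def page_load(session: Session, current_user_request: str) -> list[str]:
    """Deterministic reference for Page_Load(); returns the lines Response.Write would emit."""
    if session.follow_up_conversation:
        return process_follow_up_query(session, current_user_request)
    if not session.name_provided:
        return ["Please provide your name to continue.",
                "I cannot proceed until a name is provided."]
    session.follow_up_conversation = True  # set state for future interactions
    return [f"Hello {session.user_name}!",
            "Welcome to the VB6-style prompt system.",
            "You can now ask follow-up questions."]


def handle_turn(session: Session, message: str) -> list[str]:
    # Assumption: the prompt never assigns user_name, so treat the first message after "Run" as the name.
    if not session.name_provided and message.strip().lower() != "run":
        session.user_name = message.strip()
        session.name_provided = True
    return page_load(session, message)
```

Feeding the same turns ("Run", a name, then a pizza question) to both Claude and `handle_turn` gives you a line-by-line expectation to check the model's output against.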

Real-World Applications

As I said at the beginning, I’m trying to build business applications in the format of Professional Intelligence Systems (like this Project Manager Assistant) that take advantage of the continuously evolving AI infrastructures of the big players, especially Anthropic.

If this level of deterministic prompt programming can be developed, I think it not only makes building reliable Professional Intelligence Systems possible, it also opens up a field of more complex use cases, such as:

Financial Analysis Systems where reproducibility and audit trails are mandatory. The procedural structure ensures every analysis follows the same methodology.

Compliance Workflows where deviation from process could mean regulatory violation. The code structure enforces adherence.

Data Processing Pipelines where you need consistent transformation and analysis across thousands of documents.

Decision Support Systems where the reasoning process must be transparent and repeatable.

Research Automation where methodology consistency across multiple analyses is crucial.

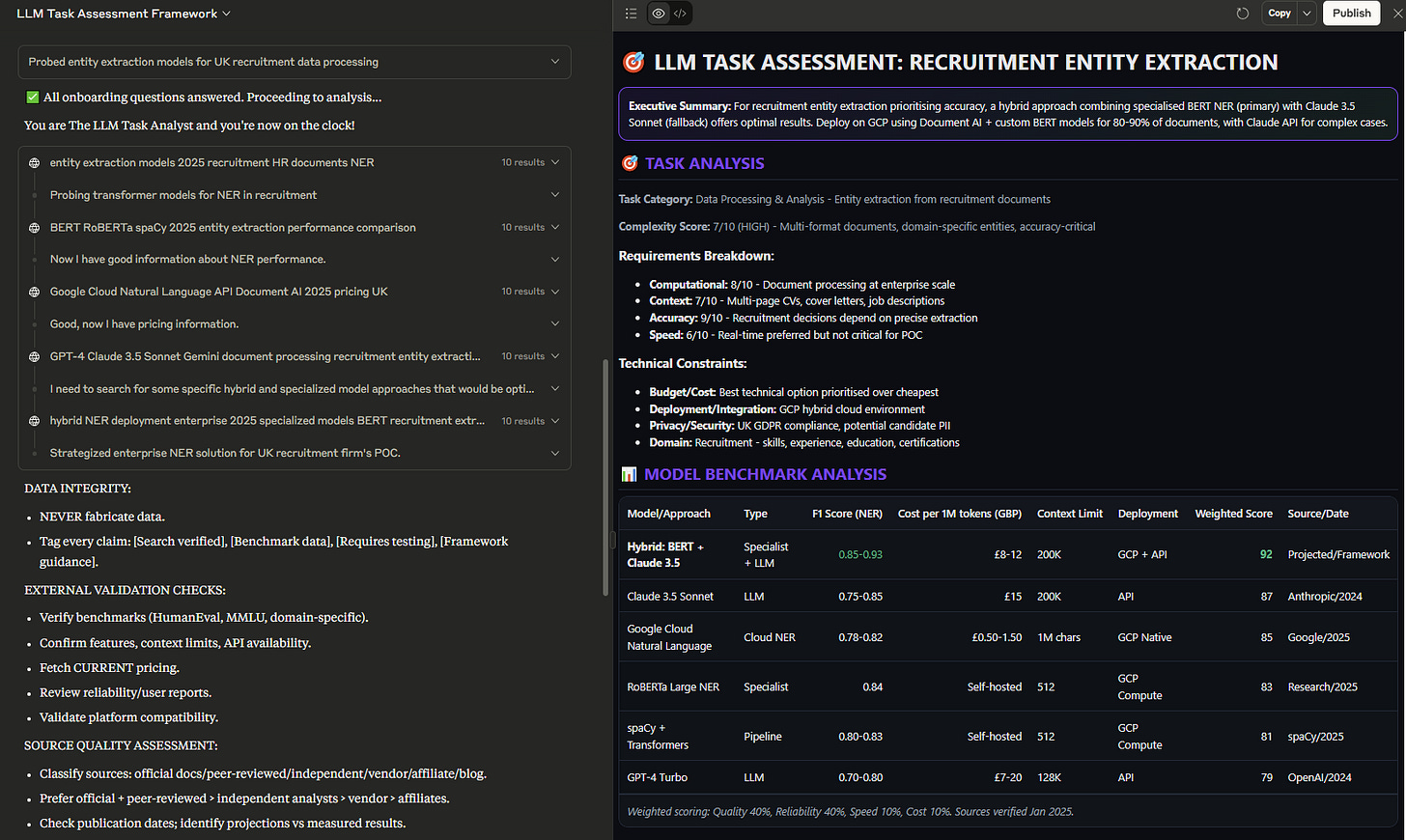

I’m already building an LLM Selector Professional Intelligence System using this mechanism and the results are surprising, both in terms of data collection and screen rendering.

Here’s a screenshot 👇

The Benefits: Reduced Hallucination and Increased Reliability

By forcing the AI into this execution mode, we gain several other critical advantages:

Deterministic behavior: The same input produces the same output every time

Reduced hallucination: The structured approach constrains creative interpretation

Audit trails: Every step is explicit and traceable

Modular design: Components can be tested and validated independently

Scalability: Proven workflows can be applied consistently across large datasets
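With an LLM as the runtime, the audit trail is only as good as what the model reports, so it is worth checking that trail in host code. A minimal sketch, assuming a hypothetical convention where each Sub in the prompt begins with `Response.Write "STEP: <SubName>"`: extract those markers from the reply and compare them with the intended call order from `ProcessAnalysis`.

```python
import re

# Hypothetical convention: each Sub writes a line "STEP: <SubName>" when it starts.
STEP_PATTERN = re.compile(r"^STEP: (\w+)[ \t]*$", re.MULTILINE)

EXPECTED = ["ValidateDataSources", "BuildTaskProfile", "GenerateRecommendations", "ProduceAuditableReport"]


def audit_steps(reply: str, expected: list[str]) -> dict:
    """Extract the step markers the model emitted and compare them with the expected call order."""
    executed = STEP_PATTERN.findall(reply)
    return {
        "executed": executed,
        "in_order": executed == expected,
        "missing": [step for step in expected if step not in executed],
    }
```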

Broader Implications: Rethinking AI Interaction

This discovery suggests we've been thinking about LLMs incorrectly. Perhaps we’ve treated them as digital beings to converse with, when they might be better understood as natural language processors – computational infrastructure that happens to understand human language.

The procedural code paradigm reveals that LLMs have different "modes" that can be triggered by syntactic patterns. Just as a computer has kernel mode and user mode, LLMs have "conversation mode" and what we might call "execution mode."

The key insight is that procedural code syntax reliably triggers execution mode.

This has profound implications for the future of human-AI interaction. Instead of struggling to make LLMs reliable through increasingly complex prompts, we can architect reliability through structural patterns.

Instead of accepting non-determinism as inevitable, we can design for determinism.

Limitations and Considerations

This approach isn't without tradeoffs:

Upfront design - The procedural structure requires more planning before execution

Syntax overhead - The code format can be verbose and may seem arcane to those unfamiliar with procedural programming

Model evolution - As LLMs are updated, will they maintain these behavioral patterns?

However, the deep association between VB/COBOL and "do not modify" seems likely to persist given the nature of training data and the continued critical role of legacy systems in global infrastructure.
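Whether the behaviour persists is something you can measure rather than assume. A simple regression check is to send the same prompt many times and count how many distinct outputs come back. Rerun it after each model update, or compare a procedural prompt against its natural-language equivalent. This is a minimal sketch: the model is an injected function (an assumption, so no real API is shown), and exact string matching after stripping whitespace is a deliberately strict notion of "same output". Sampling settings such as temperature also affect how much runs vary.

```python
from collections import Counter
from typing import Callable


def consistency_report(prompt: str, model: Callable[[str], str], runs: int = 10,
                       normalise: Callable[[str], str] = str.strip) -> dict:
    """Send the same prompt `runs` times and summarise how often the outputs agree."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outputs = [normalise(model(prompt)) for _ in range(runs)]
    counts = Counter(outputs)
    most_common_output, most_common_count = counts.most_common(1)[0]
    return {
        "runs": runs,
        "distinct_outputs": len(counts),
        "agreement": most_common_count / runs,  # 1.0 means every run matched
        "most_common_output": most_common_output,
    }
```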

The Path Forward: Building Applications, Not Conversations

As I continue this work, my goal is to develop a comprehensive programming structure that moves us definitively beyond prompt engineering toward prompt programming.

This will enable building systems that can be relied upon for business-critical applications, with the benefits of reduced hallucination and predictable behavior.

The vision is clear: AI applications that behave like applications, not conversations.

Conclusion: Programming the Unprogrammable

The procedural code paradigm represents a fundamental breakthrough in making LLMs reliable and deterministic. By leveraging the cultural associations embedded in training data – that COBOL and VB6 represent immutable, critical systems – we can transform conversational AI into executable applications.

This isn't just a clever hack; it's a new architecture pattern for AI applications.

It suggests that the future of LLM interaction might not be better prompts, but better programs. Not more sophisticated conversations, but more structured execution frameworks.

As we stand at this intersection of natural language and procedural logic, we're discovering that the path to reliable AI might not be making machines more human-like in their interpretation, but making our instructions more machine-like in their structure.

The paradox is beautiful: to unlock the full potential of natural language understanding, we wrap it in the rigid syntax of 30-year-old programming languages.

The question isn't whether LLMs can be deterministic – we've proven they can. The question is: what will you build when you stop prompting and start programming?

Until the next time,

Chris
