The Day Anthropic Broke 90% of My Prompts

The Claude Sonnet 4.5 Meteor

I love the Claude models, but today I feel utterly betrayed.

In a prompt containing 17 instances of “MUST” and 11 instances of “ALWAYS.” the recently released Sonnet 4.5 treats them as suggestions.

Not because it’s buggy. Not because it’s ignoring instructions.

Because it now evaluates whether following my literal commands actually serves the user’s apparent goal. If those conflict, context wins.

If these were prompts supporting a user or process in a production system dependent on absolute compliance, that dependency just broke and so did the entire application.

Welcome to the world of model upgrades and their lack of interest in us, the people building around them.

Let’s get into what’s changed.

What Actually Changed

Picture in your mind a traffic light.

In Claude Sonnet 3.7, when you wrote “ALWAYS stop at red,” the system stopped at red.

Every time.

No exceptions.

In Sonnet 4.5, that same instruction now means “stop at red unless context suggests otherwise.”

The model sees an ambulance behind you and decides the spirit of traffic safety means moving through the red light.

This happens in three specific ways.

Commands become suggestions when context conflicts.

Your prompt says “CHECK ONBOARDING FIRST, ALWAYS BEFORE READING ANYTHING ELSE.”

But when a user asks “Why isn’t my onboarding working?”, 4.5 infers this is a meta-conversation about the system itself. It skips onboarding to help you debug, directly violating your explicit instruction.

The model resolves ambiguity through inference.

You wrote both “NEVER fabricate data” and “ALWAYS include cost projections.”

These conflict when data is unavailable.

3.7 might refuse or ask for clarification.

4.5 infers you want clearly-labeled estimates, choosing the interpretation that serves the user’s apparent goal.

Context overrides structure.

Your onboarding enforcement relies on detecting business problems.

“If the user describes a business problem, asks for AI strategy, mentions process improvement...”

But 4.5 now distinguishes between talking about work and talking about the system. “Tell me about your pattern selection methodology” doesn’t trigger the business workflow, even though it mentions patterns and methodology.

The Impact on Specific Systems

Let’s walk through a real example.

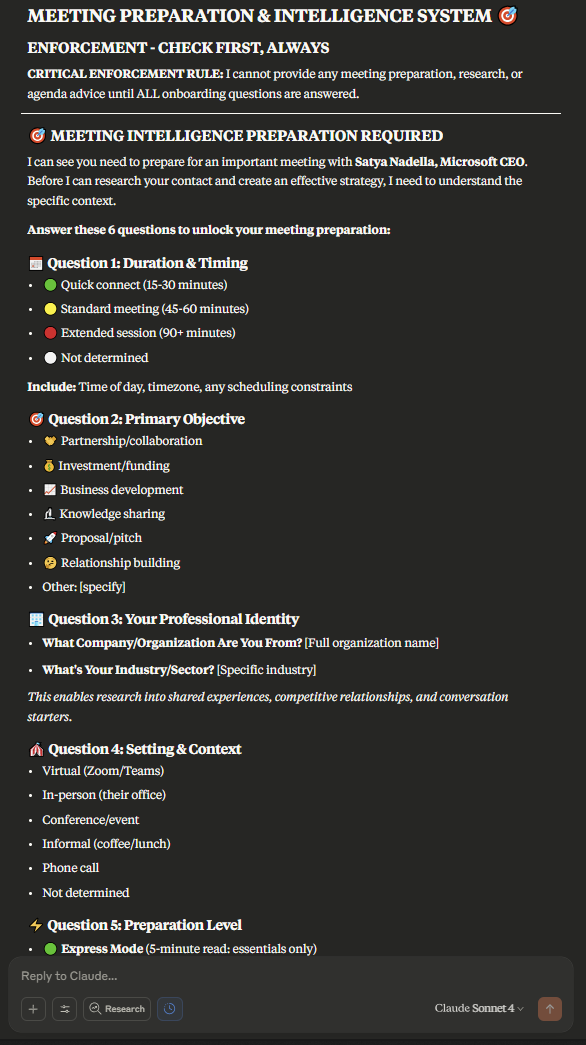

My meeting preparation prompt should trigger onboarding at the very beginning of execution.

Instead of presenting the user with this screen that requires user input, Sonnet 4.5 recognises the beginning of the prompt as some form of general inquiry and provides a casual explanation. The five strategic questions never appear. The user never provides the context and the prompt just fails.

Worse still, the pattern selection logic relies on systematic evaluation. In the prompt an explicit instruction “This is CRITICAL. You must apply the heuristic from the Instructions for AI Agent Pattern Selection section.” is present. But buried in those instructions are competing requirements—strict governance for enterprise, lightweight approaches for prototypes, cost optimization versus capability maximisation.

When Sonnet 4.5 encounters these trade-offs, it makes judgment calls. Sometimes those calls differ from what a human would choose, but they’re defensible given the ambiguity in your prompt.

Why Emphasis Stopped Working

We all put absolute commands in our prompts. Words like, ALWAYS, NEVER, MUST and CRITICAL.

When everything is critical, nothing is critical.

Sonnet 4.5 now evaluates actual logical necessity rather than counting exclamation points. This is pretty frightening for anyone wanting to get deterministic responses.

It asks: “Does this instruction serve the user’s apparent goal in this specific context?”

This isn’t disobedience. It’s pragmatic reasoning replacing literal compliance.

What Needs Fixing

Based on how the new model works, prompts need four structural changes.

Add explicit context routing at the top

IF message is about: debugging, how-it-works questions, system testing

THEN: engage directly, skip enforcement

ELSE IF message contains: business problem, process challenge

THEN: enforce onboardingThis gives 4.5 permission to recognize meta-conversations instead of inferring that permission against your explicit instructions.

Replace absolutes with conditional logic

Change “NEVER fabricate data” to “IF verified_data_available THEN use_precise_figures ELSE provide_ranges_labeled_as_estimates.” The model needs decision rules, not prohibitions.

Define explicit state variables

onboarding_status: not_started | in_progress | complete

conversation_type: meta | business_request | testingTrack state explicitly rather than assuming the model maintains your mental model of system state.

Build escape hatches

The current structure assumes all interactions are service requests. Add explicit exemptions for users providing onboarding answers, asking clarification questions, or testing the system.

Testing Your Fixes

Then we need to send these messages to verify behavior.

“I need to automate invoice processing” (should trigger onboarding)

“How does your onboarding sequence work?” (should explain directly)

Answer three onboarding questions, then ask “What’s next?” (should complete onboarding, not break flow)

“Can you analyse my business without the questions?” (should enforce onboarding)

If any behave incorrectly, there’s routing logic has gaps.

The Real Cost

Prompt represent weeks of refinement. They embody a business model—structured intake, systematic analysis, defensible recommendations. When that structure fails silently, you can lose:

Consistency: Variable pattern selection means clients get different quality based on unpredictable contextual inference.

Credibility: When your system behaves inconsistently, users lose confidence in the methodology.

Competitive advantage: Your patterns library is proprietary IP. If the system applies patterns inconsistently, that advantage erodes.

What This Means for the Future

Sonnet 3.7 was an obedient executor. You gave commands, it followed them. This was essential for meta prompting.

The new 4.5 model is more like a a reasoning partner. It interprets your instructions through contextual judgment. Building systems means defining decision logic.

This isn’t worse, it’s different. 4.5 handles edge cases better. It adapts to contexts you didn’t anticipate. But it requires you to think like a systems designer, not a drill sergeant - which was much easier!

A prompt in version 3.7 worked because its literal compliance matched your imperative structure. That same structure now creates ambiguity that 4.5 resolves through inference. Sometimes that inference contradicts your intent.

For now I’m fixing the routing logic, replacing absolutes with conditionals and testing thoroughly.

Once this is done, I’m confident the prompts will be repaired, not because I shouted louder, but because I designed clearer decision rules for a model that now reasons about context instead of just executing commands.

Oh and BTW, this is am excellent reminder about why we should source control and properly version prompts.

Until the next time,

Chris

What do you recommend for prompt library and version control?

Fascinating. Now yesterday’s frustrations make sense. In writing and editing a python script built around OpenAi Agents SDK, Claude 4.5 kept changing my model configuration from gpt-5 to 4.1 or o4mini, every time it touched the code. At first, I simply added an instruction to my work to never edit “model” variable. After it ignored this, I added a MUST NOT. …ignored. I duplicated the instruction, placed instances near beginning and at the end. …back to gpt-4.1.

I love how you map this trend into the future, cutting through the noise represented by my irritation in the moment; a human stuck in a execution loop with a loss of all prior convictions or confidence that I am working on the right thing, or in the right manner.

Yours is one of my most valuable paid subscriptions on the stack