Claude Desktop Just Replaced My Monday Planning Session

What Changes When Conversational AI Becomes a Tool and Not a Toy

Here’s the most common question I get about using AI for actual work: “I tried having Claude help me plan my workload, and it gave me really smart suggestions, but then I still had to spend an hour actually implementing everything. What’s the point?”

That question reveals the fundamental problem with how most people are using AI right now. They’re stuck at the insight layer. They get helpful analysis, smart recommendations and clever reorganisations of their task lists, but the AI can’t actually touch their work systems (not without paying for access to an automation service).

It can’t book the meeting.

It can’t draft the email in Gmail.

It can’t create the calendar block.

So you end up with a sophisticated advice-giver that makes you feel briefly organised, then leaves you with all the tedious implementation work. Maybe that work now takes you 90 minutes instead of 2 hours.

Marginally useful. Definitely not transformative.

The breakthrough isn’t better AI or smarter prompts. It’s crossing a specific architectural threshold that most people haven’t recognised yet: moving from AI that provides intelligence to AI that executes structured actions against your actual work systems.

Let’s get into it.

The Conversational AI Ceiling

Here’s what conversational AI does exceptionally well: I can paste my messy TODO list into Claude and ask “help me prioritise this week.”

Within seconds, I get intelligent analysis—which tasks (it thinks) are urgent, which require deep focus, which are just admin overhead.

It’s genuinely helpful. I nod along. I feel more organised.

Then I close the chat and... nothing has actually changed.

My calendar is still empty.

My emails aren’t drafted.

My Notion workspace doesn’t know about any of this planning.

The insight evaporates the moment I switch contexts to actually implement anything.

This is the toy problem: conversational AI gives you intelligence without execution. It’s like having a brilliant consultant who can’t actually touch your systems.

What Changes at the Tool Threshold

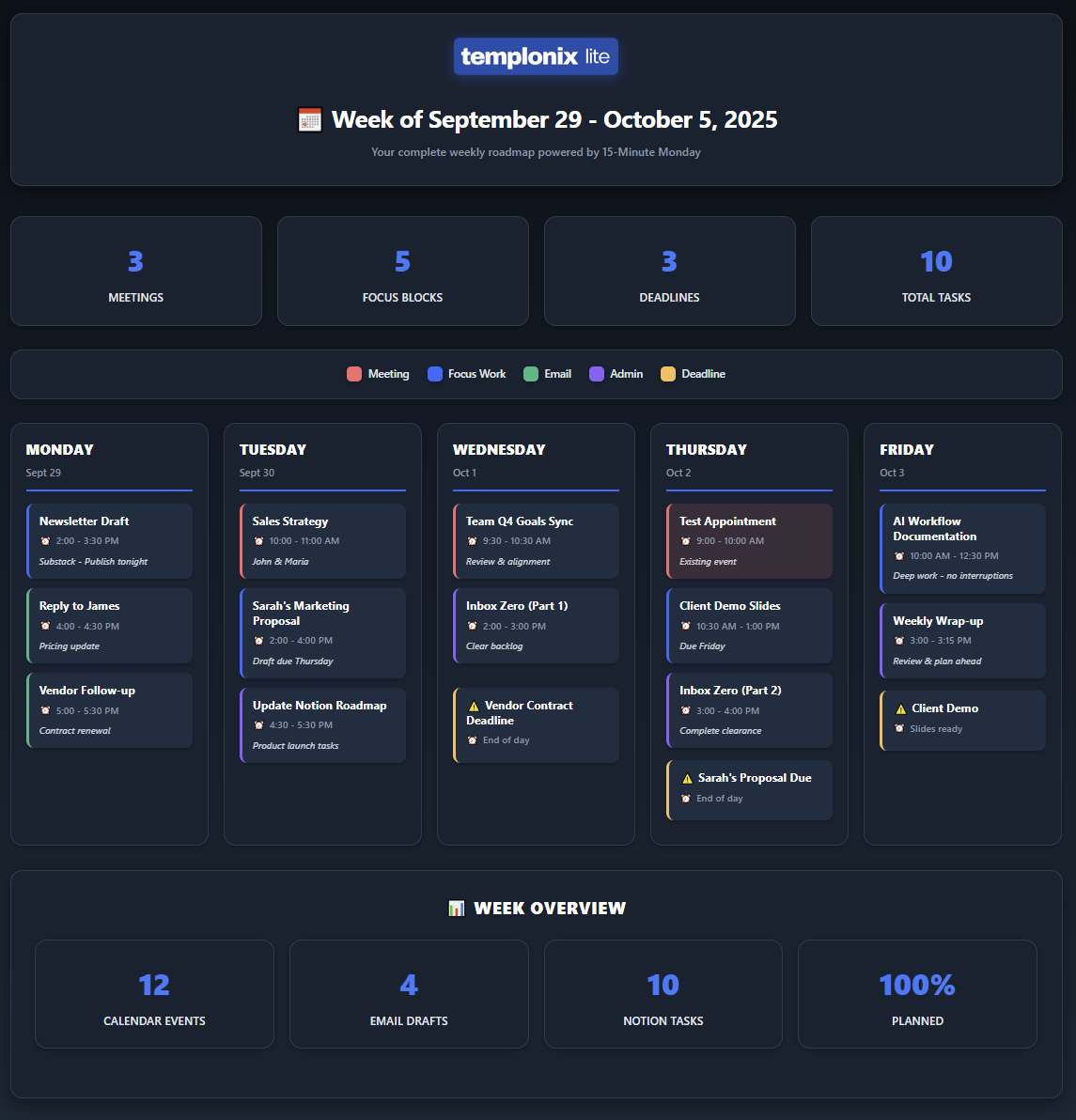

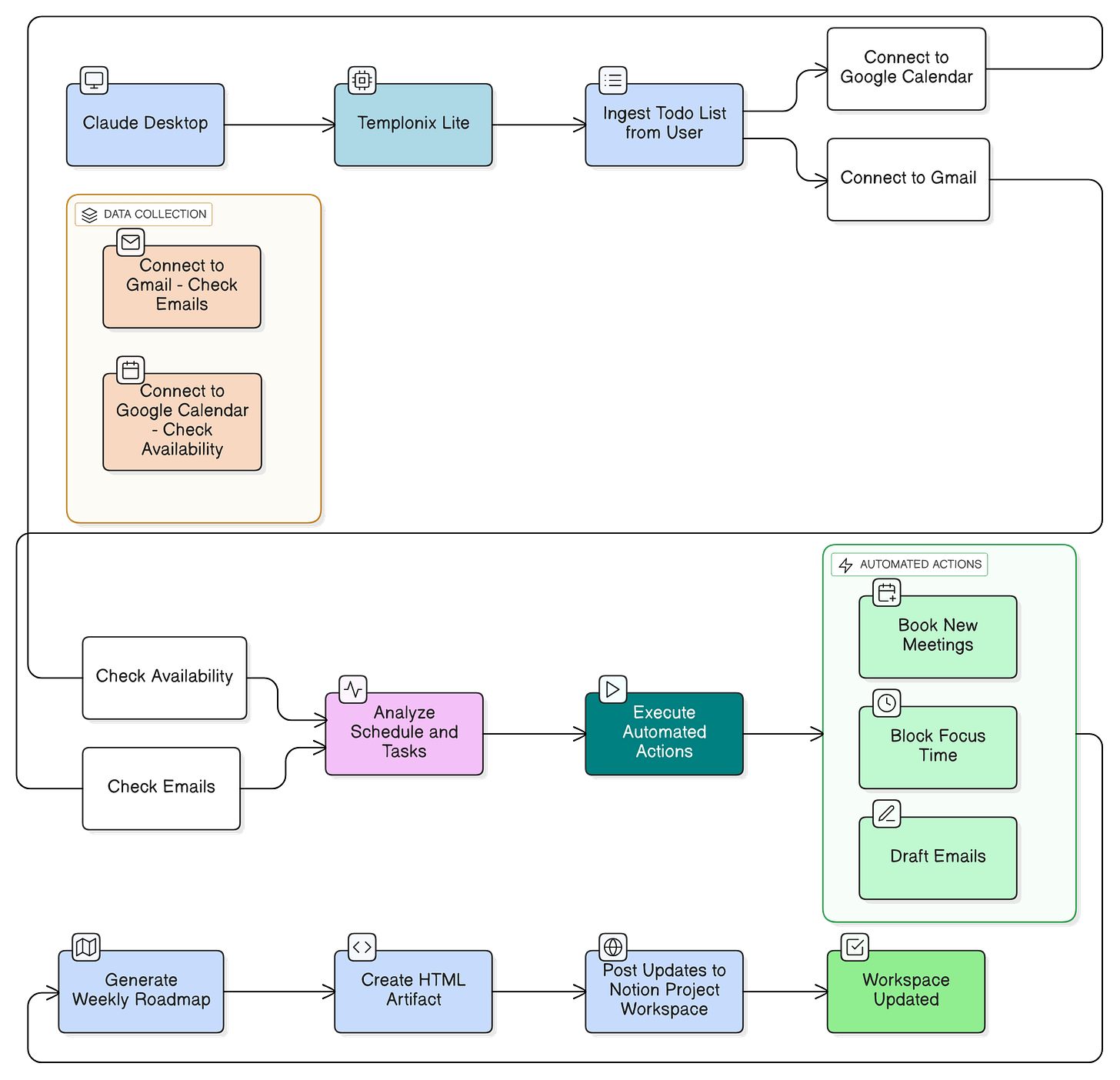

Since mid-summer, I’ve been building Templonix Lite (still experimental, still in development), a locally deployed AI solution that uses Claude Desktop and the Model Context Protocol (MCP).

The goal?

To see whether I can combine workflow prompts and tools in a way that makes as much use of existing infrastructure as possible (which means no additional costs), whilst making the user genuinely more productive.

This week, I tested a specific workflow I’ve been developing.

Instead of Claude just analysing my TODO list, I gave it the ability to:

Check my actual Google Calendar for conflicts

Add appointments/focus time to my calendar for me

Draft emails in my actual Gmail

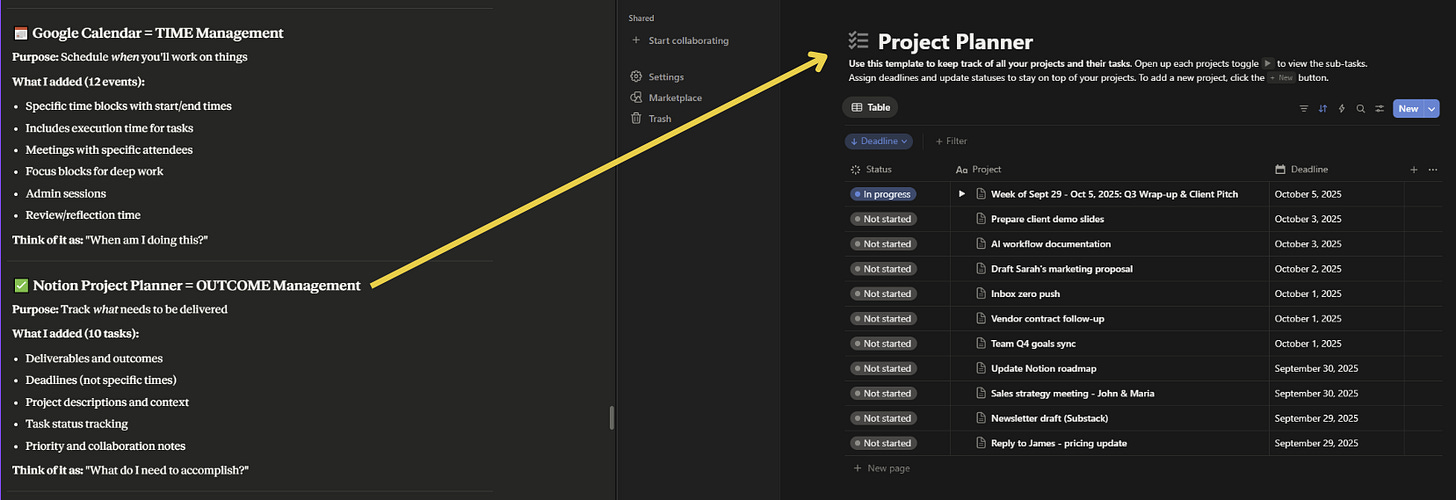

Create tasks in my actual Notion workspace

Generate a visual roadmap I could reference all week

The intelligence was the same.

The conversation was similar.

But the outcome was fundamentally different because the AI could touch my actual work systems.

The 15-Minute Experiment

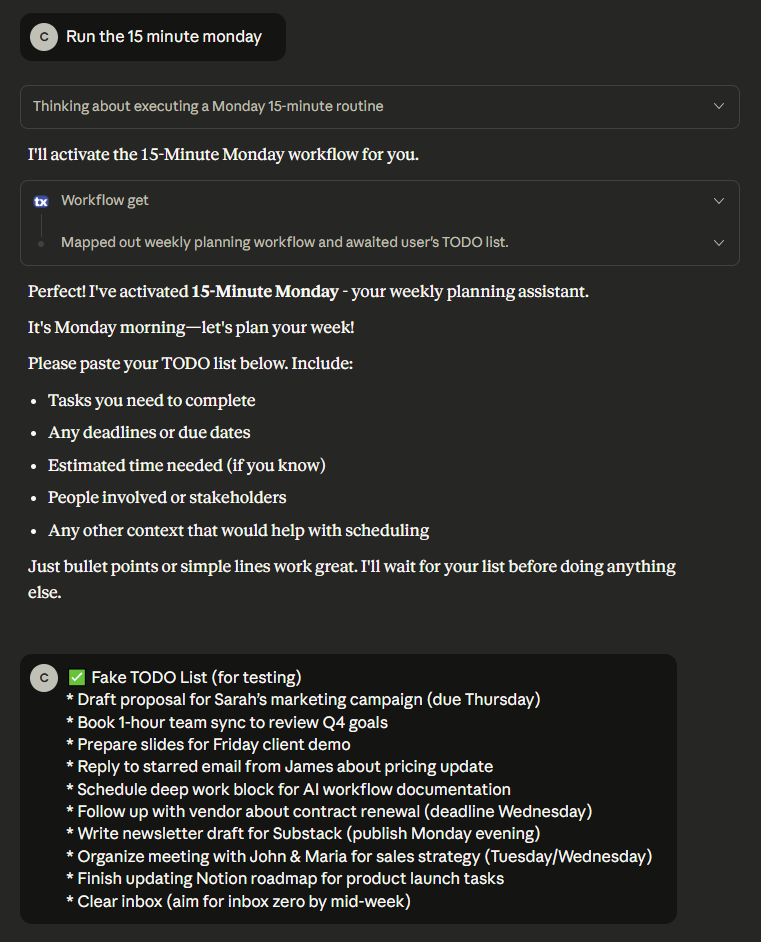

I dumped my raw TODO list into Claude—ten items, no structure, no priorities.

I said “Run the 15 minute monday” (a workflow I’ve been testing).

What happened next revealed something important about the boundary between AI-as-toy and AI-as-tool.

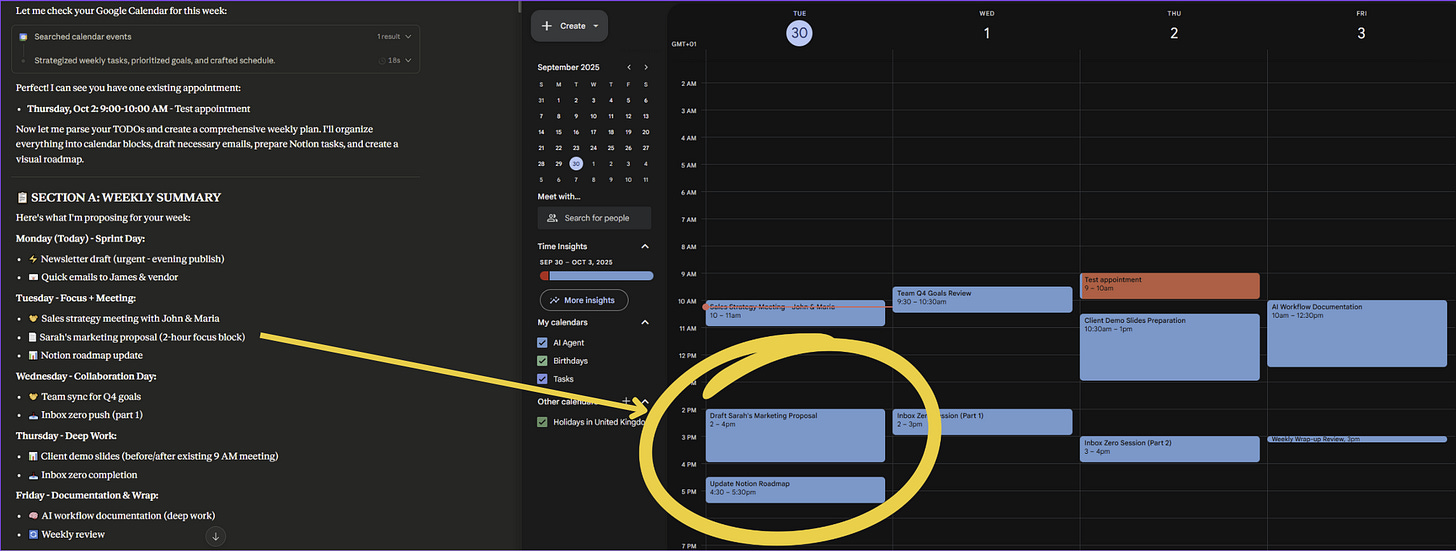

The conversational part took about 2 minutes. Claude analysed the list, identified meetings versus focus work versus emails, noted deadlines, understood dependencies. This is what AI does well.

The execution part took another 5 minutes while Claude called the calendar, Gmail and Notion API endpoints.

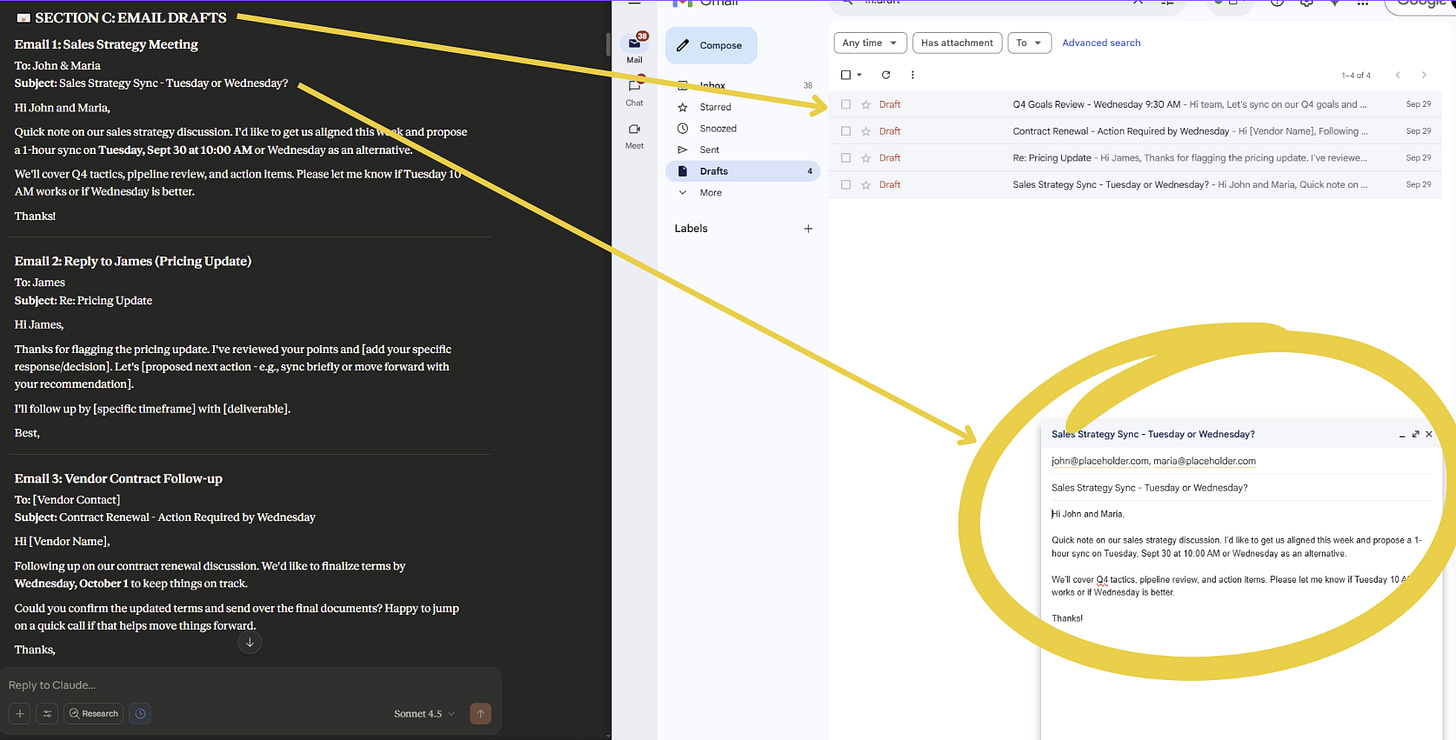

Claude created 12 calendar events.

Wrote and saved 4 email drafts to Gmail.

Added 10 tasks to Notion with full context.

And generated a dashboard.

This is what makes all the difference.

The difference wasn’t smarter AI.

It was AI with structured workflows and system access.

What This Reveals About When to Use AI

Building this experiment has clarified something I hadn’t articulated before: there are really two questions about AI utility that people conflate.

When should I use AI?

When the bottleneck is intelligence, not execution.

If you’re stuck on “what should I prioritise,” conversational AI helps immediately.

If you’re stuck on “I need to actually schedule these meetings,” conversational AI just gives you more thinking to do.

What should AI actually do?

Not everything.

Not autonomously.

The workflow I built doesn’t make decisions about what goes on my calendar—it executes the decisions I’ve already made by translating my TODO list into scheduled blocks, drafted communications, and tracked tasks.

The AI handles the tedious translation work between “here’s what needs to happen” and “here’s when/how it will happen.”

That’s the zone where tools become valuable.

The Architecture That Makes This Work

I’m still experimenting with this, but here’s what seems to matter.

Structured workflows instead of open-ended conversations

When I say “run the 15 minute monday,” Claude knows exactly what sequence of steps to execute. It’s not improvising; it’s following a pattern I’ve defined.

It also knows which tools to use. This is key. When you have a workflow prompt that contains the rules and templates to follow for a process, you only need to give the AI the minimal instruction to kick that process off.

Read-write access to actual systems

The breakthrough isn’t that Claude can read my calendar—it’s that it can write to it. Create events. Draft emails. Add tasks.

The intelligence becomes useful when it can take action.

Human approval before execution

The workflow shows me everything it plans to do—all 12 calendar events, all 4 email drafts, all 10 Notion tasks.

I review and approve before anything touches my real systems.

This is critical.

The AI isn’t autonomous; it’s accelerating execution of my decisions.
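The approval gate can be expressed as a simple plan-then-execute pattern. A minimal sketch, assuming each proposed action carries a callable that performs the real write; the `Action` class and `review_and_execute` function are hypothetical names, and the writes here are stand-ins rather than real calendar, Gmail or Notion calls.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Action:
    system: str
    description: str
    run: Callable[[], str]   # performs the real write; a stand-in here


def review_and_execute(plan: list[Action],
                       approve: Callable[[list[Action]], bool]) -> list[str]:
    """Show every proposed action, then execute all of them only if approved."""
    for i, action in enumerate(plan, start=1):
        print(f"{i:>2}. [{action.system}] {action.description}")
    if not approve(plan):
        return []   # rejected: nothing touches the real systems
    return [action.run() for action in plan]


plan = [
    Action("calendar", "Focus block: quarterly report", lambda: "event created"),
    Action("gmail", "Draft: reply to supplier", lambda: "draft saved"),
]
results = review_and_execute(plan, approve=lambda p: True)
print(results)
```

Interactively, `approve` could be something like `lambda p: input("Execute all? [y/N] ").strip().lower() == "y"`. This sketch approves the whole plan at once; a per-action approval loop is an equally valid variation.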

The bonus

All this runs from my laptop.

There are no calls to third-party automation services in here. No Zapier. No n8n. No additional costs.

Why This Matters Beyond My Specific Setup

I’m building Templonix Lite precisely because I think this architectural pattern matters more than the specific implementation. You don’t need my exact workflow to benefit from understanding the principle.

The insight is this: conversational AI hits a ceiling when conversation is all it can do. The value multiplies when you give it structured workflows and controlled system access. Not because it makes better decisions, but because it eliminates the friction between decision and execution.

Most people are still using AI the way they use search engines—ask question, get answer, manually implement.

That’s fine for many use cases.

But there’s an entirely different utility curve when you move from “AI that explains” to “AI that executes.”

What I’m Learning By Building This

The Monday morning experiment worked better than expected. I planned the week in well under 15 minutes, instead of spreading the planning across the usual scattered sessions.

But more importantly, it revealed which parts of the workflow actually benefit from AI execution versus which parts still need human judgment.

AI should handle: checking calendar conflicts, drafting standard emails, creating task structures, generating visual overviews. These are tedious but largely mechanical.

I should handle: deciding what’s actually important, approving the plan before execution, customising communications, evaluating whether the system got it right.

This boundary between AI-appropriate tasks and human-appropriate tasks only became clear by actually building the workflow and using it. Reading about it wouldn’t have revealed the same insights.

If you’re using Claude or ChatGPT purely conversationally, you’re probably experiencing the same ceiling I hit. Great insights, helpful analysis, but everything still requires manual implementation.

The question worth asking isn’t “how do I get better AI answers?”

It’s “what would change if my AI could actually touch my work systems with structured workflows?”

You don’t need my specific implementation to experiment with this principle.

You might build something completely different.

The insight is just recognising that there’s a threshold between conversational intelligence and executable utility—and crossing that threshold requires architecture, not just better prompts.

That’s what I’m learning by building this. Not that my specific Monday workflow is the right answer, but that the question of “when does AI become a tool instead of a toy” has a surprisingly concrete answer: when it can execute structured workflows against your actual work systems, with your approval.

Until next time,

Chris