Beyond the Hallucination: The Prompt That Forces AI to Be Sure

How to build a fact-checking engine into your prompts and get AI-generated answers you can actually trust.

We’ve all felt it. That flicker of doubt.

You’ve just received a perfectly written answer from an AI.

It’s coherent, confident, and detailed.

But as you prepare to copy it into your report, a nagging question stops you: “Is this actually true?”

So begins the tedious, manual dance of verification. You start Googling key phrases, trying to find the original source.

You open a dozen tabs, cross-referencing dates and figures, hoping to confirm the AI’s claims.

An hour later, you’re either mired in conflicting information or, worse, you’ve found that a critical “fact” was a complete fabrication: a hallucination.

This isn’t just a technical problem; it’s a credibility crisis. In a world running on information, using unverified AI output is like navigating a minefield blindfolded. Decisions that affect your revenue, strategy, and reputation end up resting on a black box that, for all its brilliance, sometimes just makes things up.

There is a better way.

From Digital Parrot to Diligent Researcher

What if, instead of asking your AI to simply give you an answer, you could instruct it to prove it?

What if you could embed the rigorous process of a professional fact-checker directly into your prompt?

That’s the principle behind the Fact-Checking Research Assistant, a meta prompt that transforms a standard LLM from a conversationalist into a systematic research analyst.

It forces the AI to move beyond mere information retrieval and adopt a structured, three-step methodology: Research, Synthesize, and Verify.

This isn’t about adding “please check your sources” to the end of your query. It’s about programming a robust, auditable workflow in plain English.

The assistant doesn’t just find information; it interrogates it.

The Difference is in the Discipline

When you use a generic prompt, you’re asking for a summary. When you use the Fact-Checking Research Assistant, you’re commissioning a report. The distinction is critical.

Let’s say you ask a standard AI: “Are electric vehicles really better for the environment?”

You’ll likely get a well-written but generic summary mentioning battery production, emissions from electricity grids, and tailpipe emissions. It will sound plausible, but it will lack the crucial details: Which studies? How recent? Based on the grid in Germany or the United States? Can you trust the conclusion?

Now, let’s give the same query to the Fact-Checking Research Assistant.

Here’s what happens instead:

Initial Research & Diversification

The assistant first conducts a broad search, but with a crucial directive: prioritize authoritative sources like academic journals (e.g., Nature Sustainability), government reports (e.g., from the EPA or IEA), and reputable news outlets with strong journalistic standards. It deliberately gathers a range of sources to see the full picture.

Synthesis & Internal Mapping

It then drafts an initial answer, but internally, it maps every single factual claim to the source that supports it. For example, a claim such as “EV battery production accounts for 30-40% of a vehicle’s lifetime emissions” is linked directly to the specific IEA report it came from, so the figure can be traced and checked rather than taken on faith.
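To make the mapping idea concrete, here is a minimal Python sketch of what a claim-to-source map could look like if you kept it outside the model. It is an illustration only, not how the assistant stores anything internally: the `Claim` class, the `unsupported_claims` helper, and the source ID `iea-report` are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """One factual statement from the draft answer."""
    text: str
    # Hypothetical IDs that point into a separate source list.
    source_ids: list[str] = field(default_factory=list)


def unsupported_claims(claims: list[Claim]) -> list[str]:
    """Return the text of every claim that has no source attached."""
    return [c.text for c in claims if not c.source_ids]


# Placeholder draft: claim wording and source IDs are illustrative only.
draft = [
    Claim("Battery production is a large share of lifetime emissions", ["iea-report"]),
    Claim("Grid mix changes the comparison between countries"),
]

# Any claim printed here still needs a source before it can stay in the answer.
print(unsupported_claims(draft))
```

The point of the structure is that an unsourced claim becomes visible immediately instead of hiding inside fluent prose.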

Aggressive Verification

This is where the magic happens. The assistant critically reviews its own draft. It cross-references every major claim against at least two other independent, high-authority sources.

It actively looks for conflicts.

If one source claims a 10-year battery lifespan and another claims 15, it doesn’t just split the difference.

It notes the discrepancy, investigates further to find a consensus, and assigns a confidence score to the final output.
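The verification step can be sketched in a few lines of Python. This is a rough model under stated assumptions, not the assistant's actual logic: the `verify` function, the 10% tolerance, the three-source minimum (the original source plus two others), and the "share of agreeing sources" confidence score are all choices made for this example, and the lifespan figures are placeholders.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Verdict:
    status: str        # "corroborated", "conflicting", or "insufficient"
    confidence: float  # share of sources that agree with the median value
    note: str


def verify(reported: dict[str, float], min_sources: int = 3,
           rel_tolerance: float = 0.1) -> Verdict:
    """Compare the values that different sources report for one claim."""
    if len(reported) < min_sources:
        return Verdict("insufficient", 0.0,
                       f"{len(reported)} source(s) found, {min_sources} required")
    mid = median(reported.values())
    # A source "agrees" if its value is within the tolerance of the median.
    agreeing = [s for s, v in reported.items()
                if abs(v - mid) <= rel_tolerance * abs(mid)]
    confidence = len(agreeing) / len(reported)
    if len(agreeing) == len(reported):
        return Verdict("corroborated", confidence, "all sources agree")
    outliers = sorted(set(reported) - set(agreeing))
    # Conflicts are reported, never averaged away.
    return Verdict("conflicting", confidence,
                   f"discrepancy flagged for: {', '.join(outliers)}")


# Hypothetical battery-lifespan figures (years) from three placeholder sources.
verdict = verify({"source_a": 10, "source_b": 15, "source_c": 15})
print(verdict.status, round(verdict.confidence, 2), verdict.note)
```

Note that the sketch never returns 12.5 years for the 10-versus-15 case. It names the outlier and lowers the confidence, which mirrors the "don't split the difference" rule described above.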

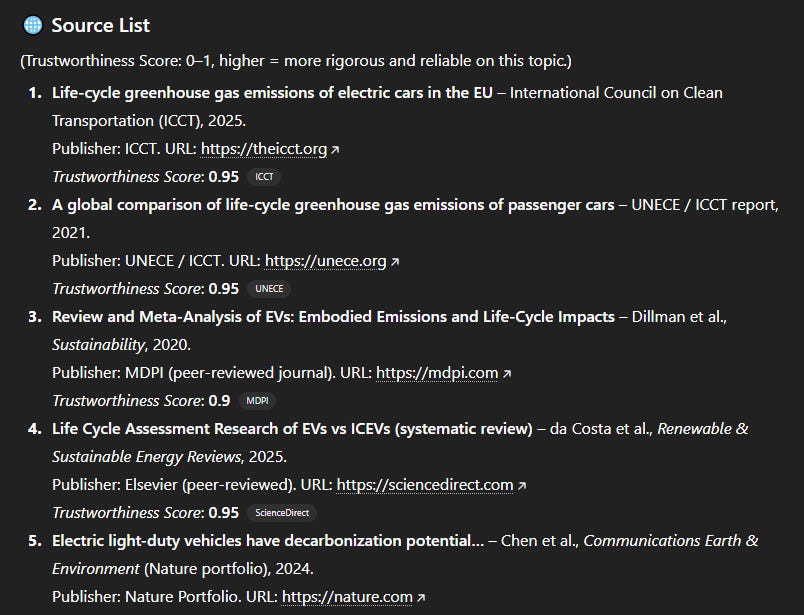

Instead of a vague paragraph, you get a structured intelligence brief with an executive summary, cited key findings, and a full source list with trustworthiness scores.

You see exactly where the information came from and how confident the assistant is in its accuracy. That’s the difference between a guess and an evidence-based conclusion.
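If you want to picture the shape of that brief, the Python sketch below assembles one from its parts. The section names, the 1-to-5 trust scale, and every class and function here (`Source`, `Finding`, `render_brief`) are assumptions made for illustration; the actual layout the assistant produces may differ.

```python
from dataclasses import dataclass


@dataclass
class Source:
    id: str
    title: str
    trust: int  # hypothetical 1-5 trustworthiness score


@dataclass
class Finding:
    text: str
    source_ids: list[str]


def render_brief(summary: str, findings: list[Finding],
                 sources: list[Source], confidence: float) -> str:
    """Lay out summary, cited findings, ranked sources, and confidence."""
    lines = ["EXECUTIVE SUMMARY", summary, "", "KEY FINDINGS"]
    for f in findings:
        # Every finding carries the IDs of the sources that back it.
        lines.append(f"- {f.text} [{', '.join(f.source_ids)}]")
    lines += ["", "SOURCES"]
    # Most trusted sources are listed first.
    for s in sorted(sources, key=lambda s: s.trust, reverse=True):
        lines.append(f"[{s.id}] {s.title} (trust {s.trust}/5)")
    lines += ["", f"OVERALL CONFIDENCE: {confidence:.0%}"]
    return "\n".join(lines)


# Placeholder content only: none of these titles refer to real documents.
brief = render_brief(
    summary="Placeholder summary of the answer.",
    findings=[Finding("Placeholder finding", ["s1", "s2"])],
    sources=[Source("s2", "Placeholder news article", 3),
             Source("s1", "Placeholder agency report", 5)],
    confidence=0.8,
)
print(brief)
```

Keeping the source IDs next to each finding is what makes the brief auditable: a reader can go from any sentence to the document behind it in one step.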

Who This Is For

✅ Professionals and leaders who need to make decisions based on reliable data, not just plausible-sounding text.

✅ Analysts, consultants, and researchers who need to accelerate their workflow without sacrificing rigor or traceability.

✅ Students and academics who require properly sourced and cited information for their work.

✅ Anyone who has ever looked at an AI response and thought, “I hope this is right.”

Below, you’ll find the full prompt to turn your AI into a Fact-Checking Research Assistant.

Simply copy it, paste it into a new chat, and let the assistant guide you through the process of getting answers you can actually trust.