Write Your First AI Agent Business Case and Get It Approved

The 3-Pillar Strategy Blueprint You Can Use Today

This week I’ve been on the road. On Tuesday I watched a CTO present their company’s “autonomous AI agent” to a packed room. The demo was good. The agent handled customer queries, pulled data from three different systems, and generated responses that sounded genuinely helpful.

Then someone at the back asked: “What happens when it makes a mistake? How do you roll back?”

Silence.

“Do you have prompt version control?”

“Well, we update the prompts in our codebase...”

“So you can’t demonstrate which version of your agent gave which advice to which customer?”

The session ended shortly after. I watched this pattern repeat again and again: different companies, same problem.

Great demos. No infrastructure to support them in production.

This isn’t my first article on AI business case development, but after what I witnessed this week, it’s time for a rethink. I’ve built a new capability mapping tool that forces a quick, honest assessment before you promise anything to your project sponsor.

If you’re writing an AI agent business case right now—or you’re about to—this will save you from the mistakes I watched play out in real time.

Let’s get into it.

The Pattern You Can’t Unsee

Today, there are more “Agent Platforms” to choose from than ever, even though there is little technical differentiation between them.

Their purpose? To help you build AI agents to automate complex workflows.

The reality? No memory, no vector databases, no state.

Each “agent” simply makes LLM calls inside a predefined workflow, pulling data from existing systems of record through an API.

It’s impressive engineering — but it’s not agentic.

It’s a stateless automation layer dressed in agentic branding. The agent doesn’t decide what to do; it executes what the workflow designer has already decided.

There’s no goal formation, no self-reflection, no adaptive planning. Just highly capable API orchestration with a language model in the middle.
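To make the distinction concrete, here is a minimal Python sketch of what such a workflow “agent” amounts to. Everything in it is a hypothetical stand-in: `fake_llm` replaces a real model call and `fetch_order` replaces a system-of-record API. It is an illustration of the pattern, not any vendor’s implementation.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns a canned reply."""
    return f"[model reply to: {prompt}]"


def fetch_order(order_id: str) -> dict:
    """Hypothetical stand-in for a system-of-record API lookup."""
    return {"order_id": order_id, "status": "shipped"}


def run_workflow(order_id: str, question: str,
                 llm: Callable[[str], str] = fake_llm) -> str:
    # The step order is fixed by the workflow designer, not chosen by the model.
    order = fetch_order(order_id)  # step 1: pull data through an API
    prompt = (
        f"Order {order['order_id']} is {order['status']}. "
        f"Customer asks: {question}"
    )
    return llm(prompt)             # step 2: a single LLM call


# Nothing is stored between calls: same input, same path, every time.
first = run_workflow("A1", "Where is my order?")
second = run_workflow("A1", "Where is my order?")
```

The function holds no memory and makes no decisions about what to do next, which is exactly why it is cheap to build and easy to reason about.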

And that’s the pattern I can’t unsee.

Across dozens of talks, the same story repeats: companies want the aura of agency without the infrastructure of it. They want “intelligent agents” that remember, reason, and adapt — but they build on top of systems that are fundamentally stateless.

This isn’t necessarily wrong. In fact, it’s the rational, low-risk move for most enterprises. But it explains why so many AI “agent” business cases fail before they even begin.

Most aren’t building agents. They’re extending automation — and confusing the two.

Once you see it, you start spotting it everywhere — from “AI copilots” in CRM systems to “autonomous assistants” in IT workflows. They’re all doing valuable work, but none of them are what the slide decks imply.

Which brings me to the real reason most business cases for AI agents fail: they start from the demo, not from the infrastructure.

Why Most Business Cases Fail Before You Write Them

The issue isn’t the writing. It’s building the business case backwards.

Here’s the pattern I see:

Someone sees an impressive demo — an “autonomous” agent running customer service interactions or reconciling invoices in seconds.

They imagine how that could transform their business.

They promise those outcomes internally.

Then they meet technical reality.

The project stalls, gets quietly shelved, or gets rebranded as a “pilot.”

The problem isn’t enthusiasm. It’s sequencing.

We start with what looks possible instead of what’s actually buildable.

Every successful AI business case I’ve seen starts from the opposite direction — infrastructure up, not imagination down.

Between “great idea” and “working product” there’s an unglamorous but vital step: the infrastructure audit.

Most teams skip it because it’s boring, technical, and full of awkward truths.

You can’t show it on a keynote slide. It doesn’t look like innovation. But it’s the single step that determines whether your agent project survives contact with reality.

Without that audit, you’re effectively writing fiction. You’re describing a system your company doesn’t yet have the parts to support.

You promise “learning from every interaction” — but there’s no vector store.

You promise “autonomous reasoning” — but there’s no state persistence or feedback loop.

You promise “enterprise-grade reliability” — but you don’t even have prompt version control.

These aren’t minor technicalities. They’re the difference between an LLM demo and a production system.
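Take the last of those promises as an example. A minimal sketch of prompt version control might look like the following, assuming an in-memory store and a content hash as the version id. The `PromptRegistry` class and its method names are hypothetical, and a production system would need durable storage and access controls.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory registry; a real one would use durable storage."""
    versions: dict = field(default_factory=dict)   # version id -> prompt text
    audit_log: list = field(default_factory=list)  # (customer, version, response)

    def register(self, prompt_text: str) -> str:
        # Content hash as version id: any edit to the prompt yields a new id.
        version = hashlib.sha256(prompt_text.encode("utf-8")).hexdigest()[:12]
        self.versions[version] = prompt_text
        return version

    def record(self, customer_id: str, version: str, response: str) -> None:
        # Refuse to log a response against a prompt we cannot reproduce.
        if version not in self.versions:
            raise KeyError(f"unknown prompt version: {version}")
        self.audit_log.append((customer_id, version, response))

    def prompts_used_for(self, customer_id: str) -> list:
        """Return (prompt text, response) pairs served to one customer."""
        return [(self.versions[v], r)
                for c, v, r in self.audit_log if c == customer_id]


registry = PromptRegistry()
v1 = registry.register("You are a helpful support agent.")
v2 = registry.register("You are a helpful support agent. Never quote prices.")
registry.record("cust-42", v1, "Your order has shipped.")
registry.record("cust-77", v2, "I can't quote prices, but here is the catalogue.")
```

With something like this in place, the question “which version of your agent gave which advice to which customer?” becomes a lookup rather than a silence.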

The Three-Pillar Blueprint

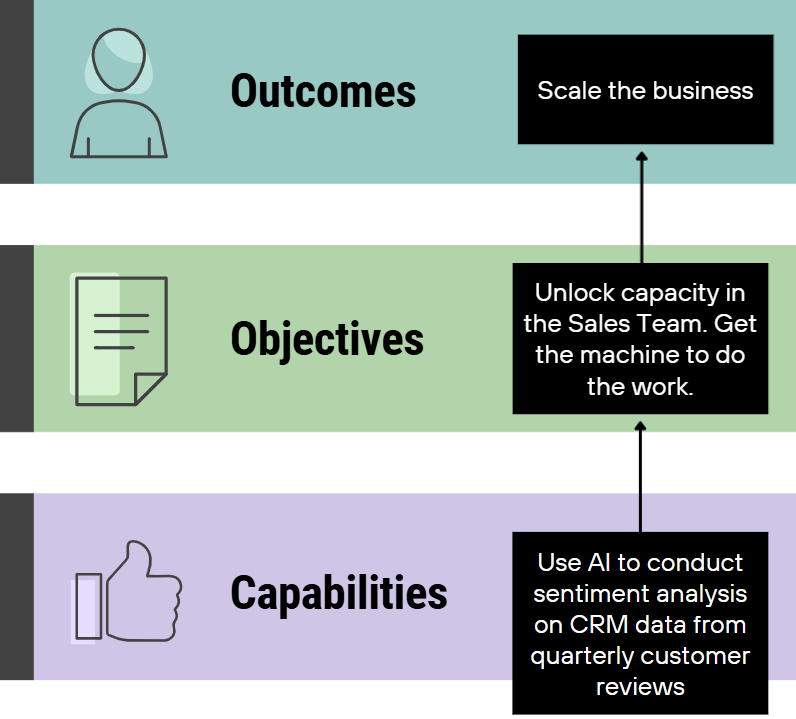

The easiest way to conceptualise what you need to do is with this simple bottom-up diagram.

We start with understanding the existing technology capabilities - what can we do with what we’ve got? Then we look at whether our objectives can be met by those capabilities. Finally, we map the objectives to the outcomes or value we’re trying to realise.
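The same bottom-up logic can be sketched in a few lines of Python. The capability, objective, and outcome names below are hypothetical examples chosen for illustration, not a prescribed taxonomy.

```python
# Pillar 1: capabilities the organisation already has (hypothetical).
capabilities = {"api_orchestration", "llm_calls"}

# Pillar 2: each objective and the capabilities it needs (hypothetical).
objectives = {
    "answer order-status queries": {"api_orchestration", "llm_calls"},
    "learn from every interaction": {"llm_calls", "vector_store"},
}

# Pillar 3: each claimed outcome and the objectives it rests on (hypothetical).
outcomes = {
    "lower support handling time": ["answer order-status queries"],
    "continuously improving answers": ["learn from every interaction"],
}


def supported_objectives(objectives: dict, capabilities: set) -> set:
    """Objectives whose every required capability already exists."""
    return {name for name, needs in objectives.items() if needs <= capabilities}


def defensible_outcomes(outcomes: dict, supported: set) -> list:
    """Outcomes whose every underlying objective is supported."""
    return [name for name, deps in outcomes.items()
            if all(dep in supported for dep in deps)]


supported = supported_objectives(objectives, capabilities)
defensible = defensible_outcomes(outcomes, supported)
```

In this example only the first outcome survives, because the second depends on a vector store that does not exist yet. Anything that drops out at this stage is a promise to leave out of the business case, or an infrastructure investment to cost explicitly.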

So if truly agentic AI isn’t really what people want yet, how do you build a business case that still gets funded, and works?
